Why DevSecOps?

The Benefits of Containers

Container Adoption

Container Security Considerations

Mission-critical information for the most technical people on the planet.

© 2019 Linux Journal. All rights reserved.

This site/publication contains materials that have been created, developed or commissioned by, and published with the permission of, Linux Journal (the "Materials"), and this site and any such Materials are protected by international copyright and trademark laws.

THE MATERIALS ARE PROVIDED "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE AND NON-INFRINGEMENT. The Materials are subject to change without notice and do not represent a commitment on the part of Linux Journal or its Web site sponsors. In no event shall Linux Journal or its sponsors be held liable for technical or editorial errors or omissions contained in the Materials, including without limitation, for any direct, indirect, incidental, special, exemplary or consequential damages whatsoever resulting from the use of any information contained in the Materials.

No part of the Materials (including but not limited to the text, images, audio and/or video) may be copied, reproduced, republished, uploaded, posted, transmitted or distributed in any way, in whole or in part, except as permitted under Sections 107 & 108 of the 1976 United States Copyright Act, without the express written consent of the publisher. One copy may be downloaded for your personal, noncommercial use on a single computer. In connection with such use, you may not modify or obscure any copyright or other proprietary notice.

The Materials may contain trademarks, services marks and logos that are the property of third parties. You are not permitted to use these trademarks, services marks or logos without prior written consent of such third parties.

Linux Journal and the Linux Journal logo are registered in the US Patent & Trademark Office. All other product or service names are the property of their respective owners. If you have any questions about these terms, or if you would like information about licensing materials from Linux Journal, please contact us via e-mail at info@linuxjournal.com.

Palo Alto Networks' mission is to protect our way of life in the digital age by preventing cyberattacks with our pioneering Security Operating Platform, providing highly effective cybersecurity in the cloud, across networks, and for mobile devices.

In the beginning—streamlining both Software Development and IT Operations—came DevOps. It merged two extremely important roles to deliver software effectively and efficiently. The DevOps role shortened the development lifecycle to deliver vital bug fixes, software updates and much needed features quickly. DevOps reduced the bottleneck of the entire process with the exception of one key component: security.

DevSecOps expands beyond the practice of DevOps by introducing the practice and mindset of security into the process. Its primary goal is to distribute security decisions safely at the necessary speed and scale while not sacrificing the required security. Remember, DevOps is centered around development and operations. If you need to take advantage of both the agility and responsiveness that DevOps offers, security needs to play a role in the software lifecycle.

Typically, security becomes an afterthought in the software development/delivery lifecycle, and it's often pushed off to the final stages of the process. Before the DevOps concept emerged, when the entire process consumed many months to even years, this was not considered problematic. Now that more companies have adopted Continuous Delivery/Continuous Integration (CD/CI) models, releases tend to occur a lot more frequently. I'm talking about weeks, if not days, before a new revision of an application drops into the public domain. Waiting until the very last minute to ensure that the application is safe and secure to deploy destroys the entire process and potentially could derail the delivery of the application. What could have been a few weeks, might end up being a few months of development, testing and integration.

What does DevSecOps look like? Basically with DevSecOps, security is designed into the application or feature at the onset of the process. A good strategy is to determine risk tolerance and conduct a risk analysis of a given feature. How much security are you willing to provide the feature? And how consistent are you going to be with that requirement throughout the lifecycle of that same feature? Now, what happens when you scale that model across multiple features, sometimes being worked on simultaneously? Automation certainly will help out a lot here. Ideally, this automation would maintain short and frequent development models while also integrating your security measures with minimal to no disruptions to your operations.

DevSecOps introduces many other advantages, including but not limited to the following:

DevSecOps is a critical component in markets where software updates already are performed multiple times a day. Older security models just cannot keep up.

The six most important components that make up the DevSecOps approach are:

Some may argue that the "security" piece is nothing more than a mindset or philosophy. Even if that were the case, a large part of the challenge is identifying risks early on and using the right tools to guide you through the entire process—from the very beginning to the very end.

Containers and container technologies have redefined the way many organizations conduct business. The technology brings unprecedented agility and scalability. It should come as no surprise that container technologies are widely adopted and continue to thrive in the wild. They even form the foundations to many of the cloud native, mobile and cross-platform applications that we take for granted today. Knowing this, it does raise the question, how can you be sure that each deployment is safe and secured?

To recap, containers decouple software applications or services (often referred to as microservices) from the operating system, which gives users a clean and minimal Linux environment while running the desired application(s) in one or more isolated "containers". Containers were and still are an ideal technology for the ability to isolate processes within a respective container. This process isolation prevents a misbehaving application in one container from affecting processes running in another container. Also, containerized services are designed not to influence or disturb the host machine.

Another key feature of containers is portability. This is typically accomplished by abstracting away the networking, storage and operating system details from the application, resulting in a truly configuration-independent application, guaranteeing that the application's environment always will remain the same, regardless of the machine on which it is enabled. With an orchestration framework behind it, one or more container images can be deployed simultaneously and at scale.

Containers are designed to benefit both developers and system administrators. The technology has made itself an integral part of many DevOps toolchains. Developers can focus on writing code without having to worry about the system ultimately hosting it. There is no need to install and configure complex databases or worry about switching between incompatible language toolchain versions. Containers streamline software delivery and give the operations staff flexibility, often reducing the number of physical systems needed to host some of the smaller and more basic applications.

The beauty of containers is that they are completely platform-agnostic. As a result of their portability, they can be deployed on-premises in local data centers or out in the cloud. Under the same management framework, they can be managed and monitored seamlessly across both hybrid and multi-cloud environments. You even can run containers within virtual machines or serverless in cloud native applications. The possibilities are endless.

According to a 2018 survey conducted by the Gartner research firm, by the year 2020, more than 50% of the IT organizations that were surveyed will be using container technologies. This is up from less than 20% in the 2017 survey. Without a doubt these and many other organizations are seeing the value in using containers.

In addition, a 2017 Forrester report, "Containers: Real Adoption and Use Cases in 2017", commissioned by Dell EMC, Intel and Red Hat, revealed that of the 195 US/European managers or IT decision-makers responsible for public/private cloud decisions surveyed, at least 63% used containers with more than 100 instances deployed. That number was projected to grow in the coming years. The very same survey listed "security" as the number one roadblock to container technology deployment (37%).

Think about it. A "build once, run everywhere" application can be affected (alongside the many other container applications) by an infected or vulnerable kernel hosting it. It also can be affected by the applications and libraries it's packaged with. This would not be the case in a virtual machine, as the application would be fully isolated from the other(s). And now that more workloads have moved to the cloud, where organizations have less control over the system(s) hosting their containers and cloud native applications, this becomes more of a risk.

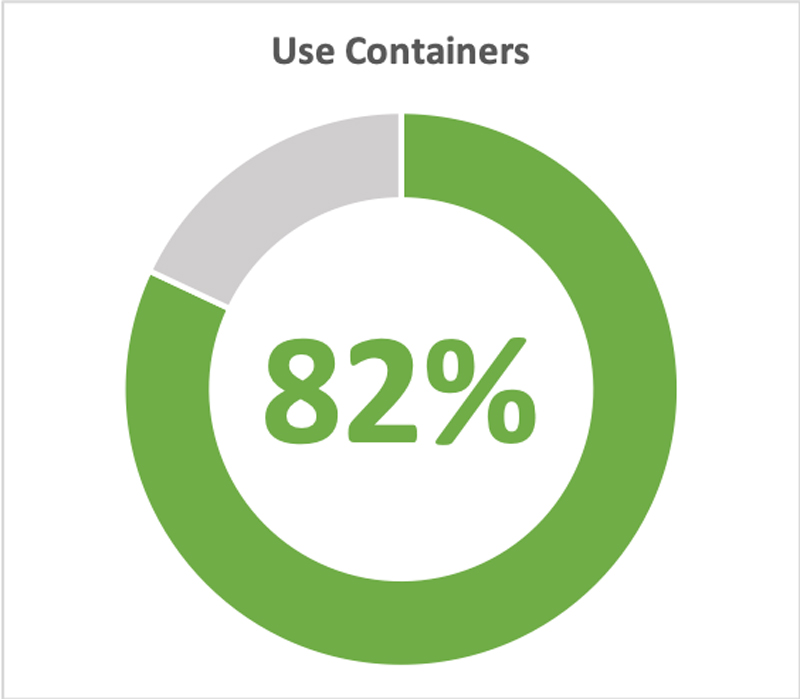

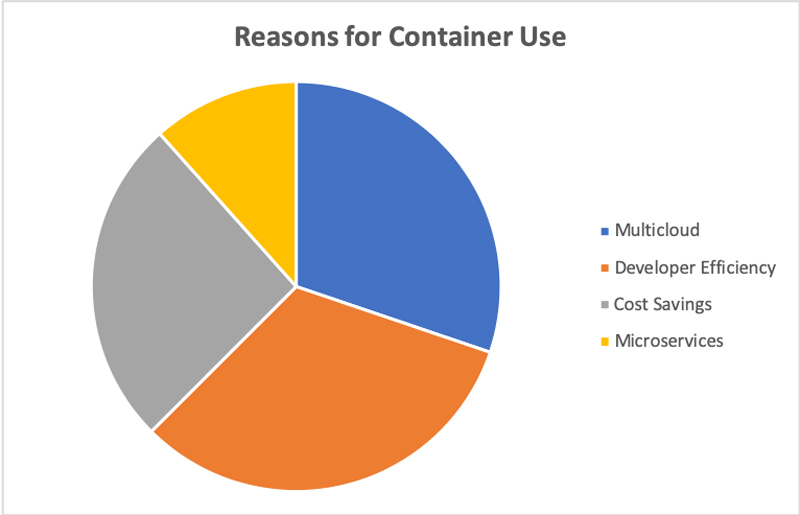

What's driving this adoption? A Portworx "2018 Container Adoption Survey" may provide the answer. Out of the 519 IT professionals that were surveyed, nearly 82% were already running container technologies, and 84% of those who were running them were running them in production. And of those, 30.2% claimed it was to enable their applications to run on multiple cloud platforms and to avoid vendor lock-in. The rest stated that it was to increase developer efficiency (32.3%), save on their infrastructure costs (25.9%) and support microservices architectures (11.6%).

Figure 1. IT Professionals Already Using Container Technologies

Figure 2. Reasons for Using Containers

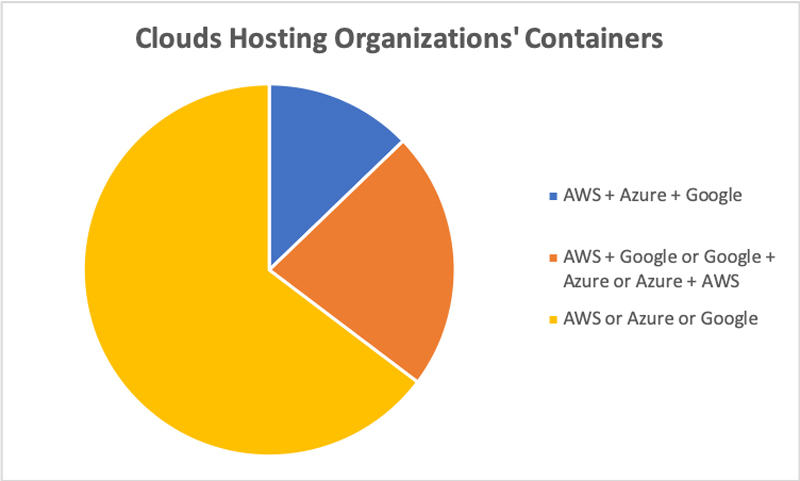

For those hosting their containers in the cloud, 12.8% were running them in three separate clouds (Google + Azure + AWS), while 22.5% were running them in two clouds (AWS + Google, Google + Azure or Azure + AWS).

Figure 3. Single or Multicloud Deployments of Containers

Although container technologies bring an added layer of security for running applications in an isolated environment, containers alone are not an alternative to taking proper security measures. Unlike traditional hypervisors, a container can have a more direct path to the host operating system's kernel, which is why it is standard procedure to drop privileges as quickly as possible and run all the services as non-root wherever possible. Also, note that whenever a containerized process requires access to the underlying filesystem, you should make it a good habit of mounting that filesystem as read-only.

The state of a container image also raises concern, which is why it's improper to run containers (be it Docker or anything else) from an untrusted source. When deploying an unknown or unofficial image, you increase the risk of running vulnerable or buggy code in your environment. And if that container is configured to host a privileged process, any attack exposing a potential vulnerability eventually could cost the data center an entire host system (and maybe more).

There also exists the potential for a container to run system binaries that it probably shouldn't be touching in the first place, at least without your knowledge. Another similar scenario is when a rogue application or attacker gains container access through an application vulnerability and replaces some of the underlying system binaries with one that does not belong or was not intended to run in that container image—all of which will continue to run during the life of that container. This can result in additional system and network compromises or worse.

Having the right tools to enable the Sec in DevSecOps will go a long way and can potentially save your firm or your customer tons of hours of headache (and downtime), and in turn, lots of dollars to repair the damage done. Damage is not confined only to software or data. It can also destroy reputation.

Costs are one of the key factors to container adoption. At least, that's what 37% of respondents stated according to the Survata "Container Adoption and Drivers" survey conducted in 2016. Another 21% cited the increase in frequency of software releases. Regardless of how you look at it, the main takeaway is a decrease in spending and increase in profit.

Clearly, if it were not for the many benefits, industries would not be deploying container technologies. Such benefits cited in the same survey include improved flexibility for IT infrastructure (63%), overall IT cost savings (53%), increased speed/productivity for developers deploying code (52%), greater responsiveness to business needs (40%) and more ROI from the cloud (38%).

At the time, two-thirds of IT professionals expected their company to save at least 16% on IT costs by using containers, while one-fifth indicated that their savings would exceed 30%.

At the end of the day, the initial investment into building a container-friendly infrastructure can be quite expensive. Costs include the following:

I'm talking about an up-front Capex with an ongoing Opex here, much like any other technology deployment inside the data center. Either way, it's important to assess the ROI for these Capex and Opex charges.

Digging deeper into the key aspects of a container-friendly infrastructure, you need to consider the following aspects of containers:

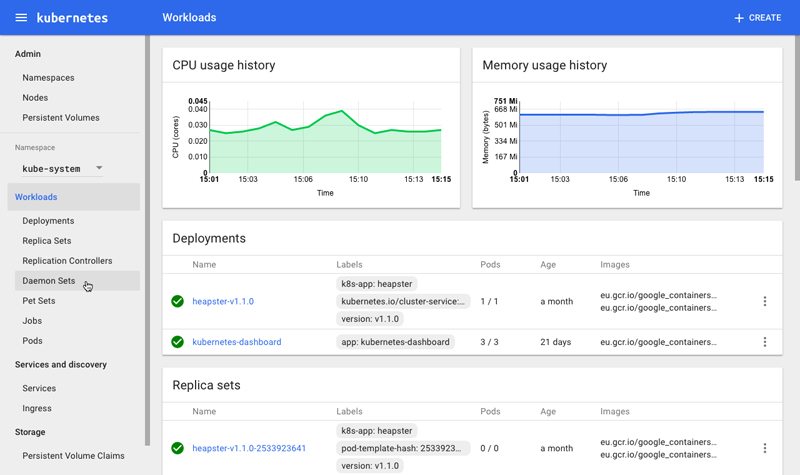

Figure 4. The Kubernetes Web UI Dashboard Source: kubernetes.io

Once you make all the investments to define, implement, secure and support a container-ready infrastructure properly, ROI will immediately follow. The amount of time it takes for a return on that investment is reduced further once you enable the security piece of DevSecOps. It's simple. The less resources spent on investigating, debugging and addressing production code, the less money spent in general.

Now, what do you look for to add security to your DevOps ecosystem? You'll need a product that focuses on container security across an application's lifecycle—one that's fully committed to providing enterprise security with DevSecOps agility and able to integrate with any modern CI tool or registry. It also should be designed to be deployed alongside your virtual machines, containers and cloud native applications.

I'm talking about an end-to-end security solution built for containerized environments that protects against software exploits, malware and active threats through its advanced intelligence and machine-learning capabilities. One that will profile expected container behavior automatically, and create and enforce security models at runtime. The goal would be to build security models of expected behavior and enforce them automatically via whitelisting. Ideally, security can be introduced much earlier in the development lifecycle to identify and block threats from developer workstations through to production.

The following are some key features to look for:

DevSecOps is a natural and necessary response to the bottleneck effect introduced by older security models layered on top of modern CD pipelines. Its goal is to bridge the gap between IT and security while also ensuring fast and safe delivery of code. It is meant to address the security concerns in every phase of the development lifecycle. As more organizations rely on containerized applications to keep operations up and running, security efforts outside traditional methods are crucial to prevent costly downtimes.