SO_REUSEPORT socket option. By Krishna Kumar

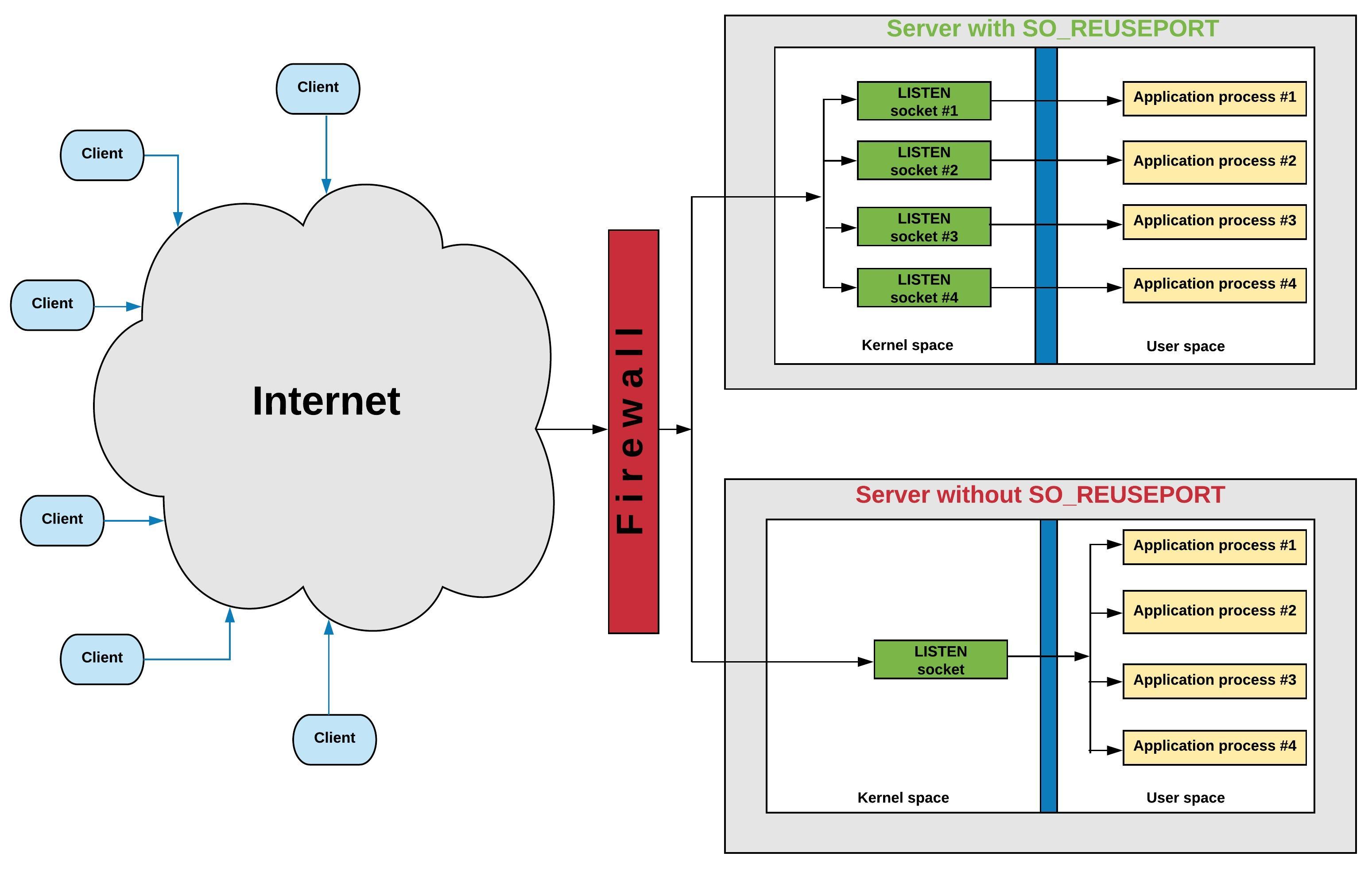

HAProxy and NGINX are among the few applications that use the TCP SO_REUSEPORT socket option of the Linux networking stack. This option, initially introduced in 4.4BSD, is used to implement high-performance servers that make better use of today's large multicore systems. The first few sections of this article explain some essential concepts of TCP/IP sockets, and the remaining sections use that knowledge to describe the rationale, usage and implementation of the SO_REUSEPORT socket option.

A TCP connection is defined by a unique 5-tuple:

[ Protocol, Source IP Address, Source Port, Destination IP Address, Destination Port ]

Individual tuple elements are specified in different ways by clients and servers. Let's take a look at how each tuple element is initialized, starting with a client application.

Protocol: this field is initialized when the socket is created based on parameters provided by the application. The protocol is always TCP for the purposes of this article. For example:

socket(AF_INET, SOCK_STREAM, 0); /* create a TCP socket */

Source IP address and port: these are usually set by the kernel when the application calls connect() without a prior invocation of bind(). The kernel picks a suitable IP address for communicating with the destination server and a source port from the ephemeral port range (sysctl net.ipv4.ip_local_port_range).

Destination IP address and port: these are set by the application by invoking connect(). For example:

server.sin_family = AF_INET;
server.sin_port = htons(SERVER_PORT);
bcopy(server_ent->h_addr, &server.sin_addr.s_addr,
      server_ent->h_length);

/* Connect to server, and set the socket's destination IP
 * address and port# based on above parameters. Also, request
 * the kernel to automatically set the Source IP and port# if
 * the application did not call bind() prior to connect().
 */
connect(fd, (struct sockaddr *)&server, sizeof server);
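To see which source port the kernel picked, an application can call getsockname() on the connected socket. The following is a minimal sketch rather than part of the article's test programs: loopback_listener() and connect_unbound() are hypothetical helper names, and the code assumes a loopback interface at 127.0.0.1 is available.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: listen on 127.0.0.1 at a kernel-chosen port.
 * Stores the chosen port (host byte order) in *port.
 * Returns the listening fd, or -1 on error. */
static int loopback_listener(uint16_t *port)
{
    struct sockaddr_in addr;
    socklen_t len = sizeof addr;
    int fd = socket(AF_INET, SOCK_STREAM, 0);

    if (fd < 0)
        return -1;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0; /* 0 asks the kernel to choose a port */
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(fd, 1) < 0 ||
        getsockname(fd, (struct sockaddr *)&addr, &len) < 0) {
        close(fd);
        return -1;
    }
    *port = ntohs(addr.sin_port);
    return fd;
}

/* Hypothetical helper: connect to 127.0.0.1:server_port without calling
 * bind(), then report the source port the kernel assigned.
 * Returns the connected fd, or -1 on error. */
static int connect_unbound(uint16_t server_port, uint16_t *src_port)
{
    struct sockaddr_in server, local;
    socklen_t len = sizeof local;
    int fd = socket(AF_INET, SOCK_STREAM, 0);

    if (fd < 0)
        return -1;
    memset(&server, 0, sizeof server);
    server.sin_family = AF_INET;
    server.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    server.sin_port = htons(server_port);
    /* No bind(): connect() lets the kernel choose source IP and port. */
    if (connect(fd, (struct sockaddr *)&server, sizeof server) < 0 ||
        getsockname(fd, (struct sockaddr *)&local, &len) < 0) {
        close(fd);
        return -1;
    }
    *src_port = ntohs(local.sin_port);
    return fd;
}
```

Calling loopback_listener() and then connect_unbound() with the returned port should report a source port taken from the ephemeral range configured on the system.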

A server application initializes the tuple elements as follows.

Protocol: initialized in the same way as described for a client application.

Source IP address and port: set by the application when it invokes bind(), for example:

srv_addr.sin_family = AF_INET;
srv_addr.sin_addr.s_addr = INADDR_ANY;
srv_addr.sin_port = htons(SERVER_PORT);
bind(fd, (struct sockaddr *)&srv_addr, sizeof srv_addr);

Destination IP address and port: a client connects to a server by completing the TCP three-way handshake. The server's TCP/IP stack creates a new socket to track the client connection and sets its Source IP:Port and Destination IP:Port from the incoming client connection parameters. The new socket is transitioned to the ESTABLISHED state, while the server's LISTEN socket is left unmodified. At this time, the server application's call to accept() on the LISTEN socket returns with a reference to the newly ESTABLISHED socket.

See the listing at the end of this article for an example implementation of client and server applications.

A TIME-WAIT socket is created when an application closes its end of a TCP connection first. This results in the initiation of a TCP four-way handshake, during which the socket state changes from ESTABLISHED to FIN-WAIT1 to FIN-WAIT2 to TIME-WAIT, before the socket is closed. The TIME-WAIT state is a lingering state for protocol reasons. An application can instruct the TCP/IP stack not to linger a connection by sending a TCP RST packet. In doing so, the connection is terminated instantly without going through the TCP four-way handshake. The following code fragment implements the reset of a connection by specifying a socket linger time of zero seconds:

const struct linger opt = { .l_onoff = 1, .l_linger = 0 };

setsockopt(fd, SOL_SOCKET, SO_LINGER, &opt, sizeof opt);

close(fd);

A server typically executes the following system calls at start up:

1) Create a socket:

server_fd = socket(...);

2) Bind to a well known IP address and port number:

ret = bind(server_fd, ...);

3) Mark the socket as passive by changing its state to LISTEN:

ret = listen(server_fd, ...);

4) Wait for a client to connect and get a reference file descriptor:

client_fd = accept(server_fd, ...);

Any new socket, created via the socket() or accept() system calls, is tracked in the kernel using a "struct sock" structure. In the code fragment above, a socket is created in step #1 and given a well known address in step #2. This socket is transitioned to the LISTEN state in step #3. Step #4 calls accept(), which blocks until a client connects to this IP:port. After the client completes the TCP three-way handshake, the kernel creates a second socket and returns a reference to this socket. The state of the new socket is set to ESTABLISHED, while the server_fd socket remains in the LISTEN state.

Let's look at two use cases to better understand the SO_REUSEADDR option for TCP sockets.

Use case #1: a server application restarts in two steps, an exit followed by a start up. During the exit, the server's LISTEN socket is closed immediately. Because of existing connections to the server, sockets can remain on the system in one of two states: the TIME-WAIT state or the ESTABLISHED state.

When the server is subsequently started up, its attempt to bind to its LISTEN port fails with EADDRINUSE, because some sockets on the system are already bound to this IP:port combination (for example, a socket in either the TIME-WAIT or ESTABLISHED state). Here's a demonstration of this problem:

# Server is listening on port #45000.

$ ss -tan | grep :45000

LISTEN 0 1 10.20.1.1:45000 *:*

# A client connects to the server using its source

# port 54762. A new socket is created and is seen

# in the ESTABLISHED state, along with the

# earlier LISTEN socket.

$ ss -tan | grep :45000

LISTEN 0 1 10.20.1.1:45000 *:*

ESTAB 0 0 10.20.1.1:45000 10.20.1.100:54762

# Kill the server application.

$ pkill -9 my_server

# Restart the server application.

$ ./my_server 45000

bind: Address already in use

# Find out why

$ ss -tan | grep :45000

TIME-WAIT 0 0 10.20.1.1:45000 10.20.1.100:54762

This listing shows that the earlier ESTABLISHED socket is the same one that is now seen in the TIME-WAIT state. The presence of this socket bound to the local address (10.20.1.1:45000) prevented the server from being able to subsequently bind() to the same IP:port combination for its LISTEN socket.

Use case #2: if two processes attempt to bind() to the same IP:port combination, the process that executes bind() first succeeds, while the latter fails with EADDRINUSE. Another instance of this use case involves an application binding to a specific IP:port (for example, 192.168.100.1:80), and another application attempting to bind to the wildcard IP address with the same port number (for example, 0.0.0.0:80) or vice versa. The latter bind() invocation fails, as it attempts to bind to all addresses with the same port number that was used by the first process. If both processes set the SO_REUSEADDR option on their sockets, both sockets can be bound successfully. However, note this caveat: if the first process calls bind() and listen(), the second process still would be unable to bind() successfully, since the first socket is in the LISTEN state. Hence, this use case is usually meant for clients that want to bind to a specific IP:port before connecting to different services.

How does SO_REUSEADDR help solve this problem? When the server is restarted and invokes bind() on a socket with SO_REUSEADDR set, the kernel ignores all non-LISTEN sockets bound to the same IP:port combination. The UNIX Network Programming book describes this feature as: "SO_REUSEADDR allows a listening server to start and bind its well-known port, even if previously established connections exist that use this port as their local port".
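The behavior described for SO_REUSEADDR, including the LISTEN caveat from use case #2, can be tried with a small helper. This is a sketch under stated assumptions: bind_reuseaddr() and local_port() are hypothetical names introduced here, the sockets are bound to 127.0.0.1 only, and the outcome described in the note after the code is what the text above leads one to expect on Linux.

```c
#include <arpa/inet.h>
#include <errno.h>
#include <netinet/in.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: create a TCP socket, enable SO_REUSEADDR and
 * bind it to 127.0.0.1:port (port 0 lets the kernel choose).
 * Returns the fd, or -1 with errno describing the failure. */
static int bind_reuseaddr(uint16_t port)
{
    struct sockaddr_in addr;
    int one = 1, saved;
    int fd = socket(AF_INET, SOCK_STREAM, 0);

    if (fd < 0)
        return -1;
    /* The option must be set before bind() to have any effect. */
    if (setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &one, sizeof one) < 0)
        goto fail;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(port);
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0)
        goto fail;
    return fd;
fail:
    saved = errno; /* keep the original error across close() */
    close(fd);
    errno = saved;
    return -1;
}

/* Hypothetical helper: local port of a bound socket, or 0 on error. */
static uint16_t local_port(int fd)
{
    struct sockaddr_in addr;
    socklen_t len = sizeof addr;

    if (getsockname(fd, (struct sockaddr *)&addr, &len) < 0)
        return 0;
    return ntohs(addr.sin_port);
}
```

With these helpers, two non-listening sockets can be bound to the same port, but once the first socket has called listen(), a further bind_reuseaddr() on that port is expected to fail with EADDRINUSE.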

However, we need the SO_REUSEPORT option to allow two or more processes to invoke listen() on the same port successfully. I describe this option in more detail in the remaining sections.

While SO_REUSEADDR allows sockets to bind() to the same IP:port combination when existing ESTABLISHED or TIME-WAIT sockets may be present, SO_REUSEPORT allows binding to the same IP:port when existing LISTEN sockets also may be present. The kernel ignores all sockets, including sockets in the LISTEN state, when an application invokes bind() or listen() on a socket with SO_REUSEPORT enabled. This permits a server process to be invoked multiple times, allowing many processes to listen for connections. The next section examines the kernel implementation of SO_REUSEPORT.

When multiple sockets are in the LISTEN state, how does the kernel decide which socket (and, thus, which application process) receives an incoming connection? Is this determined using a round-robin, least-connection, random or some other method? Let's take a deeper look into the TCP/IP code to understand how socket selection is performed.

Notes:

sk represents a kernel socket data structure of type "struct sock".

skb, or the socket buffer, represents a network packet of type "struct sk_buff".

src_addr, src_port and dst_addr, dst_port refer to the source IP:port and destination IP:port, respectively.

As an incoming packet (skb) moves up the TCP/IP stack, the IP subsystem calls into the TCP packet receive handler, tcp_v4_rcv(), providing the skb as argument. tcp_v4_rcv() attempts to locate a socket associated with this skb:

sk = __inet_lookup_skb(&tcp_hashinfo, skb, src_port, dst_port);

tcp_hashinfo is a global variable of type struct inet_hashinfo, containing, among others, two hash tables of ESTABLISHED and LISTEN sockets, respectively. The LISTEN hash table is sized to 32 buckets, as shown below:

#define LHTABLE_SIZE 32 /* Yes, this really is all you need */

struct inet_hashinfo {
    /* Hash table for fully established sockets */
    struct inet_ehash_bucket *ehash;

    /* Hash table for LISTEN sockets */
    struct inet_listen_hashbucket listening_hash[LHTABLE_SIZE];
};

struct inet_hashinfo tcp_hashinfo;
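With only 32 buckets, many ports share a LISTEN bucket. The following user-space sketch shows one way a port can be reduced to a slot number. It is an assumption made for illustration: listen_bucket() is a hypothetical name, and the kernel's own hash function may mix in additional inputs before masking.

```c
#include <stdint.h>

#define LHTABLE_SIZE 32 /* same table size as in the listing above */

/* Hypothetical model: map a port (host byte order) to a slot in the
 * range 0 .. LHTABLE_SIZE-1 by masking, which works because the table
 * size is a power of two. */
static unsigned int listen_bucket(uint16_t port)
{
    return port & (LHTABLE_SIZE - 1);
}
```

Under this model, ports 45000 and 45032 land in the same bucket, which is why the lookup still has to compare each socket in the bucket against the incoming packet.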

__inet_lookup_skb() extracts the source and destination IP addresses from the incoming skb and passes these along with the source and destination ports to __inet_lookup() to find the associated ESTABLISHED or LISTEN socket, as shown here:

struct sock *__inet_lookup_skb(tcp_hashinfo, skb, src_port, dst_port)
{
    /* Get the IPv4 header to know
     * the source and destination IPs */
    const struct iphdr *iph = ip_hdr(skb);

    /*
     * Look up the incoming skb in tcp_hashinfo using the
     * [ Source-IP:Port, Destination-IP:Port ] tuple.
     */
    return __inet_lookup(tcp_hashinfo, skb, iph->saddr,
                         src_port, iph->daddr, dst_port);
}

__inet_lookup() looks in tcp_hashinfo->ehash for an already established socket matching the client four-tuple parameters. In the absence of an established socket, it looks in tcp_hashinfo->listening_hash for a LISTEN socket:

struct sock *__inet_lookup(tcp_hashinfo, skb, src_addr,
                           src_port, dst_addr, dst_port)
{
    /* Convert dest_port# from network to host byte order */
    u16 hnum = ntohs(dst_port);

    /* First look for an established socket ... */
    sk = __inet_lookup_established(tcp_hashinfo, src_addr,
                                   src_port, dst_addr, hnum);
    if (sk)
        return sk;

    /* failing which, look for a LISTEN socket */
    return __inet_lookup_listener(tcp_hashinfo, skb, src_addr,
                                  src_port, dst_addr, hnum);
}

The __inet_lookup_listener() function implements the selection of a LISTEN socket:

struct sock *__inet_lookup_listener(tcp_hashinfo, skb,
                                    src_addr, src_port, dst_addr, dst_port)
{
    /*
     * Use the destination port# to calculate a hash table
     * slot# of the listen socket. inet_lhashfn() returns
     * a number between 0 and LHTABLE_SIZE-1 (both inclusive).
     */
    unsigned int hash = inet_lhashfn(dst_port);

    /* Use this slot# to index the global LISTEN hash table */
    struct inet_listen_hashbucket *ilb =
        &tcp_hashinfo->listening_hash[hash];

    /* Track best matching LISTEN socket
     * so far and its "score" */
    struct sock *result = NULL, *sk;
    int score, hi_score = 0;
    unsigned int phash;

    for each socket, 'sk', in the selected hash bucket, 'ilb' {
        /*
         * Calculate the "score" of this LISTEN socket (sk)
         * against the incoming skb. Score is computed on
         * some parameters, such as exact destination port#,
         * destination IP address exact match (as against
         * matching INADDR_ANY, for example),
         * with each criterion getting a different weight.
         */
        score = compute_score(sk, dst_port, dst_addr);
        if (score > hi_score) {
            /* Highest score - best matched socket till now */
            if (sk->sk_reuseport) {
                /*
                 * sk has SO_REUSEPORT feature enabled. Call
                 * inet_ehashfn() with dest_addr, dest_port,
                 * src_addr and src_port to compute a
                 * 2nd hash, phash.
                 */
                phash = inet_ehashfn(dst_addr, dst_port,
                                     src_addr, src_port);

                /* Select a socket from sk's SO_REUSEPORT group
                 * using 'phash'.
                 */
                result = reuseport_select_sock(sk, phash);
                if (result)
                    return result;
            }

            /* Update new best socket and its score */
            result = sk;
            hi_score = score;
        }
    }

    return result;
}

Selecting a socket from the SO_REUSEPORT group is done with reuseport_select_sock():

struct sock *reuseport_select_sock(struct sock *sk,
                                   unsigned int phash)
{
    /* Get control block of sockets
     * in this SO_REUSEPORT group */
    struct sock_reuseport *reuse = sk->sk_reuseport_cb;

    /* Get count of sockets in the group */
    int num_socks = reuse->num_socks;

    /* Calculate value between 0 and 'num_socks-1'
     * (both inclusive) */
    unsigned int index = reciprocal_scale(phash, num_socks);

    /* Index into the SO_REUSEPORT group using this index */
    return reuse->socks[index];
}

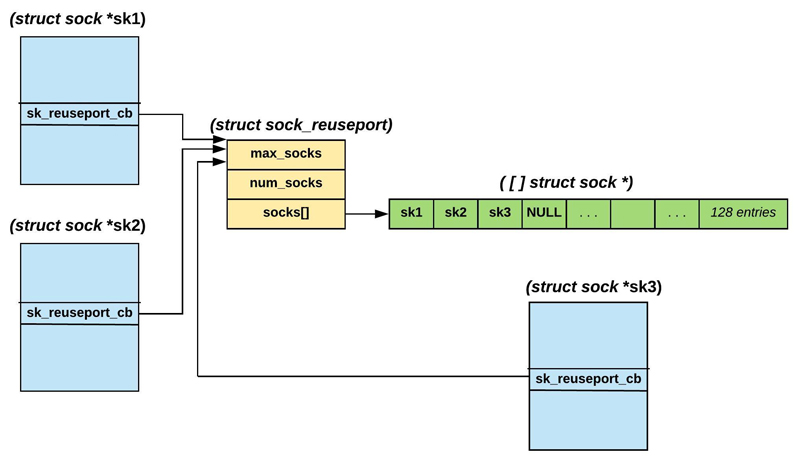

Let's step back a little to understand how this works. When the first process invoked listen() on a socket with SO_REUSEPORT enabled, a pointer in its "struct sock" structure, sk_reuseport_cb, is allocated. This structure is defined as:

struct sock_reuseport {
    u16 max_socks;         /* Allocated size of socks[] array */
    u16 num_socks;         /* #Elements in socks[] */
    struct sock *socks[0]; /* All sockets added to this group */
};

The last element of this structure is a "flexible array member". The entire structure is allocated such that the socks[] array has 128 elements of type struct sock *. Note that as the number of listeners increases beyond 128, this structure is reallocated such that the socks[] array size is doubled.

The first socket, sk1, that invoked listen(), is saved in the first slot of its own socks[] array, for example:

sk1->sk_reuseport_cb->socks[0] = sk1;

When listen() is subsequently invoked on other sockets (sk2, ...) bound to the same IP:port, two operations are performed:

1) The new socket (sk2, ...) is appended to the sk_reuseport_cb->socks[] of the first socket (sk1).

2) The new socket's sk_reuseport_cb pointer is made to point to the first socket's sk_reuseport_cb. This ensures that all LISTEN sockets of the same group reference the same sk_reuseport_cb.

Figure 1 shows the result of these two steps.

Figure 1. Representation of the SO_REUSEPORT Group of LISTEN Sockets

In Figure 1, sk1 is the first LISTEN socket, and sk2 and sk3 are sockets that invoked listen() subsequently. The two steps described above are performed in the following code snippet and executed via the listen() call chain:

static int inet_reuseport_add_sock(struct sock *new_sk)
{
    /*
     * First check if another identical LISTEN socket, prev_sk,
     * exists. ... Then do the following:
     */
    if (prev_sk) {
        /*
         * Not the first listener - do the following:
         * - Grow prev_sk->sk_reuseport_cb structure if required.
         * - Save new_sk socket pointer in prev_sk's socks[].
         *     prev_sk->sk_reuseport_cb->socks[num_socks] = new_sk;
         * - prev_sk->sk_reuseport_cb->num_socks++;
         * - Pointer assignment of the control block:
         *     new_sk->sk_reuseport_cb = prev_sk->sk_reuseport_cb;
         */
        return reuseport_add_sock(new_sk, prev_sk);
    }

    /*
     * First listener - do the following:
     * - allocate new_sk->sk_reuseport_cb to contain 128 socks[]
     * - new_sk->sk_reuseport_cb->max_socks = 128;
     * - new_sk->sk_reuseport_cb->socks[0] = new_sk;
     * - new_sk->sk_reuseport_cb->num_socks = 1;
     */
    return reuseport_alloc(new_sk);
}
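The allocate-then-double behavior of the socks[] array can be modeled in user space. The sketch below is not kernel code: struct reuse_group, group_alloc() and group_add() are hypothetical names, plain void pointers stand in for struct sock pointers, and locking is left out entirely.

```c
#include <stdint.h>
#include <stdlib.h>

#define INIT_SOCKS 128 /* initial socks[] capacity, as in the text */

/* User-space stand-in for the control block described above. */
struct reuse_group {
    uint16_t max_socks; /* allocated size of socks[] */
    uint16_t num_socks; /* number of used elements */
    void *socks[];      /* flexible array member */
};

/* Allocate an empty group with room for max_socks entries. */
static struct reuse_group *group_alloc(uint16_t max_socks)
{
    struct reuse_group *g =
        calloc(1, sizeof *g + max_socks * sizeof g->socks[0]);

    if (g)
        g->max_socks = max_socks;
    return g;
}

/* Append sk, doubling the array when it is full. Returns the group,
 * which may have moved, or NULL on failure (the old group stays
 * valid). Callers must switch to the returned pointer. */
static struct reuse_group *group_add(struct reuse_group *g, void *sk)
{
    if (g->num_socks == g->max_socks) {
        struct reuse_group *bigger;
        uint16_t new_max;

        if (g->max_socks > UINT16_MAX / 2)
            return NULL; /* doubling would overflow the u16 counters */
        new_max = (uint16_t)(g->max_socks * 2);
        bigger = realloc(g, sizeof *g + new_max * sizeof g->socks[0]);
        if (!bigger)
            return NULL;
        bigger->max_socks = new_max;
        g = bigger;
    }
    g->socks[g->num_socks++] = sk;
    return g;
}
```

In this model, adding a 129th entry to a group created with group_alloc(INIT_SOCKS) grows the capacity from 128 to 256 while preserving the entries already stored.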

Now let's go back to reuseport_select_sock() to see how a LISTEN socket is selected. The socks[] array is indexed via a call to reciprocal_scale() as follows:

unsigned int index = reciprocal_scale(phash, num_socks);

return reuse->socks[index];

reciprocal_scale() is an optimized function that implements a pseudo-modulo operation using multiply and shift operations.

As shown earlier, phash was calculated in __inet_lookup_listener():

phash = inet_ehashfn(dst_addr, dst_port, src_addr, src_port);

And num_socks is the number of sockets in the socks[] array. The function reciprocal_scale(phash, num_socks) calculates an index, 0 <= index < num_socks. This index is used to retrieve a socket from the SO_REUSEPORT socket group.
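The multiply-and-shift trick can be reproduced in user space. The function below is a sketch that mirrors the idea with standard fixed-width types in place of kernel types: the 32-bit hash is treated as a fraction of 2^32 and scaled by the group size, so the result always falls below num_socks without a division.

```c
#include <stdint.h>

/* Scale a 32-bit value into the range [0, ep_ro) using one 64-bit
 * multiply and a shift: (val / 2^32) * ep_ro, rounded down. */
static uint32_t reciprocal_scale(uint32_t val, uint32_t ep_ro)
{
    return (uint32_t)(((uint64_t)val * ep_ro) >> 32);
}
```

For example, with four sockets in the group, a hash of 0 maps to index 0, a hash of 0x80000000 maps to index 2, and the largest hash, 0xFFFFFFFF, maps to index 3. Unlike a true modulo, the index follows the high-order bits of the hash rather than the low-order ones.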

Hence, you can see that the kernel selects a socket by hashing the client IP:port and server IP:port values. This method provides a good distribution of connections among different LISTEN sockets.

Let's look at the effect of SO_REUSEPORT on the command line through two tests.

1) An application opens a socket for listen and creates two processes.

Application code path: socket(); bind(); listen(); fork();

$ ss -tlnpe | grep :45000

LISTEN 0 128 *:45000 *:* users:(("my_server",pid=3020,fd=3),("my_server",pid=3019,fd=3)) ino:3854904087 sk:37d5a0

The string ino:3854904087 sk:37d5a0 describes a single kernel socket.

2) An application creates two processes, and each creates a LISTEN socket after setting SO_REUSEPORT.

Application code path: fork(); socket(); setsockopt(SO_REUSEPORT); bind(); listen();

$ ss -tlnpe | grep :45000

LISTEN 0 128 *:45000 *:* users:(("my_server",pid=1975,fd=3)) ino:3854935788 sk:37d59c

LISTEN 0 128 *:45000 *:* users:(("my_server",pid=1974,fd=3)) ino:3854935786 sk:37d59d

Now you see two different kernel sockets. Notice the different inode numbers.

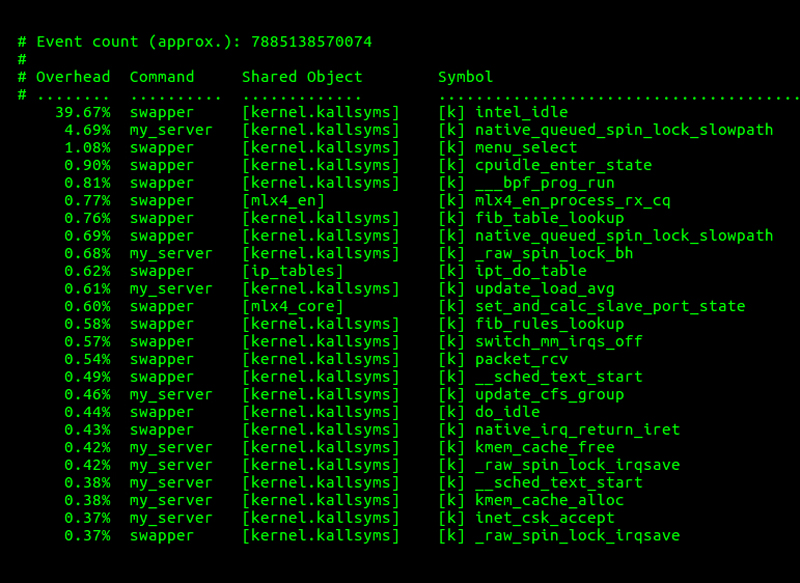

Applications using multiple processes to accept connections on a single LISTEN socket may experience significant performance issues, since each process contends for the same socket lock in accept(), as shown in the following simplified pseudo-code:

struct sock *inet_csk_accept(struct sock *sk)
{
    struct sock *newsk = NULL; /* client socket */

    /* We need to make sure that this socket is listening, and
     * that it has something pending.
     */
    lock_sock(sk);
    if (sk->sk_state == TCP_LISTEN)
        if ("there are completed connections waiting to be accepted")
            newsk = get_first_connection(sk);
    release_sock(sk);

    return newsk;
}

lock_sock() and release_sock() internally acquire and release a spinlock embedded in sk. (See Figure 3 later in this article to observe the overhead due to the spinlock contention.)

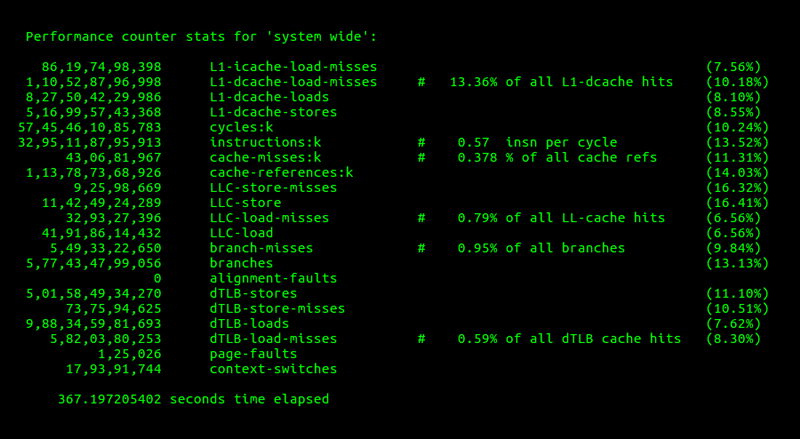

The following setup is used to measure SO_REUSEPORT performance: the server application either creates a LISTEN socket and forks 48 times, or forks 48 times, and each child process creates a LISTEN socket after enabling SO_REUSEPORT.

With the fork of the LISTEN socket:

server-system-$ ./my_server 45000 48 0 (0 indicates fork)

client-system-$ time ./my_client <server-ip> 45000 48 1000000

real 4m45.471s

With SO_REUSEPORT:

server-system-$ ./my_server 45000 48 1 (1 indicates SO_REUSEPORT)

client-system-$ time ./my_client <server-ip> 45000 48 1000000

real 1m36.766s

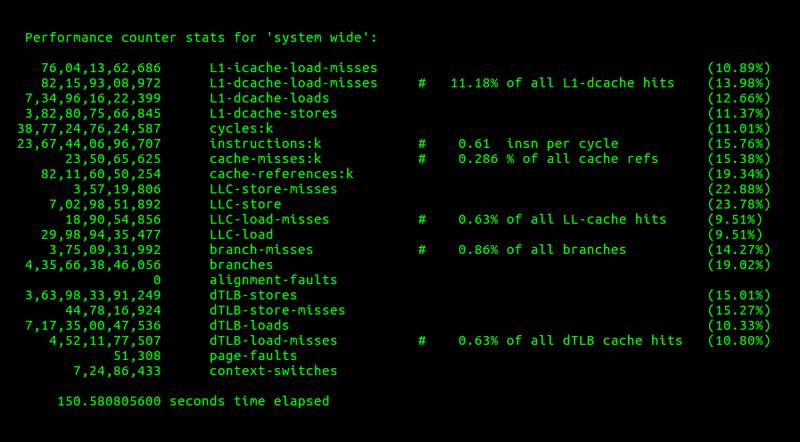

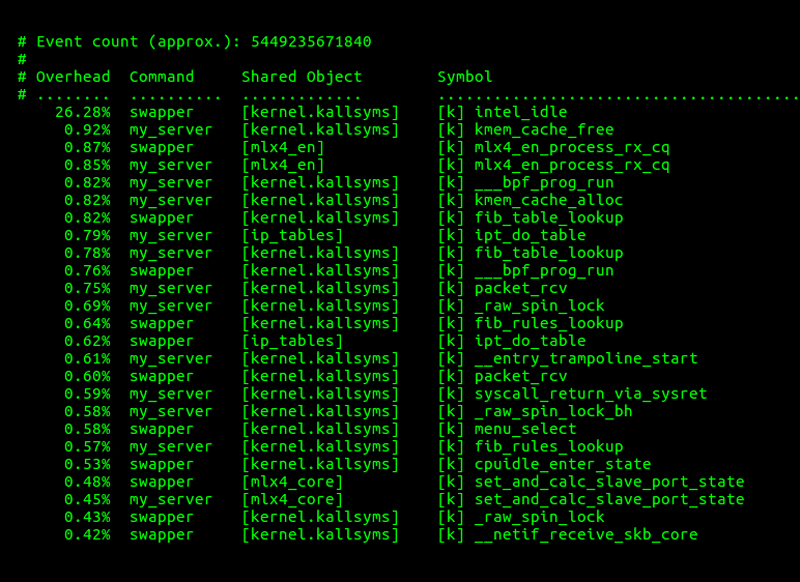

Figures 2–5 provide a look at the performance profile for the above two tests using the perf tool.

Figure 2. Performance Counter Statistics without SO_REUSEPORT

Figure 3. Performance Profile of the Top 25 Functions without SO_REUSEPORT

Figure 4. Performance Counter Statistics with SO_REUSEPORT

Figure 5. Performance Profile of the Top 25 Functions with SO_REUSEPORT

The listing below implements the server and client applications that were used for SO_REUSEPORT performance testing:

$ cat my_server.c

#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>
#include <sys/types.h>
#include <sys/socket.h>
#include <sys/wait.h>
#include <netinet/in.h>
#include <netdb.h>

/* Fork nprocs children from the parent; children never fork again. */
void create_children(int nprocs, int parent_pid)
{
    while (nprocs-- > 0) {
        if (getpid() == parent_pid && fork() < 0)
            exit(1);
    }
}

int main(int argc, char *argv[])
{
    int reuse_port, fd, cfd, nprocs, opt = 1, parent_pid = getpid();
    struct sockaddr_in server;

    if (argc != 4) {
        fprintf(stderr, "Port# #Procs {0->fork, or 1->SO_REUSEPORT}\n");
        return 1;
    }

    nprocs = atoi(argv[2]);
    reuse_port = atoi(argv[3]);

    if (reuse_port) /* proper SO_REUSEPORT */
        create_children(nprocs, parent_pid);

    if ((fd = socket(AF_INET, SOCK_STREAM, 0)) < 0) {
        perror("socket");
        return 1;
    }

    if (reuse_port &&
        setsockopt(fd, SOL_SOCKET, SO_REUSEPORT, &opt, sizeof opt) < 0) {
        perror("setsockopt");
        return 1;
    }

    /* Clear the address first so no field is left uninitialized */
    memset(&server, 0, sizeof server);
    server.sin_family = AF_INET;
    server.sin_addr.s_addr = htonl(INADDR_ANY);
    server.sin_port = htons(atoi(argv[1]));

    if (bind(fd, (struct sockaddr *)&server, sizeof server) < 0) {
        perror("bind");
        return 1;
    }

    if (!reuse_port) /* simple fork instead of SO_REUSEPORT */
        create_children(nprocs, parent_pid);

    if (parent_pid == getpid()) {
        while (wait(NULL) != -1)
            ; /* wait for all children */
    } else {
        if (listen(fd, SOMAXCONN) < 0) {
            perror("listen");
            return 1;
        }

        while (1) {
            if ((cfd = accept(fd, NULL, NULL)) < 0) {
                perror("accept");
                return 1;
            }
            close(cfd);
        }
    }

    return 0;
}

$ cat my_client.c

#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <strings.h>
#include <sys/types.h>
#include <sys/socket.h>
#include <sys/wait.h>
#include <netinet/in.h>
#include <netdb.h>

/* Fork nprocs children from the parent; children never fork again. */
void create_children(int nprocs, int parent_pid)
{
    while (nprocs-- > 0) {
        if (getpid() == parent_pid && fork() < 0)
            exit(1);
    }
}

int main(int argc, char *argv[])
{
    int fd, count, nprocs, parent_pid = getpid();
    struct sockaddr_in server;
    struct hostent *server_ent;
    const struct linger nolinger = { .l_onoff = 1, .l_linger = 0 };

    if (argc != 5) {
        fprintf(stderr, "Server-IP Port# #Processes #Conns_per_Proc\n");
        return 1;
    }

    nprocs = atoi(argv[3]);
    count = atoi(argv[4]);

    if ((server_ent = gethostbyname(argv[1])) == NULL) {
        /* gethostbyname() reports errors via h_errno, not errno */
        herror("gethostbyname");
        return 1;
    }

    bzero((char *)&server, sizeof server);
    server.sin_family = AF_INET;
    server.sin_port = htons(atoi(argv[2]));
    bcopy((char *)server_ent->h_addr, (char *)&server.sin_addr.s_addr,
          server_ent->h_length);

    create_children(nprocs, parent_pid);

    if (getpid() == parent_pid) {
        while (wait(NULL) != -1)
            ; /* wait for all children */
    } else {
        while (count-- > 0) {
            if ((fd = socket(AF_INET, SOCK_STREAM, 0)) < 0) {
                perror("socket");
                return 1;
            }

            if (connect(fd, (struct sockaddr *)&server,
                        sizeof server) < 0) {
                perror("connect");
                return 1;
            }

            /* Reset connection to avoid TIME-WAIT state */
            setsockopt(fd, SOL_SOCKET, SO_LINGER, &nolinger,
                       sizeof nolinger);
            close(fd);
        }
    }

    return 0;
}

Krishna Kumar works at Flipkart Internet Pvt Ltd, India's largest e-commerce company. He is especially interested in today's topic, as Flipkart uses this technology to host millions of connections from visitors all over the world. His other interests are playing chess, struggling to learn to use apps, and occasionally bringing stray puppies home much to his wife's consternation. Please send your comments and feedback to krishna.ku@flipkart.com.