Mycroft is a digital assistant along the lines of Google Home, Amazon Alexa and the many others currently coming onto the market. It differs from most of them in two major ways:

Many assistants will listen all of the time, sending everything they hear into the "cloud". As you all know, many of these companies are vacuums for data, and their business models are built on using that data. Mycroft goes against that model by minimizing the amount of data collected and anonymizing the data when it has to interact with other systems.

Google processing is given in Table 1.

| Local Execution | Remote Execution |
| --- | --- |
| Recognise wakeup word | |
| Capture query | |
| | Speech to text (recorded in your Google account) |
| | Match to intent |
| | Call matching skill |
| | Remote service if required by skill |
| | Generate response |
| | Text to speech |

Mycroft changes this around to what's shown in Table 2.

| Local Execution | Remote Execution |
| --- | --- |
| Recognise wakeup word | |
| Capture query | |
| | Speech to text (anonymous) |
| Match to intent | |
| Call matching skill | |
| | Remote service if required by skill |
| Generate response | |
| | Text to speech |

All processing is local except for the speech to text and text to speech modules, and possibly an external web service. The "speech to text" service is currently Google's Speech to Text service, but there is an option of using the Mozilla DeepSpeech engine. The requests are anonymized to be from "MycroftAI" rather than from a particular user. The "Remote service if required by skill" will depend on what needs to be done to satisfy the query—for example, getting the local time won't need one, while getting the weather will need access to a weather service, such as OpenWeatherMap. The "Text to speech" module, by default, uses a mechanical-sounding voice produced by software called Mimic 2, based on the Tacotron architecture, but the voice is configurable. However, there is a noticeable lag of several seconds while a voice response is prepared, and choosing a more complex voice increases this delay.

You can buy digital assistants as "black boxes". Google has created a do-it-yourself "white box" (Figure 1). It consists of the following:

Figure 1. Google AIY Voice Kit

The kit is driven by a Raspberry Pi 3, which you supply yourself.

You can download an image for the Raspberry Pi that can drive the voice hat, and there's a lengthy set of instructions to turn it into a Google Assistant. This is non-trivial, requiring a Google account and using OAuth for identification purposes. But I'm not going that route here, as this is a nice little box for installing Mycroft.

Mycroft requires a good quality microphone and reasonable speaker, which the Raspberry Pi doesn't have. The AIY kit has these as well as the little brown box in which to put everything. But, the AIY image doesn't include Mycroft, and the Raspberry Pi image for Mycroft—picroft—doesn't have drivers for the voice hat.

There are two ways of getting Mycroft onto the Raspberry Pi you use with the AIY kit: add Mycroft to the AIY image or install AIY support onto the picroft image.

The first is straightforward but time-consuming: starting with the AIY image from here, download the Mycroft files from GitHub and build them on the Raspberry Pi. It's not hard.

The second is slightly less time-consuming: the Raspberry Pi image from September 2018 allows you to select the AIY devices during setup, and then downloads and builds all the relevant AIY files. At the time of this writing, Google has broken this by not having a particular Python "wheel" file available for the Raspberry Pi 3B or 3B+, but there is a workaround from andlo here. Hopefully this will be fixed soon—the AIY forums are very voluble on the subject!

To build this kit, follow the AIY instructions to connect everything together. But, don't fold them all into the cardboard enclosure yet, as that hides the USB ports and the HDMI port. Connect an external monitor, keyboard and mouse, so you can control the system while you build it. From then on, follow these steps:

Once booted, you can run Mycroft from the directory mycroft-core using the start-mycroft.sh command. This can take parameters, and the most verbose way of seeing what's going on is to run it in debug mode:

    start-mycroft.sh debug

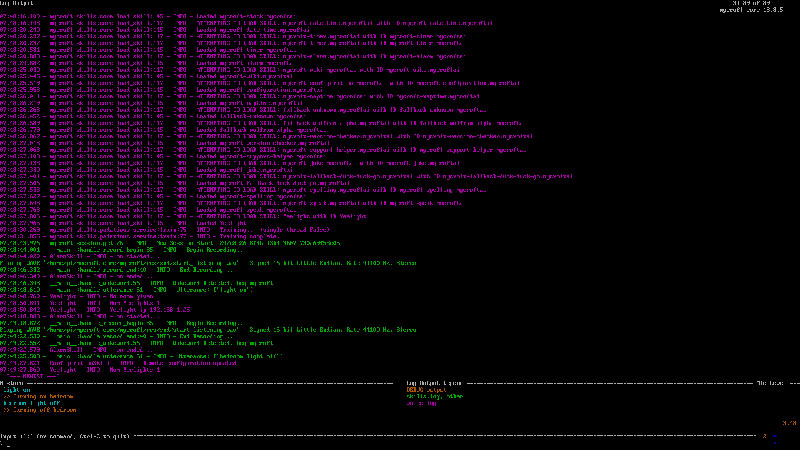

This brings up the curses screen shown in Figure 2.

Figure 2. Screenshot of Mycroft in debug mode—this shows the skills loaded at the top and the interactions with the user at the bottom.

Mycroft has an ever-growing list of skills that come by default. These include Pandora, Spotify, OpenHAB and Wikipedia. But there's always room for more! I've got some smart bulbs in my house, and I'd like to control them using Mycroft.

Adding a skill means you need to know how to talk to the service you're requesting. Often this information isn't publicly available and has to be reverse-engineered. Such is the case with the Lanbon light switches, for example, but that's another story! One device that is okay is the Yeelight smart light. It has the advantage of having a documented "local" API to control it, so there's no need to tell the Yeelight server what time you go to bed, for example. The Hue and LIFX lights offer similar privacy-respecting APIs.

The Mycroft skills are in the /opt/mycroft/skills directory. There is one directory per skill, and the following sub-directories and files:

The __init__.py file is where all the work of the skill is done. It needs to import the required classes and define a class that inherits from MycroftSkill. This class should have one method for each intent, triggered by the keywords for that intent. The link between an intent and its method was formerly registered by code in the constructor, but the preference now is for a decorator on each method. The method then does what is needed, and finally calls for a response to be sent.
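As a rough sketch of that structure, and not the code of any published skill, a minimal __init__.py might look like the following. The class name HelloLightSkill, the intent name, the OnKeywords vocabulary and the on.activity dialog are placeholders. The except ImportError branch is purely an assumption for illustration: it supplies tiny stand-ins so the file can be exercised outside a Mycroft installation, and it is not part of Mycroft.

```python
"""Skeleton of a Mycroft skill's __init__.py (illustrative only)."""
try:
    # Real imports when running inside mycroft-core.
    from adapt.intent import IntentBuilder
    from mycroft.skills.core import MycroftSkill, intent_handler
except ImportError:
    # Assumption: minimal stand-ins so the sketch runs without Mycroft.
    class IntentBuilder:
        def __init__(self, name):
            self.name, self.required = name, []

        def require(self, keyword):
            self.required.append(keyword)
            return self

        def build(self):
            return self

    def intent_handler(intent):
        def decorator(func):
            func.intent = intent  # remember which intent triggers func
            return func
        return decorator

    class MycroftSkill:
        def __init__(self, name=None):
            self.name = name
            self.spoken = []

        def speak_dialog(self, key, data=None):
            self.spoken.append((key, data or {}))


class HelloLightSkill(MycroftSkill):
    """One method per intent, each registered with a decorator."""

    def __init__(self):
        super().__init__(name="HelloLightSkill")

    @intent_handler(IntentBuilder("OnIntent").require("OnKeywords").build())
    def handle_on(self, message):
        # Do the work here, then ask Mycroft to speak a response.
        self.speak_dialog("on.activity", {"location": "unknown"})


def create_skill():
    # Mycroft calls this factory function when it loads the skill.
    return HelloLightSkill()
```

The decorator ties the intent to the method, so nothing needs to be registered by hand in the constructor.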

I use the Yeelight bulbs here because they are relatively simple and documented, which makes it easy to show how to build a small skill. Note that there are additional expectations of a real skill that would be distributed by Mycroft! Mycroft has a more detailed set of instructions at Developing a new Skill.

Yeelight makes a number of smart bulbs that you can control through an Android or iPhone app. Like many home IoT devices, it communicates with a (Yeelight) server, and to set it up, you have to go through the usual complex registration processes for smart-home devices. But, it also has a REST-like API through which you can control it with appropriate network calls. Typically, these would be restricted to the home LAN unless ports are explicitly opened on the home router (bad idea!). But if you just want to control the lights from inside the house, this is a good way. The "Yeelight WiFi Light Inter-Operation Specification" is described here.

The default is for LAN control to be disabled for each bulb. You have to enable the "Control LAN" mode, which is found by selecting a Yeelight device, scrolling down to the bottom of the screen and choosing the rightmost icon and then the Control LAN icon. The rather crude screenshot from Yeelight in Figure 3 shows this.

Figure 3. Enabling LAN API of the Yeelight

There are a number of different Yeelight bulbs. I look only at the common feature of on/off for each bulb in this article. The Mycroft files for such a skill are as follows:

After LAN control is enabled, the Yeelight bulb listens for multicast messages sent to 239.255.255.250 on port 1982. On receiving the correct message, each bulb responds with a packet containing information such as "Location: yeelight://192.168.1.25:55443". Several libraries have been developed to do this and to send other Yeelight commands. I use the library by Stavros Korokithakis, available here. This can be installed with pip, but Mycroft will do it for you: the file requirements.txt lists the packages needed to run a skill, and Mycroft installs them when it loads the skill. Here it contains just the name of the Yeelight package:

    yeelight
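To make the discovery exchange concrete, here is a minimal sketch that does by hand what such a library does for you. The search message follows the SSDP-like format I understand the Yeelight specification to use (the ST: wifi_bulb header in particular), so treat its exact wording as an assumption to check against the specification. The function names parse_location and discover are my own.

```python
import socket

MCAST_ADDR = ("239.255.255.250", 1982)

# Search request in the SSDP-like format of the Yeelight specification
# (assumed wording; verify against the specification before relying on it).
SEARCH_MESSAGE = (
    "M-SEARCH * HTTP/1.1\r\n"
    "HOST: 239.255.255.250:1982\r\n"
    'MAN: "ssdp:discover"\r\n'
    "ST: wifi_bulb\r\n"
).encode("ascii")


def parse_location(response):
    """Return (ip, port) from a bulb's reply, or None if no Location header."""
    for line in response.splitlines():
        key, _, value = line.partition(":")
        if key.strip().lower() == "location":
            # value looks like " yeelight://192.168.1.25:55443"
            address = value.strip().split("://", 1)[-1]
            ip, _, port = address.rpartition(":")
            return ip, int(port)
    return None


def discover(timeout=2.0):
    """Multicast the search and collect replies until none arrive in time."""
    found = set()
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(SEARCH_MESSAGE, MCAST_ADDR)
        try:
            while True:
                data, _ = sock.recvfrom(4096)
                location = parse_location(data.decode("utf-8", "replace"))
                if location:
                    found.add(location)
        except socket.timeout:
            pass  # no more bulbs answered within the timeout
    return sorted(found)
```

Calling discover() on a LAN with bulbs in LAN-control mode should return their addresses; on a network without any, it simply returns an empty list after the timeout.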

The package contains a function discover_bulbs(), which sends out the multicast message and collects the responses as a list, each element containing the IP address and port, and the light's capabilities. A new Bulb() can then be created using the IP address. The simplest logic finds a list of bulbs and returns the first in the list, along with its name:

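    import yeelight

    def select_light(location=None):
        # 'location' is not used yet: the first bulb that answers is chosen.
        yeelights = yeelight.discover_bulbs()
        if len(yeelights) >= 1:
            light_info = yeelights[0]
            ip = light_info['ip']
            name = light_info['capabilities']['name']
            # Create the Bulb object used to send commands to this light.
            light = yeelight.Bulb(ip)
            return light, name
        return None, None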

Once a light is found, commands can be sent to it. A command takes the form of a JSON string representing the command and its parameters, sent as a TCP packet to the bulb. The Yeelight package wraps these in methods including turn_on() and turn_off().
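For readers curious about what those methods put on the wire, the following is a minimal sketch of building and sending one such command without the package. The set_power method name and its parameters are taken from my reading of the Yeelight specification; the helper names build_command and send_command are hypothetical.

```python
import json
import socket


def build_command(command_id, method, params):
    """Encode one command as a JSON line terminated by CRLF."""
    payload = {"id": command_id, "method": method, "params": params}
    return (json.dumps(payload) + "\r\n").encode("utf-8")


def send_command(ip, port, method, params, command_id=1, timeout=5.0):
    """Open a TCP connection to the bulb, send one command, return the reply."""
    with socket.create_connection((ip, port), timeout=timeout) as conn:
        conn.sendall(build_command(command_id, method, params))
        # The bulb may send several lines; only the first is decoded here.
        first_line = conn.recv(4096).decode("utf-8").splitlines()[0]
        return json.loads(first_line)
```

With the address from the discovery reply, switching a light on would then be something like send_command("192.168.1.25", 55443, "set_power", ["on", "smooth", 500]).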

Finally, you can give the "on" command, which combines the decorator to register the intent, the command itself and the response. The response contains the light's name, if it is available, to show that parameters can be passed to the speech dialog:

    @intent_handler(IntentBuilder("OnIntent").require("OnKeywords").build())
    def on_activity_intent(self, message):
        """Turn on Yeelight"""
        # No location is extracted from the request yet, so pass None.
        light, name = select_light(None)
        if light is not None:
            light.turn_on()
            if not name:
                report = {"location": "unknown"}
            else:
                report = {"location": name}
            self.speak_dialog("on.activity", report)

The (US English) intent matcher for switching the light on is in the file Yeelight/vocab/en-us/OnKeywords.voc:

light on

And the (US English) response is in the file Yeelight/dialog/en-us/on.activity.dialog:

turning on {{location}}

If the light's name is, say, "bedroom", Mycroft will say "turning on bedroom". There is considerably more complexity that can be built into the intent matcher and responses; these are just indicative.
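One piece of that extra complexity is that a dialog file may list several alternative phrasings, one per line, from which one is picked at random before the placeholders are filled in. The sketch below only illustrates that idea: it is not Mycroft's own rendering code, and the function name render_dialog is hypothetical.

```python
import random
import re


def render_dialog(dialog_text, data, rng=random):
    """Pick one non-empty line of a .dialog file and fill in {{name}} slots."""
    lines = [line.strip() for line in dialog_text.splitlines() if line.strip()]
    template = rng.choice(lines)
    # Unknown placeholders are left untouched rather than raising an error.
    return re.sub(r"\{\{\s*(\w+)\s*\}\}",
                  lambda m: str(data.get(m.group(1), m.group(0))),
                  template)
```

For example, render_dialog("turning on {{location}}", {"location": "bedroom"}) produces the phrase spoken above, and adding a second line such as "switching on {{location}}" to the file would let the two responses alternate.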

Just considering lighting, many options exist now. Let's look at a few of them.

LIFX

An API for both LAN and WAN management of devices is documented. The LAN interface uses UDP messages only, and the different devices are distinguished by their MAC address rather than IP address. The WAN API sends HTTP REST commands via the LIFX server. Several Python packages implement this, such as lifxlan for LAN control and pifx for WAN control. There is a Mycroft skill using the WAN API.

Hue

Hue lights do not live on the IP network, but on a Zigbee network. The interaction with the LAN is via a Hue bridge: you talk IP to the bridge, it talks Zigbee to the devices. A REST API allows you to send requests to a light by a request to the bridge including the light's ID. Many Python packages implement this, and there already is a Mycroft skill for these lights.

IKEA Trådfri

The IKEA lights also use Zigbee via a gateway. There is an API using CoAP over UDP, and it has been implemented in a Python package called pytradfri. Although there is no Mycroft skill for these lights, there is an OpenHAB binding and a Mycroft skill for OpenHAB.

Eufy Lumos

This is an IP bulb, but the API does not appear to have been published. There have been several attempts to reverse-engineer the API, and there is currently a minimal Python package lakeside.
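To show how different these interfaces are from the Yeelight's JSON-over-TCP commands, here is a minimal sketch of the Hue case, using the bridge's REST API to switch one light. The bridge address, the username (the key the bridge issues when an application registers with it) and the light ID are placeholders, and the helper names are hypothetical.

```python
import json
import urllib.request


def hue_state_request(bridge_ip, username, light_id, on):
    """Build (but do not send) the PUT request that switches a light."""
    url = f"http://{bridge_ip}/api/{username}/lights/{light_id}/state"
    body = json.dumps({"on": bool(on)}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="PUT",
        headers={"Content-Type": "application/json"})


def set_hue_light(bridge_ip, username, light_id, on, timeout=5.0):
    """Send the request to the bridge and return its decoded JSON reply."""
    request = hue_state_request(bridge_ip, username, light_id, on)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Here the IP traffic goes only to the bridge, which then relays the change to the light over Zigbee.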

Note: although the functions performed by each smart light are similar, there is little consistency in their networking APIs, and there are a variety of Python and other language implementations. Unfortunately, many vendors have not published their APIs. This is reminiscent of the early days of device drivers for Linux, where some vendors were good, and others stupidly thought themselves clever for hiding specifications. A vendor of one product has told me they will give me the API if I purchase 2,000 units at about $100 each—not on my salary!

There are multiple security issues to be considered in any IoT system, and several of them surface here.

First, there are security issues arising from switching the bulb to local access mode: it means that anyone on your local network (such as the disgruntled teenager you've just sent off early to bed) can program your lights to flash on and off just as you get to sleep. In the case of the Yeelight, that's a choice you get to make, as you have to change its mode explicitly. I'm assuming, of course, that your wireless network has security such as WPA2 turned on, and that any default router passwords have been changed; otherwise, you are vulnerable to external attack!

You can reduce the security risks by running these lights on their own subnet, even on a different SSID with an appropriate firewall. This increases network configuration complexity and requires a router that will pass multicast searches from the Mycroft subnet to the lights' subnet. The IETF expects home networks to have multiple IP subnets in the future (see RFC 7368: IPv6 Home Networking Architecture Principles), so such issues will need to be addressed in user-friendly ways eventually.

Of course, you could say that this is all irrelevant, since anyone can just ask Mycroft to turn the lights on or off. Physical presence now clashes with network security! The Mycroft wake word recognizer cannot yet distinguish between different voices, but that's in the works.

Mycroft will continue to support the open-source version, and it's building up the skill sets. The company also makes its own hardware version, and version 2 will be released soon. This will have its own FPGA, which will allow more AI-style processing to be done onboard. So Linux hackers can play around to their hearts' content, and privacy-concerned individuals and companies can purchase an off-the-shelf version.

The main downside is the lack of specifications and stability for services and IoT devices. This is shown by Logitech in December 2018 turning off an undocumented LAN mode for the Harmony Hub due to security issues with its XMPP code. It caused an outcry from those relying on it for their home automation systems, and Logitech is now reconsidering its position—at least the company is listening; others are not!

Disclaimer: the author recently purchased shares in Mycroft.