The udev project appears to be in crisis. Kay Sievers has come under fire for failing to fix problems that have cropped up in the system, and it looks as though top kernel folks like Al Viro, not to mention Linus Torvalds, have been calling for someone else to take over the project.

The main issue is that user systems have been hanging. According to Kay, this is partly due to a mysterious slowdown in udev that he hasn't been able to fix yet. The slowdown causes certain driver requests to be delayed until they time out, which apparently makes the system look as though it has crashed.

But Kay feels that the real problem lies in the kernel's behavior, not in udev, and that the main kernel code should deal with it. Al and Linus (and the rest of the people complaining) argue that udev previously had been working, and that it was a patch to udev that resulted in the system crashes; therefore, udev either needs to fix the issue or revert the patch.

This hearkens back to the days when kernel folks blamed GCC for producing bad machine code, while the GCC folks blamed the kernel for using bad C code. One key difference is that, unlike GCC, udev is closely tied to the kernel development community itself rather than being a fully independent project.

It seems clear that if Kay can't fix the problem, or at least adopt better development practices, someone else will be asked to maintain udev. Greg Kroah-Hartman, one of the original udev authors, would be an obvious candidate, at least for the short term. But, he's pretty busy these days doing tons of other kernel work.

Recently, Linus Torvalds decided to simplify the cryptographic signature code for kernel modules. His initial motivation was to speed up builds by moving the time-consuming module-signing step from compile time to install time, so that it wouldn't slow down every compile.

This turned out to be slightly controversial. David Howells suggested that Linus should go even further and take out all the module-signing code and just let users do it manually. But, this ended up causing some unexpected blowback from Linus.

The issue Linus is concerned with is the ordinary user who wants to protect the system from root kits and other attacks. Requiring modules to be signed by a secure key is a good way to address that. But, he felt that David was concerned with allowing distribution vendors to keep a cryptographic stranglehold over what kind of software ordinary users could run on their systems.

There was a brief attempt recently to change the way “signed-off-by” reviews are submitted. Typically, whenever a patch gets sent into the kernel, it passes through a gauntlet of reviewers who confirm that the patch looks good, contains no proprietary code and so on. But, Al Viro pointed out that in a lot of cases, reviews show up on the mailing list after the patch already has been accepted into the kernel. In that case, the sign-off doesn't get included. Al felt this was lost data, and he suggested changing the process so that sign-offs could be added after the fact.

There actually was quite a bit of support for this idea, and it turned out that the latest versions of git already support it, via the git notes add command. But, although Linus Torvalds is fine with people using that sort of thing for local development, he said he wouldn't include after-the-fact sign-offs in the main tree. He just felt it wasn't that important. As long as someone signs off on the code, especially the author of the given patch, he's fine with not having the maximum number of sign-offs that he could get.

Considering that the signed-off-by process was created in direct response to the SCO lawsuits (en.wikipedia.org/wiki/SCO%E2%80%93Linux_controversies), he must be pretty confident that it's not an important issue. I believe at the time Linus was particularly inconvenienced, having to account for the origins and licenses of many kernel patches.

Anyone with an iPhone probably is familiar with the AirVideo application. Basically, it combines a server app that runs on your Windows or OS X machine with an AirVideo client on your phone; the server streams video over the network to the phone. It's extremely popular, and for good reason: it works amazingly well.

For a long time, there wasn't a good solution for the Android world, largely due to the way Android streamed video. Now, however, there is an incredible application for doing the exact same thing iOS users do with AirVideo. You've probably heard of Plex, but you may not know about the server/client combination it can do with Android.

Once you install the server application, which runs perfectly fine on a Linux server, you install the Plex application from the Google Play store, and your video collection follows you anywhere you have connectivity. What you can watch depends, of course, on what you have on your server, but the format in which your content is stored doesn't matter very much. Plex's server application does a great job of streaming most video formats, converting them on the fly to suit your available bandwidth.

Figure 2. The video quality adjusts for your current bandwidth and renders crisp video even on a large tablet display.

Plex may have started out as a Macintosh-compatible competitor to XBMC, but it's evolved into an incredible video-streaming system. With Plex, you can become your own Netflix! Due to its Linux compatibility and incredible video streaming ability, Plex is this month's Editors' Choice!

Before you say anything, yes, I know Wireshark is available for Linux. This time, however, Windows and OS X users get to play too. Wireshark is an open-source network analysis tool, and it's amazing for troubleshooting a network.

Running Wireshark on OS X does require an X11 server (see my Non-Linux FOSS article on XQuartz in the December 2012 issue of LJ). It also looks a bit dated once it's up and running, but rest assured, the latest version is functioning behind the scenes. If you're thinking this program looks a lot like Ethereal, you're absolutely correct. It's the same program; the name changed six or so years ago.

Wireshark is a low-level packet-inspection tool, but if you're trying to solve a network issue, it's the de facto standard tool. It's not a new tool by any means, but if you're on a foreign operating system (that is, not Linux), it's nice to know some old standbys are available. Check it out today at www.wireshark.org.

System administration is one of the most popular topics at LinuxJournal.com, and many of our readers have loads of experience in the field. We recently polled our on-line readers about their system administration habits, and we received some interesting answers, as usual.

We were surprised to learn that an almost equal number of you use a GUI or Web-based tool versus the command line, with 51% using the latter. And, on the command line, your preferred protocol is SSH by a wide margin with 87%. Telnet and remote serial console each received 6%, with 1% of you using something else entirely. 45% of you manage one server, while 15% manage more than 20, and more than a few of you are employed by hosting companies or companies with similar needs, so those numbers get pretty high.

We were not all that surprised to learn that vim was your favorite command-line text editor by far, with 74% of the votes, compared to nano/pico with 14% and emacs with 8%. The remaining 4% of you use something else, and among the other options was naturally “all of the above”.

61% of you are mostly running Ubuntu or Debian-based servers, and Red Hat is your second favorite (24%), while 7% are running Windows. The other 8% of you are running a variety of other operating systems including other flavors of Linux, Solaris, AIX or FreeBSD.

Security updates are a regular and necessary process, and while 43% of you apply them annually, 12% apply security updates daily. We're relieved to know so many of you are on top of things. Non-security updates are also frequent, with the majority of readers updating at least quarterly.
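
The “majority” figure for non-security updates comes from adding up the four most frequent answers to question 8 in the full results:

$$
\underbrace{7\%}_{\text{daily}} + \underbrace{18\%}_{\text{weekly}} + \underbrace{17\%}_{\text{monthly}} + \underbrace{12\%}_{\text{quarterly}} = 54\% > 50\%
$$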

The full survey results are below for your perusal, and thanks again for always being willing to share with the class!

1) Do you do the majority of your system administration work from:

the command line: 51%

a GUI/Web-based tool: 49%

2) When accessing your servers via command line, do you use:

SSH: 87%

Telnet: 6%

remote serial console: 6%

Other: 1%

3) How many servers do you manage?

1: 45%

2–5: 20%

6–10: 10%

11–20: 10%

more than 20: 15%

4) Which command-line text editor is best?

vim: 74%

nano/pico: 14%

emacs: 8%

Other: 4%

5) Do you use a configuration management tool like puppet?

yes: 16%

no: 84%

6) Are most of your servers:

Ubuntu-/Debian-based: 61%

Red Hat-based: 24%

Windows: 7%

Other: 8%

7) How often do you apply security updates to your systems?

daily: 12%

weekly: 21%

monthly: 15%

quarterly: 9%

annually: 43%

8) How often do you apply non-security updates to your system?

daily: 7%

weekly: 18%

monthly: 17%

quarterly: 12%

annually: 46%

9) Have you ever delayed a kernel update in order to preserve your coveted uptime?

yes: 30%

no: 70%

10) Do you work on your server farm from home?

yes: 44%

no: 56%

11) If so, do you use a VPN?

yes: 65%

no: 35%

12) Does your server infrastructure include a DMZ?

yes: 52%

no: 48%

13) What percentage of your servers are virtualized?

0–25%: 43%

26–50%: 20%

51–75%: 17%

76–100%: 20%

14) If you use virtualization, what is your host environment?

VMware: 42%

Xen: 13%

KVM: 18%

Hyper-V: 3%

N/A: 12%

Other: 12%

15) Do you host e-mail:

locally: 55%

with a cloud host: 19%

we don't provide e-mail: 26%

16) Do you allow users VPN access into your network?

yes: 54%

no: 46%

17) Do you have Wi-Fi coverage at your workplace?

yes: 84%

no: 16%

18) If yes, do you allow guest access to Wi-Fi?

yes: 40%

no: 49%

N/A: 11%

19) Is your network and server layout well-documented?

yes: 57%

no: 43%

20) Are you the lone system administrator at your workplace?

yes: 46%

no: 54%

21) Do you have to support platforms other than Linux?

yes: 71%

no: 29%

22) Have you ever had a system compromised?

yes: 37%

no: 63%

23) Do you use:

a router/firewall appliance (Cisco, etc.): 62%

a software-based router/firewall solution: 38%

24) Does your husband/wife/significant other know your password(s)?

yes: 7%

no: 93%

25) Do you use a password program like LastPass or KeePassX?

yes: 37%

no: 63%

26) How often do you change your passwords?

daily: 1%

weekly: 3%

monthly: 19%

quarterly: 31%

rarely: 46%

27) Do you force your users to change their passwords?

yes: 50%

no: 50%

One of the R statistics program's great features is its modular nature. As people develop new functionality, R is designed so that it's relatively easy to package up the new functionality and share it with other R users. In fact, there is an entire repository of such packages, offering all sorts of goodies for your statistical needs. In this article, I look at how to find out what libraries already are installed, how to install new ones and how to keep them up to date. Then, I finish with a quick look at how to create your own.

The first step is to take a look and see what libraries already are installed on your system (Figure 1). You can do this by running library() from within R. This provides a list of all the libraries installed in the various locations visible to R. If you find the library you're interested in, your work is almost done.

In order to make R load the library of interest into your workspace, you need to call library with the name of the library in parentheses. Let's say you want to write parallel code with the multicore library. You would call library("multicore").
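
The two steps above can be sketched in a few lines. This is a minimal example, not the only way to do it. It prints R's library locations and installed packages, then loads a package only if it actually is installed. It uses the base parallel package instead of multicore purely so the snippet runs on a stock R installation. parallel has shipped with R since version 2.14.0 and includes multicore's mclapply().

```r
# Library directories R searches, in search order
print(.libPaths())

# installed.packages() returns a matrix with one row per installed package
pkgs <- installed.packages()[, c("Package", "LibPath", "Version")]
print(head(pkgs))

# Load a package only if it is installed, instead of letting library() fail
wanted <- "parallel"
if (requireNamespace(wanted, quietly = TRUE)) {
  library(wanted, character.only = TRUE)
  message(wanted, " loaded")
} else {
  message(wanted, " is not installed")
}
```

Using character.only = TRUE lets you keep the package name in a variable, which is handy when a script loads a list of packages in a loop.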

If you want to learn more about a library, R includes a help system that is modeled after the man page system used in Linux. There are two ways to access it. The first is to use the help() command, so in this case, you would run help("multicore") (Figure 2). The second, shorter way is to use the special character ?. For example, you could type ?multicore to get the same result. A related command that is good to know is ??, which searches the help pages of your installed packages for the given text. For example, ??plot pulls up entries related to the word plot (Figure 3).
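
Here is a small sketch of the same lookups done from a script, where you usually want the result rather than a pager. It looks up the mclapply topic from the base parallel package, chosen only because that package is always installed. help.search() is the function behind ??.

```r
# help() returns the matching help file(s); storing the result avoids opening a pager
h <- help("mclapply", package = "parallel")
print(length(h) > 0)  # TRUE when the topic was found

# ?mclapply is interactive shorthand for help("mclapply")
# ??plot is shorthand for help.search("plot"), which searches installed help pages
hits <- help.search("plot")
print(nrow(hits$matches))  # number of matching help entries
print(head(hits$matches))
```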

But, what if the library you are interested in isn't already on your system? Then you need to install it somehow. Luckily, R has a full package management system built in. Installing a package is as easy as running install.packages(), where you pass in a vector of package names. But, how do you know what packages are available for installation? The R project has a full repository of packages ready for you to use. You can find them at cran.r-project.org. On the left-hand menu, you will see an entry called “Packages”, which will bring you to a list of packages. You can search alphabetically by name or by category.

Say you're interested in doing linear programming. On CRAN, you will find a package called linprog, which you can install with the command install.packages("linprog"). When you first run this command, it should come back with an error (Figure 4). By default, R tries to install packages into the system library location. But, unless you are running as root (and you aren't doing that, right?), you won't have the proper permissions to do so. Therefore, R will ask if you want to install the new package into a personal library storage location in your home directory. After you agree to this, it will go ahead and try to download the source for this package. If this is the first time you have installed a package in the current session, R will ask you to select a CRAN mirror for downloading the package. That mirror then is used for the rest of the session's downloads. By default, R also will download and install any dependencies the requested package needs. So in this sense, it really is a proper package management system.
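
If the mirror prompt and the personal-library question get in the way (in a script, for instance), you can answer both up front. This sketch rests on a few assumptions. The mirror URL is the CRAN "cloud" mirror, but any other mirror works just as well. install_personal() is a hypothetical helper name, not part of R.

```r
# Choose a CRAN mirror up front so install.packages() does not prompt for one.
# cloud.r-project.org is the CRAN "cloud" mirror; any mirror URL would do.
options(repos = c(CRAN = "https://cloud.r-project.org"))

# R_LIBS_USER is the personal library R offers when the system library is read-only
user_lib <- path.expand(Sys.getenv("R_LIBS_USER"))

# Hypothetical helper (not part of R): install into the personal library, no prompts
install_personal <- function(pkgs, lib = user_lib) {
  dir.create(lib, recursive = TRUE, showWarnings = FALSE)
  .libPaths(c(lib, .libPaths()))  # make the personal library visible this session
  # Dependencies (Depends, Imports, LinkingTo) are installed by default
  install.packages(pkgs, lib = lib)
}

# Usage (needs network access):
# install_personal("linprog")
```

Putting the options(repos = ...) line in your ~/.Rprofile makes the mirror choice stick across sessions.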

For many packages, all that is involved is strictly R code. But in some cases, the author may have written part of the code in some other language, like C or FORTRAN, and wrapped it in R code. In those types of packages, the other code needs to be compiled into binary code before it can be used. How can you do that? Well, the R package system actually can handle compiling external code as part of the installation process. In some cases, this external code may need other third-party libraries in order to be compiled. To pass in the locations of those libraries, you need to add some options to the install.packages function call. Checking the help page (with ?install.packages) shows that you can include installation options as INSTALL_opts.
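
As a hedged illustration, the snippet below first confirms that install.packages() accepts these arguments, then assembles a call for a source install. The package name mypkg, the path /opt/mylib and the --with-mylib flag are all hypothetical. Which configure flags a package understands is up to that package's own configure script. Besides INSTALL_opts, install.packages() also has a configure.args argument aimed at exactly this case.

```r
# Confirm install.packages() accepts the options discussed above:
#   INSTALL_opts   - extra flags passed to R CMD INSTALL
#   configure.args - flags passed to the package's configure script
stopifnot(all(c("INSTALL_opts", "configure.args") %in%
              names(formals(install.packages))))

# Hypothetical package "mypkg" whose C code needs a library installed under /opt/mylib.
# "--with-mylib" is an invented flag; each package's configure script defines its own.
install_args <- list(
  pkgs = "mypkg",
  type = "source",                           # build from source so the C code is compiled
  configure.args = "--with-mylib=/opt/mylib",
  INSTALL_opts = "--no-multiarch"            # a real R CMD INSTALL option
)
str(install_args)

# To actually run it (needs network access and a real package):
# do.call(install.packages, install_args)
```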

Now that you have your collection of packages all installed and configured on your system, what do you do if a bug gets fixed in one of them? Or, what happens if a new version comes out with a better algorithm? Well, R's package management system can handle this rather well. You can check to see whether any packages need to be updated by running packageStatus() (Figure 5). If you see that updates are available, you can install the updates by using the command update.packages(). This command goes through each available update and asks you whether you want to install the new version.
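
A minimal sketch of that workflow is shown below, wrapped in a hypothetical helper named refresh_packages(). It uses old.packages() to see what is out of date and then hands that result to update.packages(). The ask argument controls whether you get the per-package prompt described above.

```r
# Hypothetical helper (not part of R): list outdated packages, then update them.
# old.packages() returns NULL when everything is current, otherwise a matrix
# with one row per outdated package (columns include Installed and ReposVer).
refresh_packages <- function(ask = TRUE) {
  outdated <- old.packages()
  if (is.null(outdated)) {
    message("All packages are up to date")
    return(invisible(character(0)))
  }
  print(outdated[, c("Package", "Installed", "ReposVer"), drop = FALSE])
  # update.packages() accepts the old.packages() matrix via oldPkgs;
  # ask = FALSE installs every update without the per-package prompt
  update.packages(oldPkgs = outdated, ask = ask)
  invisible(outdated[, "Package"])
}

# Usage (needs network access and a CRAN mirror):
# refresh_packages()             # prompts for each package
# refresh_packages(ask = FALSE)  # installs all updates unattended
```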

Many packages include either demos, data files or both. The demos walk you through some examples of how to use the functions provided by the package in question. To see what demos are available, you can call demo() (Figure 6). To run a particular demo, for example the nlm demo, you would run demo(nlm).
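
You can also list the demos for a single package, which is handy in scripts. This sketch assumes the nlm demo from the stats package, which ships with R. The demo itself runs only in an interactive session, so a batch run does not print the whole thing.

```r
# List the demos a package provides without running them
d <- demo(package = "stats")
print(d$results[, c("Item", "Title")])

# Run one demo; ask = FALSE skips the "Hit <Return>" pauses.
# Guarded by interactive() so a batch run (Rscript) skips it.
if (interactive()) {
  demo("nlm", package = "stats", ask = FALSE)
}
```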

Many packages also include sample data files that you can use when you are learning to use the new functions. To see what data files are available, you would call data() (Figure 7). To load a particular data file, you need to call data with the data file you are interested in. For example, if you want to play with water levels in Lake Huron, you would call data(LakeHuron). You can get more information on the data, including a description and a list of the variables available, by running ?LakeHuron (Figure 8).
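
For instance, the snippet below lists the data sets in the datasets package that comes with R (LakeHuron lives there) and takes a first look at the Lake Huron series. It is a quick sketch. str() and summary() are just one reasonable way to start exploring.

```r
# List the data sets in the "datasets" package that ships with R
ds <- data(package = "datasets")
print(head(ds$results[, c("Item", "Title")]))

# Load Lake Huron water levels and take a first look
data(LakeHuron)
str(LakeHuron)            # a ts (time series) object with one value per year
print(summary(LakeHuron))
print(tsp(LakeHuron))     # start year, end year, frequency
```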

So far, I've been looking at dealing with individual packages, but sometimes you need functions provided by several different packages. R handles this with task views: groups of packages that are all useful for a particular area of research. If you are interested in using task views, start by installing the ctv package. In R, run install.packages("ctv") to install the main task view package.

Once that's done, you can load the library with library("ctv"). This gives you new functions for installing and updating whole views. To install a view, like the Graphics view, you simply can run install.views("Graphics"). You can update a view as a whole with update.views(), passing it the view name, as in update.views("Graphics"). These task views, like all of the packages, are written and maintained by other users like yourself. So, if you have some area of research that isn't being served right now, you can step in and organize a new view yourself.
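
Put together, the task-view workflow looks roughly like this sketch. setup_view() is a hypothetical helper name, the mirror URL is an assumption, and running it needs network access. install.views() and update.views() are the ctv functions described above.

```r
# Hypothetical helper (not part of ctv): install or refresh one CRAN task view.
# Needs network access; installs ctv itself first if it is missing.
setup_view <- function(view = "Graphics", update = FALSE,
                       repos = "https://cloud.r-project.org") {
  if (!requireNamespace("ctv", quietly = TRUE)) {
    install.packages("ctv", repos = repos)
  }
  if (update) {
    ctv::update.views(view, repos = repos)   # install missing or outdated packages
  } else {
    ctv::install.views(view, repos = repos)  # install every package in the view
  }
}

# Usage:
# setup_view("Graphics")                 # first time
# setup_view("Graphics", update = TRUE)  # later, to pick up updates
```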

Up to this point, I've been discussing how to use packages that have been written and provided by other people. But, if you are doing original research, you may end up developing totally new techniques and algorithms. Science and knowledge advance when we share with others, so R tries to make it easy to create your own packages and share them with others through CRAN. There is a fixed directory layout where you put all of your code: R code goes in R/, documentation in man/, data sets in data/ and any compiled code in src/. You also need to include a file called “DESCRIPTION” that describes your package. An example of this file looks like:

    Package: pkgname
    Version: 0.5-1
    Date: 2011-01-01
    Title: My first package
    Authors@R: c(person("Joe", "Developer", email = "me@dot.com"),
        person("A.", "User", role = "ctb", email = "you@dot.com"))
    Author: Joe Developer <me@dot.com>, with contributions from A.
        User <you@dot.com>
    Maintainer: Joe Developer <me@dot.com>
    Depends: R (>= 1.8.0), nlme
    Suggests: MASS
    Description: A short (one paragraph) description.
    License: GPL (>= 2)
    URL: http://www.r-project.org, http://www.somesite.com
    BugReports: http://bugtracker.com
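
Rather than creating the layout by hand, you can let R generate a skeleton for you. The sketch below runs utils::package.skeleton() on a hypothetical hello() function and writes into a temporary directory. It then reads the generated DESCRIPTION back with read.dcf(). The generated files contain placeholder text that you would still need to edit before the package passes R CMD check.

```r
# A throwaway function to package (hypothetical)
env <- new.env()
assign("hello", function(who = "world") paste("Hello,", who), envir = env)

# package.skeleton() writes the standard directory layout for us
root <- file.path(tempdir(), "skeleton-demo")
dir.create(root, showWarnings = FALSE)
utils::package.skeleton(name = "pkgname", list = "hello",
                        environment = env, path = root, force = TRUE)

pkg_dir <- file.path(root, "pkgname")
print(list.files(pkg_dir, recursive = TRUE))

# DESCRIPTION is a plain "Field: value" (DCF) file; read.dcf() parses it
desc <- read.dcf(file.path(pkg_dir, "DESCRIPTION"))
print(desc[, c("Package", "Version")])
```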

Once you have all of your code and data files written and packaged, you can go ahead and run a check on your new package by running the command R CMD check /path/to/package on the command line. This runs through some standard checks to make sure everything is where R expects things. Once your package passes the checks, you can run R CMD build /path/to/package to see if R can build your package properly. This is especially important if you have external code in another programming language. Once your package passes the checks and builds correctly, you can bundle it up as a tarball, upload it to CRAN.R-project.org/incoming via anonymous FTP and then send an e-mail to CRAN@R-project.org. Once your package has been checked by someone at CRAN to verify that it builds correctly, your newly created package will be added to the repository. Fame and fortune will be soon to follow.
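
The two commands can be scripted from R too. This sketch uses a hypothetical helper named build_and_check(). It runs R CMD build first and then R CMD check --as-cran on the resulting tarball, because CRAN's policy asks submitters to check the tarball they actually upload. It assumes a Unix-like system where the R executable lives in R.home("bin").

```r
# Path to the R executable running this session (Unix-like systems)
r_bin <- file.path(R.home("bin"), "R")

# Hypothetical helper (not part of R): build the tarball, then check it
build_and_check <- function(pkg_dir, as_cran = TRUE) {
  # R CMD build writes <Package>_<Version>.tar.gz into the working directory
  status <- system2(r_bin, c("CMD", "build", shQuote(pkg_dir)))
  if (status != 0) stop("R CMD build failed")
  desc <- read.dcf(file.path(pkg_dir, "DESCRIPTION"))
  tarball <- sprintf("%s_%s.tar.gz", desc[1, "Package"], desc[1, "Version"])
  check_args <- c("CMD", "check", if (as_cran) "--as-cran", shQuote(tarball))
  status <- system2(r_bin, check_args)
  if (status != 0) stop("R CMD check failed")
  invisible(tarball)
}

# Usage:
# build_and_check("/path/to/package")
```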

Hopefully this article has provided enough information to help you get even more work done in R. And remember, we all progress when we share, so don't hesitate to add to the functionality available to the whole community.

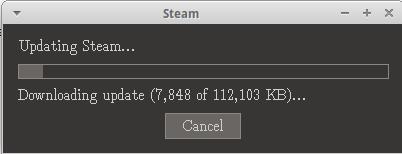

After months of me promising Steam would be coming to Linux, the public beta is finally here. The early verdict: it's pretty great! The installer is a simple pre-packaged .deb file for Ubuntu (or Xubuntu in my case), and the user portion of the install looks very much like it does on Windows or Macintosh. In my limited testing, I've found the Steam beta to be at least as stable as Desura. I also was impressed with the large number of my Steam games that have Linux versions ready to download and play.

If you were under the impression that Steam was going to be the next Duke Nukem Forever, I'm happy to say that you (and I) were wrong. Steam is finally coming to Linux, which has the potential to change the way Linux users play games. It also means fewer reboots into Windows just to shoot a few zombies! Check it out at www.steamforlinux.com.

It does not do to leave a live dragon out of your calculations, if you live near him.

—J. R. R. Tolkien, The Hobbit

A goal without a plan is just a wish.

—Antoine de Saint-Exupéry

In preparing for battle I have always found that plans are useless, but planning is indispensable.

—Dwight D. Eisenhower

Someone's sitting in the shade today because someone planted a tree a long time ago.

—Warren Buffett

Everybody has a plan until they get punched in the face.

—Mike Tyson