A chat with Matt Bunting, developer of the hexapod robot.

Linux is ubiquitous these days. It powers large-scale HPC systems and small embedded devices—from space to underground. And although it's quite easy (to some degree) to build an unmanned device like a rover or to run a helicopter with Linux, it still is a tough job to create a robot that behaves like a biological object. Fortunately, there's a will and there's a way—and there's Linux. The latter became the core of the hexapod—the robotics device that resembles a spider, developed by Matt Bunting.

Matt Bunting

AB: Matt, you're the creator of the hexapod robot that runs on Linux. But before talking about the robot, tell us about your Linux experience and the role Linux plays in your life.

MB: The field of robotics is what introduced me to the world of Linux. I had previously done basic development in UNIX, so the transition of switching my OS to Linux was seamless. Currently, I use Linux for most things involving development, such as robotics projects and tinkering around with OpenCV. I have a couple Atom-based Linux computers at my house running a custom Webcam-based security system.

AB: What is the hexapod in general, and what ideas were behind its design?

MB: A basic hexapod is a six-legged platform. Each leg can be configured in any number of ways. One such way is a Stewart platform. The version I made is a portable platform (body) with non-rigid feet. Each foot in a portable platform may have any number of degrees of freedom (DOF). Some of the simplest hexapods have three total degrees of freedom, but these are constrained to very simple motions like moving only forward or backward. For incredible flexibility of the platform, three DOF (18 total) are needed per leg to position each “foot” at nearly any given (x,y,z) coordinate. This allows the hexapod to have complete flexibility of the body, so the body may translate or tilt in any direction, in any combination. Recently, there has been an interest in four degrees of freedom per leg. This does not increase the amount of body control, but it can extend the range. I wanted to design the 18 DOF for the best flexibility of the body to use the hexapod for various applications, from implementation of neural networks (NNs) to terrain adaptation methods.
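To make the "three DOF per leg" point concrete, here is a minimal inverse-kinematics sketch for a single leg. It is not the kinematics code discussed in the interview: the link lengths, the joint names (`yaw`, `shoulder`, `knee`) and the coordinate frame (hip at the origin, z pointing up) are all assumptions chosen for illustration.

```python
import math

# Hypothetical link lengths in metres (not taken from the actual robot).
COXA, FEMUR, TIBIA = 0.03, 0.08, 0.12

def _clamp(c):
    """Keep a cosine inside [-1, 1] despite rounding error."""
    return max(-1.0, min(1.0, c))

def leg_ik(x, y, z):
    """Joint angles (yaw, shoulder, knee) in radians for a foot at (x, y, z)."""
    yaw = math.atan2(y, x)                 # joint 1: swing the leg horizontally
    r = math.hypot(x, y) - COXA            # horizontal reach past the coxa link
    d = math.hypot(r, z)                   # straight-line distance to the foot
    if not abs(FEMUR - TIBIA) <= d <= FEMUR + TIBIA:
        raise ValueError("foot target out of reach")
    # Law of cosines on the femur/tibia triangle.
    inner = math.acos(_clamp((FEMUR**2 + TIBIA**2 - d**2) / (2 * FEMUR * TIBIA)))
    lift = math.acos(_clamp((FEMUR**2 + d**2 - TIBIA**2) / (2 * FEMUR * d)))
    shoulder = math.atan2(z, r) + lift     # joint 2: femur angle from horizontal
    knee = inner - math.pi                 # joint 3: 0 means a straight leg
    return yaw, shoulder, knee

def leg_fk(yaw, shoulder, knee):
    """Forward kinematics, used here only to check the solution."""
    r = COXA + FEMUR * math.cos(shoulder) + TIBIA * math.cos(shoulder + knee)
    z = FEMUR * math.sin(shoulder) + TIBIA * math.sin(shoulder + knee)
    return r * math.cos(yaw), r * math.sin(yaw), z

angles = leg_ik(0.10, 0.05, -0.08)
foot = leg_fk(*angles)   # should reproduce the requested foot position
```

Three joints give exactly three unknowns for the three coordinates of the foot, which is why six such legs add up to 18 DOF.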

AB: And you have succeeded in this design—a spider with 18 DOF, right?

MB: I would certainly say so. Currently, it is a very solid design that can take quite a bit of abuse. Though it is fully functional, I'm always looking for ways to upgrade it. I have some ideas floating around, but haven't seriously integrated anything yet.

AB: Can someone achieve these goals with another kind of a robot, say with a rover?

MB: Since my goal was to research legged locomotion techniques, a wheeled rover doesn't make sense for what I want to do in robotics. Sometimes I am asked what the practical applications of a hexapod are, but for many cases, a hexapod is impractical. Mine is terribly power-inefficient, and the learning techniques I have used take too long to be effective for anything practical, like sending a hexapod on a space mission or to fix a burst underwater pipe.

The legged aspect and complexity of a hexapod is interesting in the area of machine learning because the complexity makes it difficult to learn efficiently. Wheeled robots may learn locomotion very quickly, which does not generalize the effectiveness of the algorithms involved. One aspect of machine learning I was interested in included the ability of the machine to re-learn after damage to the robot. For example, if the robot learned to walk, began walking in a dangerous environment and a rock were to crush two legs, then the algorithms implemented could sense that the previously used motions are not very effective, and it could begin to learn an optimal walking policy based on the new configuration.

AB: Describe how you “teach” a hexapod to walk. Previously, you mentioned NNs and adaptation methods. Do you load some basic behavior functions into software, and later the spider tries to find the optimal walk for every kind of a terrain, or is there another approach?

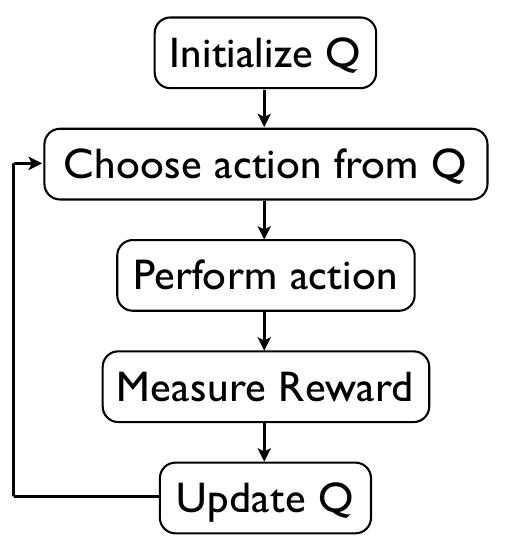

MB: I have, so far, explored two different learning mechanisms, both of which learn in very different ways. The first was a reinforcement learning technique called Q-Learning with Softmax action selection. Basically, the hexapod would experiment between different motor states. Starting from one state, the algorithm applies Softmax to the current Q-matrix for action selection probability and chooses one based on a random number generator. The values in the Q-matrix with higher values (good transitions to move forward) are more likely to be selected, while others (poor transitions, like moving backward) are not as likely to be selected. Once an action is selected, the hexapod first takes an image using a Webcam pointing in the forward direction, then performs the action. The action moves the hexapod into a new state. In the new state, another image is taken. The state transition reward is measured by the difference between the two images.

I used a function in OpenCV to measure optic flow. The resulting vector field from the optic flow function is run through some simple math to determine how the hexapod moved based on the overall directionality of the vectors. For example, if the resulting image had a sense of “zooming in” from moving the hexapod forward, all the vectors would point outward from the center of the image. This translates to a high reward. If the vectors are pointing toward the center, the hexapod moved backward, resulting in a cost or negative reward. I also included twisting of the body and translational movements of the camera as negative rewards because the goal was to get it to walk forward very smoothly.
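The "simple math" on the flow vectors is not spelled out in the interview, so the following is only one plausible sketch. It takes a flow field as plain `(x, y, dx, dy)` samples rather than calling any OpenCV function, and the function name `flow_reward` and both penalty weights are assumptions.

```python
import math

def flow_reward(flow, width, height, twist_weight=1.0, shift_weight=1.0):
    """Score a flow field given as (x, y, dx, dy) samples.

    Outward (expanding) flow raises the score; rotation about the image
    centre and net sideways drift lower it. The weights are hypothetical.
    """
    cx, cy = width / 2.0, height / 2.0
    radial = tangential = sum_dx = sum_dy = 0.0
    counted = 0
    for x, y, dx, dy in flow:
        sum_dx += dx
        sum_dy += dy
        rx, ry = x - cx, y - cy
        dist = math.hypot(rx, ry)
        if dist == 0.0:
            continue  # the centre point has no radial direction
        radial += (dx * rx + dy * ry) / dist       # component pointing outward
        tangential += (rx * dy - ry * dx) / dist   # component circling the centre
        counted += 1
    if counted == 0:
        return 0.0
    drift = math.hypot(sum_dx / len(flow), sum_dy / len(flow))
    return (radial / counted
            - twist_weight * abs(tangential / counted)
            - shift_weight * drift)

# Synthetic fields on a symmetric grid of sample points.
points = [(x, y) for x in range(10, 100, 20) for y in range(10, 100, 20)]
zoom_in = [(x, y, 0.1 * (x - 50), 0.1 * (y - 50)) for x, y in points]
zoom_out = [(x, y, -dx, -dy) for x, y, dx, dy in zoom_in]
slide = [(x, y, 1.0, 0.0) for x, y in points]
```

With these synthetic fields, `zoom_in` scores positive (forward motion), while `zoom_out` and `slide` score negative, matching the reward scheme described above.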

The reward value is used to update the Q-matrix. Based on the transition reward and possible future rewards from the new state, the Q-value is updated. Softmax action selection gives a nice balance between explorative and exploitative behavior. Once a gait is well learned, there is a small probability that the hexapod will explore other state transitions. If, however, the hexapod were to break a leg and apply a previously learned method, the reward values would decrease tremendously. With the newly updated Q-matrix, the hexapod would have a low probability of choosing the previously learned transitions and would begin to experiment with others.

Q-Learning Technique Algorithm
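As a rough illustration of the loop described above, here is a small Q-learning sketch with Softmax action selection. It is not the code that ran on the hexapod: the number of states, the learning rate, discount and temperature values, and especially `toy_reward` (which stands in for the camera-based reward) are assumptions.

```python
import math
import random

N_STATES = 4  # hypothetical number of motor states; action a means "move to state a"

def softmax_probs(q_row, temperature):
    """Turn one row of the Q-matrix into action-selection probabilities."""
    top = max(q_row)  # subtract the maximum for numerical stability
    exps = [math.exp((q - top) / temperature) for q in q_row]
    total = sum(exps)
    return [e / total for e in exps]

def toy_reward(state, next_state):
    """Stand-in for the optic-flow reward (an assumption, not the real sensor)."""
    if next_state == (state + 1) % N_STATES:
        return 1.0    # pretend this transition pushes the body forward
    if next_state == (state - 1) % N_STATES:
        return -1.0   # pretend this one drags it backward
    return 0.0

def train(steps=5000, alpha=0.1, gamma=0.9, temperature=0.5, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * N_STATES for _ in range(N_STATES)]
    state = 0
    for _ in range(steps):
        # Softmax over the current row, then a random draw picks the action.
        probs = softmax_probs(q[state], temperature)
        action = rng.choices(range(N_STATES), weights=probs)[0]
        next_state = action
        reward = toy_reward(state, next_state)
        # Standard Q-learning update: reward plus discounted best future value.
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
    return q

q_matrix = train()
greedy = [max(range(N_STATES), key=lambda a: q_matrix[s][a]) for s in range(N_STATES)]
```

In this toy setup the "forward" transition ends up with the highest Q-value in every row, while the other transitions keep a small but nonzero selection probability, which is the explore/exploit balance the answer describes.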

The NN is very different. I based a good portion of the network on Randall Beer's work on a neural-network-based hexapod. The neurons I used were different, more biologically influenced. I believe that Beer used continuous neurons, which were differentiable, but the ones I used were integrate-and-fire neurons with adaptation. They don't truly mimic biology, but they are much closer than continuous-based neurons. I constructed something similar to Beer's network, but I had to add additional neurons to move the legs around. The network is based around an oscillator called a Central Pattern Generator (CPG), also called a Pacemaker Neuron. These are created by mutually inhibiting two neurons. One begins to fire, preventing the other from firing, but slows down due to adaptation, and eventually the other neuron will begin to fire, inhibiting the first one, and so on. These CPGs are used to drive other neurons that operate the legs.
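The mutual-inhibition oscillator can be sketched with two leaky integrate-and-fire neurons that each carry an adaptation current. This is a toy model, not the network used on the hexapod: every time constant, weight and the simple Euler integration below are assumptions picked so that the two neurons take turns.

```python
def simulate_cpg(duration_ms=2000.0, dt=0.1):
    """Return a list of (time_ms, neuron_index) spikes for a two-neuron CPG."""
    tau_m, tau_a, tau_s = 10.0, 200.0, 20.0   # membrane, adaptation, synapse (ms)
    threshold, drive = 1.0, 0.2               # firing threshold and constant input
    adapt_step, inhibit_w = 0.02, 0.15        # per-spike adaptation, inhibition weight
    v = [0.5, 0.0]   # membrane potentials; the head start lets neuron 0 fire first
    a = [0.0, 0.0]   # adaptation currents
    s = [0.0, 0.0]   # synaptic traces (recent spiking of each neuron)
    spikes = []
    for k in range(int(duration_ms / dt)):
        fired = []
        for i in (0, 1):
            other = 1 - i
            # Input is reduced by the neuron's own adaptation and by the
            # other neuron's recent activity (mutual inhibition).
            current = drive - a[i] - inhibit_w * s[other]
            v[i] += dt * (-v[i] / tau_m + current)
            v[i] = max(v[i], 0.0)             # floor keeps inhibition bounded
            if v[i] >= threshold:
                fired.append(i)
        for i in (0, 1):
            a[i] -= dt * a[i] / tau_a         # adaptation decays slowly
            s[i] -= dt * s[i] / tau_s         # synaptic trace decays faster
        for i in fired:
            v[i] = 0.0                        # reset after a spike
            a[i] += adapt_step                # each spike makes the next one harder
            s[i] += 1.0                       # and inhibits the other neuron
            spikes.append((k * dt, i))
    return spikes

spikes = simulate_cpg()
counts = [sum(1 for _, i in spikes if i == n) for n in (0, 1)]
```

The intended behaviour is the one described above: one neuron fires and suppresses the other, adaptation slows it down, and the suppressed neuron then takes over. Printing `spikes` is the easiest way to inspect how the bursts actually alternate with these particular constants.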

To learn the weights for each connection, I used a genetic algorithm. I used a population size of 16, and the fitness function was based on running the hexapod for 10 seconds and measuring the traveled distance and resulting direction angle. The closer the resulting angle was to the starting orientation and the farther the distance traveled, the better the fitness value. The hexapod learned to walk very effectively after 100 generations.

AB: You mean 100 iterations of the learning, or 100 steps here?

MB: In genetic algorithms, this is the number of generations, which can be thought of as iterations in a way. In each generation, a new population of 16 is created.
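The generational loop can be sketched as follows. Only the population size of 16, the 100 generations and the idea of scoring distance against heading error come from the interview. The genome length, the selection, crossover and mutation scheme, the elitism, and `toy_trial` (a stand-in for a real 10-second walking trial) are all assumptions.

```python
import random

POP_SIZE = 16        # from the interview
GENERATIONS = 100    # from the interview
N_WEIGHTS = 8        # hypothetical number of connection weights

def toy_trial(weights):
    """Stand-in for a 10-second walk: returns (distance, heading_error)."""
    half = len(weights) / 2
    left = sum(weights[0::2]) / half     # pretend even weights drive the left side
    right = sum(weights[1::2]) / half    # and odd weights drive the right side
    return (left + right) / 2, left - right

def fitness(weights):
    distance, heading_error = toy_trial(weights)
    return distance - abs(heading_error)  # far and straight scores best

def evolve(seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(N_WEIGHTS)]
                  for _ in range(POP_SIZE)]
    history = []
    for _ in range(GENERATIONS):
        ranked = sorted(population, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        parents = ranked[:POP_SIZE // 4]       # keep the top quarter as parents
        population = [ranked[0][:]]            # elitism: carry the best over unchanged
        while len(population) < POP_SIZE:
            mum, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, N_WEIGHTS)  # one-point crossover
            child = mum[:cut] + dad[cut:]
            # Gaussian mutation, clipped to the allowed weight range.
            child = [min(1.0, max(-1.0, w + rng.gauss(0, 0.1))) for w in child]
            population.append(child)
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history

best_weights, best_history = evolve()
```

Because the best individual is copied into each new population of 16, the best fitness in `best_history` never decreases from one generation to the next. On the real robot each fitness evaluation would be a physical trial rather than a function call.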

AB: Several sources say that the robot was assembled from trash parts. Is that true?

MB: This is a bit misinterpreted by many people, because they think that the videos I posted on YouTube are where I am at now, not where I was when I started. The hexapod began as a project for a class, and I had limited money, time and resources. I (like any hobbyist, maker or hacker) have a drawer full of parts I've been collecting for years. I started this drawer when I was nine years old, and my mentor at the time called it the “things-I-don't-know-what-they-are-but-they-may-be-useful-someday drawer”. So when the time came for the class project, I searched through all the servo motors, breadboards and metal brackets I had and threw together a hexapod of mismatched legs to implement the reinforcement technique called Q-learning. This was not the hexapod I put on YouTube.

The professor of the class, Dr Tony Lewis, really liked the project and was looking to hire an undergrad into his lab. I also needed a job so he hired me into his lab, the Robotics and Neural Systems Laboratory (RNSL). At RNSL, there is a Dimension 3-D printer, so I took advantage of it and saved up my money to buy matching motors. I gave the hexapod a complete makeover (including a new Atom-based fit-PC2) with no junk parts, and then I posted a video on YouTube. This is the video that Stewart Christie of Intel saw. Also, the video shows no learning at all. It was just a demonstration to forum members at hexapodrobot.com/forum of my platform in action.

AB: Linux is the key to success for the hexapod. Why did you decide to use Linux, and what particular distribution was the final choice?

MB: I wanted ultimate onboard computational power for vision processing, which led to fit-PC2 from CompuLab. At RNSL, Ubuntu is the distribution of choice and is used for everything from simulations to direct robot operation. We use Linux because of the flexibility and the available resources.

AB: The main hardware unit, an Atom motherboard, was donated by Intel. Could you shed some light on your cooperation with Intel?

MB: Originally, I had purchased the computer before any cooperation with Intel. At the time of searching for an embedded solution, I was looking into Gumstix boards and Via processor-based boards. These were great for kinematics integration, but for heavy computation of vision, I needed something even more powerful. Fortunately at the time, CompuLab had just released its fit-PC2, which is the ideal computer for robotics. Once I posted the video, a couple days later, Stewart from Intel wrote a comment on my YouTube video regarding interest in the hexapod. I contacted him, and after a month or so, he said that Intel would want me to create two hexapods, one for tradeshows and the other for me, but the Intel folks can borrow mine if they have multiple demonstrations at the same time.

AB: Speaking practically, the hexapod is actually a high-tech model, because the use of 3-D Stratasys printers won't be available for casual amateurs. You've made a model—that is, a framework and legs in CAD software—printed it and attached servos and a camera to the body and that's it. Am I missing something?

MB: 3-D printing has become incredibly cheap, to the point where hobbyists can make their own home 3-D printer for around $1,000. The quality is not anywhere near what the Stratasys machine I use can do, but functional parts still may be created. My hexapod is not very different functionally from other hexapods that can be put together with off-the-shelf components, but since I had the Stratasys machine at my disposal, I took full advantage of it and tried to make complex, curved shapes. I actually used SolidWorks for the mechanical design, because it is freely offered by my university, but I will have to look into QCad.

AB: The robot without its middleware is just another embedded Linux device. Did you use a specific software for your “spider”, or did you write software for it?

MB: I developed all the software for the hexapod in C++, using OpenCV libraries for simulations and vision processing.

AB: Can you estimate how much time (in hours) it took to construct the hexapod (put together the mechanical and electrical parts) and write the software itself?

MB: Ha ha. It is an enormous amount for sure. I worked on it for hours a day, weeks at a time. The original Q-Learning robot probably took 200 hours. Then I gave it a makeover, which took maybe 100 hours, and then the other projects took maybe 80 each.

AB: You said previously that the operating system beneath the cover is Ubuntu. What IDE did you use to write the robot's middleware—Qt, WxWidgets, ncurses or something else?

MB: I actually use only GEdit and purely in C++ using OpenCV libraries. The simulations I built were done purely using OpenCV, drawing individual lines at a time. I feel like I re-invent the wheel when I do this, but I sure learn a lot!

AB: Do you release schematics and auxiliary software of a robot under the GPL or under another license?

MB: I have been looking to release my kinematics code under a license, but I know nothing in this area. Maybe LJ readers could point me in the right direction?

AB: You're a senior student at the University of Arizona, and this project is to some degree your graduation work. Do you plan to continue its future development?

MB: I look forward to implementing more functionality into the hexapod as a graduate student at the University of Arizona. Currently, I am working on a miniature version. I do want to explore the link between vision and legged locomotion through the use of biologically inspired neural networks further.

AB: Where, in your opinion, can this “spider” be used and be useful for people?

MB: The hexapod could make a great search-and-rescue-style robot. In a natural disaster like an earthquake, it would be desirable to have a large number of robots searching autonomously for survivors in a building turned to rubble. The environment is treacherous though and could cause damage to the robot. Once damage is inflicted upon the hexapod, a purely inverse kinematic-based hexapod would be rendered useless. If, however, it could re-learn, the hexapod still could operate given its new configuration. Like I mentioned previously though, it is more of a tool to explore machine learning techniques and out of the research, hopefully discover faster algorithms. Hopefully, better algorithms can be used for consumer-based robotics to increase the standard.

AB: Do you plan to use the hexapod or your next prototype CrustCrawler in autonomous walking research?

MB: Sure. The learning mechanisms I've implemented are more scientific, and I certainly encourage everyone to explore science with robotics platforms, but it is not necessarily the goal for the CrustCrawler platform. I see that as a means of making it easy for anyone to get started in the field of hexapod robotics. If the different geometry is more conducive to what I want the hexapod to do, then I will, of course, use it. The hexapods are simply tools in my mind for research. I certainly want my hexapods to be able to roam around on their own and accomplish some task even if it is mundane. The nice thing about the CrustCrawler hexapod and my personal hexapod is that they can be easily interchanged, as they use motors that communicate using identical protocols. All I have to do is change a few geometric calibration settings and it will run just fine.