IBM and Los Alamos National Lab built Roadrunner, the world's fastest supercomputer. It not only reached a petaflop, it beat that by more than 10%. This is the story behind Roadrunner.

In 1995, the French threw the world into an uproar. Their testing of a nuclear device on Mururoa Atoll in the South Pacific unleashed protests, diplomatic friction and a boycott of French restaurants worldwide. Thanks to many developments—among them Linux, hardware and software advances and many smart people—physical testing has become obsolete, and French food is back on the menu. These developments are manifested in Roadrunner, currently the world's fastest supercomputer. Created by IBM and the Los Alamos National Laboratory (LANL), Roadrunner models precise nuclear explosions and other aspects of our country's aging nuclear arsenal.

Although modeling nuclear explosions is necessary and interesting to some, the truly juicy characteristic of the aptly named Roadrunner is its speed. In May 2008, Roadrunner accomplished the almost unbelievable—it operated at a petaflop. I'll save you the Wikipedia look-up on this one: a petaflop is one quadrillion (that's one thousand trillion) floating-point operations per second. That's more than double the speed of the long-reigning performance champion, IBM's 478.2-teraflop Blue Gene/L system at Lawrence Livermore National Lab.

Besides the petaflop achievement, the story behind Roadrunner is equally incredible in many ways. Elements such as Roadrunner's hybrid Cell-Opteron architecture, its applications, its Linux and open-source foundation, its efficiency, as well as the logistics of unifying these parts into one speedy unit, make for a great story. This being Linux Journal's High-Performance Computing issue, it seems only fitting to tell the story behind the Roadrunner supercomputer here.

“You want to talk about challenges; it is the logistics of dealing with this many pounds of stuff”, said Don Grice, IBM's lead engineer for Roadrunner. “The folks in logistics have it down to a science.” By the time you read this, the last of Roadrunner's 17 sections with 180 compute nodes—250 tons of “stuff” on 21 semitrucks—will have left IBM's Poughkeepsie, New York, facility, bound for Los Alamos National Laboratory's Nicholas Metropolis Center in New Mexico.

Figure 2. Early Bird's-Eye View of Roadrunner (Phase 1 Test Version) in Spring 2007 at Los Alamos National Lab

The petaflop accomplishment occurred at “IBM's place”, where the machine was constructed, tested and benchmarked. In reality, Roadrunner achieved 1.026 petaflops—merely 26 trillion additional floating-point calculations per second beyond the petaflop mark. Roadrunner's computing power is equivalent to 100,000 of today's fastest laptops.
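For readers who want to check the arithmetic, here is a minimal Python sketch that uses only the figures quoted in this article. The variable names are illustrative and not taken from any IBM or LANL tool.

```python
# Figures quoted in the article; the names are illustrative only.
PETAFLOP = 1.0e15             # one quadrillion floating-point operations per second
ROADRUNNER_FLOPS = 1.026e15   # Roadrunner's benchmarked result
BLUE_GENE_L_FLOPS = 478.2e12  # previous champion, 478.2 teraflops

# How far past the petaflop mark did Roadrunner land?
excess_flops = ROADRUNNER_FLOPS - PETAFLOP

# How does it compare with Blue Gene/L?
ratio_to_blue_gene = ROADRUNNER_FLOPS / BLUE_GENE_L_FLOPS

print(f"Beyond the petaflop mark: {excess_flops / 1e12:.0f} trillion flops")
print(f"Ratio to Blue Gene/L: {ratio_to_blue_gene:.2f}x")
```

The excess works out to the 26 trillion operations per second mentioned above, and the ratio comes to a little over two, which matches the "more than double" claim.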

Roadrunner is one of the most complex projects undertaken by IBM and its partners. IBM produced each of Roadrunner's two server blades in two different locations and assembled them into so-called tri-blades in a third. The tri-blades then were shipped to Poughkeepsie to become part of Roadrunner. Despite this logistical hurdle, the project was completed on schedule and on budget.

IBM also had to find partners for the entire interconnect fabric, make it scale and obtain the desired performance. The company also worked with various Linux and other open-source communities to build a coherent software stack. Fears that the high level of coordination among partners, such as Emcore, Flextronics, Mellanox and Voltaire, wouldn't work out were proved unfounded. “They all pulled together in a tremendous, tremendous way”, said Grice. “There isn't any aspect of the machine that isn't doing what it was supposed to.”

Of course, a project of Roadrunner's magnitude requires many smart people at both IBM and LANL, who have collaborated for six years to develop and build it. The team at LANL consisted of 171 people, with a group of similar size on the IBM side. “Los Alamos and IBM have formed a very close partnership”, commented Andy White, LANL's project leader. “We have been able to work together to work through many problems”, he added.

According to White, the project planning began in 2002, when LANL decided to pursue supercomputers with accelerators (in the end, the Cell processors) to meet its modeling needs. The team had begun hearing about the Cell processor and was intrigued by the potential for its applications. LANL determined that it essentially wanted a very large Linux cluster and realized that with the accelerators, it could reach the petaflop.

IBM and LANL jointly worked on Roadrunner's overall design; IBM implemented the code, namely the Accelerated Library Framework (ALF) and the Data Communication and Synchronization (DaCS) library for hybrid systems. The Los Alamos group was tasked with ensuring that its applications would run on the machine. The system modeling group spent an entire year analyzing its applications and the performance characteristics of the machine, making sure that both LANL's classified work and all kinds of interesting science applications would work well. The group built four applications related to its nuclear-physics modeling. These include the Implicit Monte Carlo (IMC) code (the Milagro application suite) for simulation of thermal radiation propagation, the Sweep3D kernel, the SPaSM molecular dynamics code and the VPIC particle-in-cell plasma physics code. LANL's White says that these applications were the basis for asking the question “Can we program [Roadrunner] and can we get accelerated performance on this system?”

There was no shortage of significant intellectual challenges in making Roadrunner do its job. One was to prove that the aforementioned applications could run on an accelerated Roadrunner without having one to run on! In September 2006, IBM delivered a base system to LANL for testing, but without accelerators. The applications could be tested on the Cell processor but not on the complete node or system. White explained:

The performance and architecture laboratory team actually was able to model the entire system [complete with acceleration] and predict pretty much dead-on what has happened when the code was run on the full system. The fact that we were able to pass two serious technical assessments in October [2007] and show people that we can program the machine, the codes can get good speed up, they're accelerated, and we can manage the machine, etcetera, without actually having the machine on hand, I think was a tour de force.

Another challenge involved the networking. While working with the base system in late 2006 and early 2007, the concern arose that Roadrunner's computing horsepower would cause the network to be a bottleneck. Thus, said White, “the nodes were redesigned in-flight”, with the new ones offering a 400% increase in performance from the Opteron to the Cell processors, as well as out to the network, vis-à-vis the original design. All of this was done at the same original contract price.

The $110 million Roadrunner was completed on schedule, just in time to qualify for the June 2008 edition of the Top 500 list of the world's most powerful computer systems.

You may be surprised to learn that Roadrunner was built 100% from commercial parts. The secret formula to its screaming performance involves two key ingredients, namely a new hybrid Cell-Opteron processor architecture and innovative software design. Grice emphasized that Roadrunner “was a large-scale thing, but fundamentally it was about the software”.

Despite that claim, the hardware characteristics remain mind-boggling. Roadrunner is essentially a cluster of clusters of Linux Opteron nodes connected with MPI and a parallel filesystem. It sports 6,562 AMD dual-core Opteron 2210 1.8GHz processors and 12,240 IBM PowerXCell 8i 3.2GHz processors. The Opteron's job is to manage standard processing, such as filesystem I/O; the Cell processors handle mathematically and CPU-intensive tasks. For instance, the Cell's eight vector-engine cores can accelerate algorithms while running much cooler, faster and cheaper than general-purpose cores. “Most people think [that the Cell processor] is a little bit hard to use and that it's just a game thing”, joked Grice. But, the Cell clearly isn't only for gaming anymore. The Cell processors make each computing node 30 times faster than using Opterons alone.

LANL's White further emphasized the uniqueness of Roadrunner's hybrid architecture, calling it a “hybrid hybrid”, because the Cell processor itself is a hybrid: it has one PPU (PowerPC) core and eight SPUs. Because the PPU is “of modest performance”, as the folks at LANL politely say, they needed another core to run code that wouldn't run on the SPUs and to improve performance. Thus, the Cells are connected to the Opterons.
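The division of labor described above, with a general-purpose host handling I/O and accelerators handling the math, can be illustrated with a small Python sketch. This is only a structural analogy and not Roadrunner's actual software: the function names and the worker count are hypothetical, and Python threads stand in for accelerators without providing real parallel speedup.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical: one worker per accelerator available to the host.
NUM_ACCELERATORS = 4


def load_chunks(n_chunks, chunk_size):
    """Host-side work: a stand-in for filesystem I/O and data staging."""
    return [
        [float(i * chunk_size + j) for j in range(chunk_size)]
        for i in range(n_chunks)
    ]


def compute_kernel(chunk):
    """Accelerator-side work: a stand-in for a math-heavy inner loop."""
    return sum(x * x for x in chunk)


def run_hybrid(n_chunks=8, chunk_size=1000):
    # The host prepares the data.
    chunks = load_chunks(n_chunks, chunk_size)
    # The compute-intensive part is handed off to the worker pool.
    with ThreadPoolExecutor(max_workers=NUM_ACCELERATORS) as pool:
        partials = list(pool.map(compute_kernel, chunks))
    # The host combines the partial results.
    return sum(partials)


result = run_hybrid()
print(result)
```

The point of the sketch is the shape of the program: staging and bookkeeping stay on the host, while the inner loop is isolated in a kernel that can be dispatched elsewhere.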

The system also carries 98 terabytes of memory, as well as 10,000 InfiniBand and Gigabit Ethernet connections that require 55 miles of fiber optic cabling. 10GbE is used to connect to the 2 petabytes of external storage. The 278 IBM BladeCenter racks take up 5,200 square feet of space.

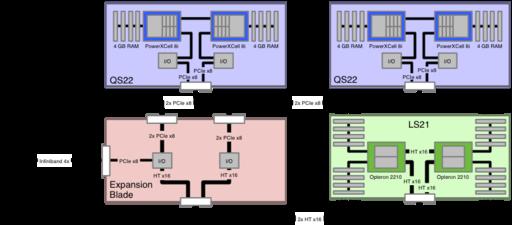

The machine is composed of a unique tri-blade configuration consisting of one two-socket dual-core Opteron LS21 blade and two dual-socket IBM QS22 Cell blade servers. Although the Opteron cores each are connected to a Cell chip via a dedicated PCIe link, the node-to-node communication is via InfiniBand. Each of the 3,456 tri-blades can perform at 400 Gigaflops (400 billion operations per second).

See Figure 3 for a schematic diagram of the tri-blade.

Figure 3. The hybrid Opteron-Cell architecture is manifested in a tri-blade setup. The tri-blade allows the Opteron to perform standard processing while the Cell performs mathematically and CPU-intensive tasks.

The hybrid, tri-blade architecture has allowed for a quantum leap in performance while utilizing the same amount of space as previous generations of supercomputers. Roadrunner takes up the same space and costs the same to operate as its two predecessors, the ASC Purple and ASC White machines. This is because performance continues to grow predictably by a factor of 1,000 every 10–11 years. Grice noted how just three of Roadrunner's tri-blades have the same power as the fastest computer from 1998. Put another way, a calculation that would take a week on Roadrunner today would be only half finished on an old 1 teraflop machine that was started in 1998.
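The comparisons in the previous paragraph can be checked with a short Python sketch. It assumes round numbers (one petaflop for Roadrunner, one teraflop for the 1998 machine, 400 gigaflops per tri-blade), so the gap between the two machines is a factor of 1,000; the function and variable names are illustrative.

```python
# Round figures taken from the article; names are illustrative only.
ROADRUNNER_FLOPS = 1.0e15   # roughly one petaflop
OLD_MACHINE_FLOPS = 1.0e12  # the "old 1 teraflop machine" from 1998
TRI_BLADE_FLOPS = 400e9     # 400 gigaflops per tri-blade


def years_on_old_machine(days_on_roadrunner):
    """Return how many years the same work would take on the 1998 machine."""
    speedup = ROADRUNNER_FLOPS / OLD_MACHINE_FLOPS
    return days_on_roadrunner * speedup / 365.25


total_years = years_on_old_machine(7)           # one week on Roadrunner
fraction_done = (2008 - 1998) / total_years     # progress after ten years
three_tri_blades = 3 * TRI_BLADE_FLOPS          # compare with 1 teraflop

print(f"{total_years:.1f} years in total, {fraction_done:.0%} done after 10 years")
print(f"Three tri-blades: {three_tri_blades / 1e12:.1f} teraflops")
```

A week of work on Roadrunner comes to roughly 19 years on the teraflop machine, so a run started in 1998 would indeed be about half finished in 2008, and three tri-blades add up to slightly more than one teraflop.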

Such quantum leaps in performance help boggle the minds of many scientists, who see their careers changing right before their eyes. If they have calculations that take too long today, they can be quite sure that in two years, the calculation will take one-tenth of the time.

Neither IBM's Grice nor LANL's White could emphasize enough the importance and complexity of the software that allows for exploitation of Roadrunner's hardware prowess. Because clock frequency and chip power have plateaued, Moore's Law will continue to hold through other means, such as with Roadrunner's hybrid architecture.

Roadrunner was put together in its full configuration on May 23, 2008. On May 26, it reached the petaflop. “Running a petaflop just three days after being assembled is pretty amazing”, said White.

Clearly a petaflop isn't the limit. The original petaflop achievement was actually 1.026 petaflops, and Roadrunner has done better since then. In June 2008, LANL and IBM ran a project called PetaVision Synthetic Cognition, a model of the brain's visual cortex that mimicked more than one billion brain cells and trillions of synapses. It reached the 1.144 petaflop mark. Calculations like these are the petaflop-level tasks for which Roadrunner is ideal.

“It's hard to overstate how exciting it is to see the science we'll be able to do with Roadrunner”, said White. In mid-2009 the bulk of Roadrunner's nodes will enter “classified” mode for the rest of its life, allowing only authorized personnel to know what it's doing. Nevertheless, scientists and their groupies will be happy to learn about some of Roadrunner's non-military duties. First, in August 2008, LANL ordered two additional connected units for Roadrunner, dubbed the Turquoise Network, which will be available and “in the open all the time”, according to White. These units should be running by October 2008. In addition, during early 2009 before Roadrunner goes classified, LANL will utilize several other so-called unclassified open science codes as test loads as part of Roadrunner's stabilization and integration process. The ten codes that have been selected for this purpose must prove their ability to work on Roadrunner. Although some of these codes are based on the above-mentioned VPIC and SPaSM, others are new and untested. “It remains to be seen whether others can write codes that actually will run on the system”, stated White.

LANL received 29 proposals for access to Roadrunner. Of the ten selected, two were weapons-related and eight were non-weapons-related. A sampling of the fascinating selected projects includes investigations of the formation of metallic nanowires with an atomic-force microscope, the phylogenetics of the early infection states of HIV and, finally, dark energy and matter.

Although the chance to utilize Roadrunner's power is enticing, one must consider the extra tweaking needed to take advantage of the hybrid architecture. “It can be tricky”, said White. With a more conventional machine, codes don't require much change from one Linux cluster to another. Fortunately, for those scientists whose proposals have been accepted, LANL is offering extra funds to support code development for the hybrid architecture. This December, LANL will evaluate the progress of each project and allocate compute time in early 2009 based on those results.

No, I didn't forget to mention that Roadrunner runs on Linux—Red Hat to be exact. From the beginning, the LANL team knew it wanted Linux due to the open nature of its mission and what it sought to accomplish. IBM's Grice added that LANL “has always been interested in Linux things, so it was a natural fit. We did think about [other operating systems] but we didn't think very hard.”

Technically, Linux was a good fit too. The teams didn't need to concern themselves with running either the Cell processor or the LS21 blade server, nor was scalability a major issue, because it doesn't come down to the node level. Rather, it is about using all of the nodes together, which means a low level of strain on the operating system. IBM's Linux Technology Center was instrumental in making Linux work on Roadrunner.

Beyond Linux, Grice praised other open-source communities for their “tremendous cooperation”. He explained how they excitedly dived into the unique challenges presented by Roadrunner and its hybrid architecture and surpassed all expectations. Some of the open-source applications include the Moab scheduler and Torque resource manager.

To the surprise of IBM and LANL, most potential software “issues” never turned into problems. However, one challenge presented by open source is the numerous streams that aren't always compatible with each other. Thus, the teams had to hold themselves back in some places and experiment in others to keep a stack that was coherent with itself. Nevertheless, the result was satisfying and scaled effectively.

“The notion that there were separate communities who all pulled together, and then it all locked in together as one whole stack, that I think is a fantastic story”, said Grice.

In general, “power and cooling are second only to the software complexity”, emphasized Grice. Power is the real problem for driving HPC forward. Roadrunner solves these issues through the efficiency of its design. Especially due to the efficiency of the Cell processors, Roadrunner needs only 2.3MW of power at full load running Linpack, delivering a world-leading 437 million calculations per Watt. This result was much better than IBM's official rating of 3.9MW at full load. Such efficiency has placed Roadrunner in third place on the Green 500 list of most efficient supercomputers.
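The efficiency figure can be roughly reproduced from the numbers in this article. The Python sketch below assumes the 1.026-petaflop Linpack result and the rounded 2.3MW power draw; the exact measured power is not given here, so the second calculation simply works backward from the quoted 437 million calculations per Watt. All names are illustrative.

```python
# Figures quoted in the article; names are illustrative only.
LINPACK_FLOPS = 1.026e15        # sustained Linpack result
POWER_WATTS = 2.3e6             # quoted full-load power, rounded to 2.3MW
QUOTED_MFLOPS_PER_WATT = 437.0  # efficiency figure quoted in the article


def mflops_per_watt(flops, watts):
    """Return millions of floating-point operations per second per Watt."""
    return flops / watts / 1e6


efficiency = mflops_per_watt(LINPACK_FLOPS, POWER_WATTS)

# Power draw implied by the quoted efficiency (an inference, not a measurement).
implied_power_watts = LINPACK_FLOPS / (QUOTED_MFLOPS_PER_WATT * 1e6)

print(f"Efficiency at 2.3MW: {efficiency:.0f} Mflops per Watt")
print(f"Power implied by 437 Mflops per Watt: {implied_power_watts / 1e6:.2f}MW")
```

With the rounded 2.3MW, the result lands slightly above the quoted figure, which suggests the measured draw was a little higher than 2.3MW before rounding.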

Otherwise, Roadrunner is air-cooled, utilizing large copper heat sinks and variable-speed fans.

Despite Roadrunner's quantum leap into petascale computing, it is merely the beginning of an exciting trend. IBM's Grice spoke of efforts in Europe to re-invigorate supercomputing there, with plans in the pipeline for multi-petaflop machines on-line by 2010. IBM also is planning in the tens of petaflops with Los Alamos and Sandia National Laboratories, including a 50-petaflop machine slated for delivery in 2012 or 2013. “We're going to have an exaflop in 11 years”, adds Grice, “so we just have to figure out how to power it”. The trend has been amazingly linear, and given the advances in hybrid computing, it likely will continue unabated.

Roadrunner also will raise expectations, and hybrid computing will trickle down, making the once-impossible possible. Climate-change scientists will add more elements to their models, pharmaceutical companies will model the effects of drugs in the body, and Hollywood's special effects will become even more mind-blowing.

As this future unfolds, the Roadrunner teams at IBM and Los Alamos National Lab should be confident in their accomplishment of building the world's fastest supercomputer—the first-ever petaflop machine. It was an incredible achievement in planning, hardware, software and logistics that has set the global standard for supercomputing. It will be interesting to see what the team will accomplish next.