Some of the world's most widely spoken languages are the hardest to support on a computer. Here's how support is coming together for Hindi, Malayalam and other languages of the Indian subcontinent.

South Asia, home to nearly one-sixth of humanity, is struggling to attain regional language solutions that would make computing accessible to everyone. Even though most of the region's people are poor and have little purchasing power, such solutions could open the floodgates to wider access to computing power and much-needed efficiency in a critical area of the globe. Some even call Indic and other South Asian scripts the final challenge for full internationalization (i18n) support.

Some Indian regional languages have more speakers than the national languages of entire countries elsewhere. Hindi, with 366 million speakers, is second only to Mandarin Chinese. Telugu has 69 million; Marathi, 68 million; and Tamil, 66 million. Sixteen of the world's 70 most widely spoken languages are Indian languages with more than 10 million speakers each. Some languages spoken in India are also spoken elsewhere: Bengali has 207 million speakers in India and Bangladesh, and Urdu has 60 million in Pakistan and India.

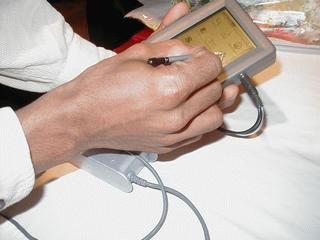

The Simputer is a simple and relatively inexpensive Linux computer for people in Indian villages. It is being produced under a hardware license, the Simputer General Public License, modeled on the GPL. Although the license provides for free publication of the specifications, it requires licensees to pay a one-time royalty before they sell Simputers.

The Simputer features a 200MHz StrongARM processor, 32MB of DRAM, 24MB of Flash storage, a monochrome display, speaker and microphone.

dhvani is a text-to-speech system for Indian languages developed by Simputer Trust developers and others. Its developers promise a better phonetic engine, a Java port and a language-independent framework soon. (See sourceforge.net/projects/dhvani.) Meanwhile, IMLI is a browser created by the Simputer Trust for the IML markup language. It is designed to make Indian language content easy to create and is integrated with the text-to-speech engine.

In Kerala, a southern state with an impressive 90% literacy rate whose language Malayalam is spoken by 35 million people, senior local government official Ajay Kumar (kumarajay1111@yahoo.com) is leading an initiative to make GNU/Linux Malayalam-friendly: “We propose to develop a renderer for our language. Specifically, we are looking for a renderer for Pango (the generic engine used with the GTK toolkit).”

He adds that within nine months, “we want to create an atmosphere where language computing in Malayalam improves.” He also says, “We are confident that once we deliver the basic framework, others will start localizing more applications in Malayalam.”

At the toolkit level, GTK and Qt are the most widely used. GTK already has a good framework through the Pango Project and offers basic support for Indian languages. Qt now also supports Unicode for all languages, but its rendering is not yet ready.

International efforts also are helping India. Yudit, the free Unicode text editor, now offers support for three South Indian languages: Malayalam, Kannada and Telugu. Delhi-based GNU/Linux veteran Raj Mathur commented, “The current version of Yudit has complete support for Malayalam and other Indic languages. It can also use OpenType layout tables of Malayalam fonts. I think Yudit is the first application that can use OpenType tables for Malayalam.”

K Ratheesh was a student at the Indian Institute of Technology-Madras (in the South Indian city of Chennai) when he worked on enabling the GNU/Linux console for local languages a couple of years ago. He said:

As the [then] current PSF format didn't support variable width fonts, I have made a patch in the console driver so that it will load a user-defined multiglyph mapping table so that multiple glyphs can be displayed for a single character code. All editing operations also will be taken care of.

In Indian languages, there are various consonant/vowel modifiers that result in complex character clusters. “So I have extended the patch to load user-defined, context-sensitive parse rules for glyphs and character codes as well. Again, all editing operations will behave according to the parse rule specifications”, Ratheesh commented.
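The idea of parse rules that group code points into clusters can be illustrated with a minimal Python sketch. It assumes Unicode-encoded text, which Ratheesh's patch did not use (it loaded its own user-defined tables), and the helper names `is_virama` and `clusters` are our own. The rules are deliberately simplified: combining marks join the preceding cluster, and a letter that follows a virama joins it too, forming a conjunct; joiners (ZWJ/ZWNJ) and script-specific exceptions are ignored.

```python
import unicodedata

def is_virama(ch):
    # Each Indic script has its own virama, e.g. "DEVANAGARI SIGN VIRAMA".
    return unicodedata.name(ch, "").endswith("SIGN VIRAMA")

def clusters(text):
    """Split text into rough character clusters (simplified rule set)."""
    out = []
    join_next = False
    for ch in text:
        cat = unicodedata.category(ch)
        # Vowel signs and other combining marks (Mn, Mc) attach to the
        # preceding cluster; a letter (Lo) right after a virama joins it too.
        if out and (cat in ("Mn", "Mc") or (join_next and cat == "Lo")):
            out[-1] += ch
        else:
            out.append(ch)
        join_next = is_virama(ch)
    return out

# क्षमा (kshamaa): five code points, but only two visual clusters.
word = "\u0915\u094d\u0937\u092e\u093e"
print(len(word), clusters(word))
```

An editor that moves the cursor or deletes by cluster, rather than by raw code point, avoids leaving a stray vowel sign or virama behind, which is the kind of behavior Ratheesh's parse rules were meant to guarantee.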

Ratheesh also said, “Even though the patch has been developed keeping Indian languages in mind, I feel it will be applicable to many other languages (such as Chinese) that require wider fonts on console or user-defined parsing at I/O level.”

The package, containing the patch, some documentation, utilities and sample files, weighed in at around 100KB at the time.

Many issues need to be tackled in the search for solutions. For instance, which languages should be tackled first? Joseph Koshy (JKoshy@FreeBSD.ORG), HP's Bangalore-based technical consultant, argues that the North Indian Hindi family promises the greatest reach in terms of population. However, he feels the southern languages (Kannada, Telugu, Tamil and Malayalam) offer the greatest promise of real-world deployability, because the support infrastructure needed to deploy an effective IT solution appears to be better in South India.

Outside of his work life at HP, Koshy is a volunteer developer of the FreeBSD operating system and one of the founders of the Indic-Computing Project on SourceForge. Koshy says:

What I am interested in is helping make standards-based, interoperable computing for Indian languages a reality. This dream is bigger than any one operating system or any one computing platform. I want to see pagers, telephones, PDAs and other devices that have not been invented yet interacting with our people in our native languages.

But others have different views. CV Radhakrishnan (cvr@river-valley.org), a TeX programmer who has contributed packages to the Comprehensive TeX Archive Network and runs River Valley Technologies in South India, says:

I think most of the South Indian languages could pose problems [because of] their nonlinear nature. For example, to create conjunct glyphs one has to go back and forth, while North Indian languages do not have this problem. Malayalam has peculiar characters called half consonants [“chillu”]. There is no equivalent for this in other languages. This raises severe computing/programming challenges.
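What “going back and forth” means can be seen at the level of stored text. In Unicode (assumed here; software of this period often used font-specific encodings instead), Malayalam is stored in logical order, so a vowel sign drawn to the left of its consonant is still stored after it, and the renderer must reorder it. The Python sketch below prints the stored code points and applies a deliberately simplified reordering; `visual_order` is a hypothetical helper, not part of any real renderer. It also shows how a chillu was long encoded as a sequence ending in ZERO WIDTH JOINER, while Unicode 5.1 and later also provide atomic chillu characters.

```python
import unicodedata

# Dependent vowel signs that Malayalam draws BEFORE (left of) the consonant.
PRE_BASE = {"\u0d46", "\u0d47", "\u0d48"}  # VOWEL SIGN E, EE, AI

def describe(s):
    return [f"U+{ord(c):04X} {unicodedata.name(c)}" for c in s]

def visual_order(s):
    """Move each pre-base vowel sign in front of the character before it.

    Simplified: a real renderer moves the sign in front of the whole
    consonant cluster, not just the preceding code point.
    """
    out = []
    for ch in s:
        if ch in PRE_BASE and out:
            out.insert(len(out) - 1, ch)
        else:
            out.append(ch)
    return "".join(out)

ke = "\u0d15\u0d46"  # കെ (ke): stored as KA, then VOWEL SIGN E
print(describe(ke))
print(describe(visual_order(ke)))

# Chillu N written as NA + VIRAMA + ZERO WIDTH JOINER, and the atomic
# character that Unicode 5.1 added for it.
chillu_n_sequence = "\u0d28\u0d4d\u200d"
chillu_n_atomic = "\u0d7b"
print(describe(chillu_n_sequence))
print(describe(chillu_n_atomic))
```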

According to Koshy, of the 18 major “scheduled” languages (those listed in the Eighth Schedule of India's Constitution), all except the Devanagari-based ones, which use the same script as Hindi, have serious problems when it comes to representing and processing them on a computer.

Proprietary OSes have trouble with Indian language support too, says Edward Cherlin (edward@webforhumans.com), who creates multilingual web sites: “Progress is slow at Microsoft and Apple. Linux should pass them by the end of the year, or early in 2003.”

On GNU/Linux, Cherlin points out, you can volunteer to Indicize any application. In the future, when font management and rendering are standardized, all applications will run in Indian languages for input and output without further ado, and anyone will be able to create a localization file to customize the user interface. According to Cherlin, volunteers also are needed to translate documentation.

Additionally, Cherlin says, “Apple and Microsoft are not willing simply to support typing, display and printing. They will not release language and writing system support until they have complete locales built, preferably including a dictionary and spell checker.” Linux is under no such constraints.

He points out that the Free Standards Group and Li18nux.org are proposing to rationalize and simplify i18n support under X, including a common rendering engine, shared font paths and other standards that will greatly simplify the business of supporting all writing systems and all languages. Cherlin feels that Yudit and Emacs both support several Indic scripts and could be extended with only moderate effort by a few experts.

Microsoft's Windows XP has Indian language support based on the current Unicode version (3.x) and hence suffers from all the problems of Unicode-based solutions: inability to represent all the characters of some Indian languages and awkwardness in text processing.

“When Microsoft came up with the South Asian edition of MS-Word, the fonts had a lot of problems. Mostly, words were rendered as separate letters with space in between and not combined together as is the case with most Indian languages”, says PicoPeta language technology specialist Kalika Bali. PicoPeta is one of the firms working to create the Simputer.

However, Dr UB Pavanaja (pavanaja@vishvakannada.com), a former scientist now widely known for his determined work promoting computing in Kannada, an influential South Indian language (www.vishvakannada.com), sees the progress as “quite remarkable, compared with the scene about two years ago”. Pavanaja says, “Current pricing and product activation of [Windows] XP may become a boon for GNU/Linux.”

Mandrake Linux includes Bengali, Gujarati, Gurmukhi, Hindi Devanagari and Tamil out of the box. That leaves Oriya, Malayalam, Telugu and Kannada yet to be done, along with the Indic-derived Lao, Sinhala, Myanmar and Khmer. Tibetan and Thai are moderately well supported, Cherlin contends.

“Recently, localization efforts are picking up”, agrees Nagarajuna G, a scientist and free software advocate in Mumbai. He also notes:

If the government or other funding agencies can spare any amount to bodies like the Free Software Foundation of India and others who are active in the localization initiative, the developers could work with obsession and make this happen very fast. FSFIndia is presently working with the Kerala government to produce Malayalam support for the GNOME desktop.

For desktop-class machines, current font technology (TrueType, OpenType, Type 1, etc.) can handle Indic scripts. The availability of quality fonts is another matter, but as Koshy puts it, this is not really a showstopper. Display technology for Indian languages on embedded devices, such as pagers and other small devices, is not well developed.

Languages like Urdu and Sindhi have right-to-left scripts that look similar to Arabic but are, in fact, different, says Prakash Advani, who launched the FreeOS.com initiative some years back. Urdu is the main language of Pakistan but is also used in India.

Satish Babu (sb@inapp.com), a free software enthusiast and vice president of InApp, an Indo-US software company dealing with free and open-source solutions, points to other problems, such as collation (sorting) order confusion (oftentimes, there is no unique “natural” collation order, and one has to be adopted through standardization).
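A small Python example shows how much the choice of order matters, assuming Unicode text. Python's default sort compares raw code points, which places क़ (qa, U+0958) after ह (ha, U+0939), while decomposing to NFD first turns क़ into क plus nukta and puts it next to क (ka). NFD is used here only as a simple stand-in; a production system would rely on a standardized, tailored collation such as one based on the Unicode Collation Algorithm.

```python
import unicodedata

def codepoint_sort(words):
    # Plain string comparison: ordered by raw code point values.
    return sorted(words)

def nfd_sort(words):
    # Decompose first: QA (U+0958) becomes KA + NUKTA (U+0915 U+093C),
    # so it sorts right after KA instead of after HA (U+0939).
    return sorted(words, key=lambda w: unicodedata.normalize("NFD", w))

words = ["\u0939", "\u0958", "\u0915"]  # ह (ha), क़ (qa), क (ka)
print(codepoint_sort(words))
print(nfd_sort(words))
```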

Other problems include the unavailability of dictionaries and thesauri in Indian languages; issues arising from multiple correct spellings of the same word; encoding standardization (Unicode), which will, among other things, facilitate transliteration between Indian languages; program support (databases, spreadsheets) for sorting and searching two-byte strings; and the lack of support for some languages (e.g., Tulu, Konkani, Haryanvi and Bhojpuri) that are the mother tongues of sections of India's population.
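Unicode's main Indic blocks (Devanagari through Malayalam) follow the layout of the earlier ISCII standard, so letters with the same sound often sit at the same offset within each 128-code-point block. The Python sketch below performs naive transliteration by offset under that assumption; it is an illustration, not a complete scheme. Scripts differ in their letter inventories (Tamil, for example, has no separate aspirated consonants), so unassigned target slots are passed through unchanged, and a real transliterator would need per-script exception tables.

```python
import unicodedata

# Start of each script's 128-code-point Unicode block. These blocks follow
# the ISCII-derived layout, so many letters sit at the same offset.
BLOCK_START = {
    "devanagari": 0x0900,
    "bengali": 0x0980,
    "gurmukhi": 0x0A00,
    "gujarati": 0x0A80,
    "oriya": 0x0B00,
    "tamil": 0x0B80,
    "telugu": 0x0C00,
    "kannada": 0x0C80,
    "malayalam": 0x0D00,
}

def transliterate(text, src, dst):
    """Naively map text from one Indic block to another by offset."""
    s, d = BLOCK_START[src], BLOCK_START[dst]
    out = []
    for ch in text:
        cp = ord(ch)
        if s <= cp < s + 0x80:
            target = chr(cp - s + d)
            # Keep the original character if the target slot is unassigned.
            out.append(target if unicodedata.name(target, "") else ch)
        else:
            out.append(ch)  # spaces, punctuation, other scripts pass through
    return "".join(out)

print(transliterate("\u0915\u092e\u0932", "devanagari", "kannada"))  # कमल -> ಕಮಲ
```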

Ravikant (ravikant@sarai.net), who taught history at Delhi University before moving to the Language and New Media Project of sarai.net (www.sarai.net), says, “The long-term solution is of course Unicode.” In the short term, he suggests developing the existing packages further “in a manner that people can use them with freedom from OSes and fonts”. ITRANS and WRITE32, transliteration packages written by Indians settled abroad, already do so. The LaTeX-Devnag package is being used and promoted by Mahatma Gandhi International University, Delhi.
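ITRANS-style packages let users type Indian languages in plain ASCII and convert the result to the native script. The Python sketch below shows only the core idea, under stated assumptions: the tiny mapping table and the function names are hypothetical and are not ITRANS's actual scheme. What it does reflect is how Devanagari itself works: every consonant carries an inherent “a”, other vowels attach as dependent signs, and a consonant with no following vowel takes a virama.

```python
# HYPOTHETICAL, much-reduced table; this is NOT ITRANS's actual mapping.
CONSONANTS = {
    "kh": "\u0916", "k": "\u0915", "g": "\u0917", "t": "\u0924",
    "n": "\u0928", "m": "\u092e", "r": "\u0930", "l": "\u0932",
}
# Each vowel: (independent letter, dependent sign); "a" is inherent, so no sign.
VOWELS = {
    "aa": ("\u0906", "\u093e"), "a": ("\u0905", None),
    "ii": ("\u0908", "\u0940"), "i": ("\u0907", "\u093f"),
    "uu": ("\u090a", "\u0942"), "u": ("\u0909", "\u0941"),
}
VIRAMA = "\u094d"
# Longest keys first, so "kh" wins over "k" and "aa" over "a".
KEYS = sorted(list(CONSONANTS) + list(VOWELS), key=len, reverse=True)

def tokenize(text):
    tokens, i = [], 0
    while i < len(text):
        for key in KEYS:
            if text.startswith(key, i):
                tokens.append(key)
                i += len(key)
                break
        else:
            tokens.append(text[i])  # unknown characters pass through
            i += 1
    return tokens

def to_devanagari(text):
    tokens = tokenize(text)
    out = []
    for idx, tok in enumerate(tokens):
        prev = tokens[idx - 1] if idx > 0 else None
        nxt = tokens[idx + 1] if idx + 1 < len(tokens) else None
        if tok in CONSONANTS:
            out.append(CONSONANTS[tok])
            if nxt not in VOWELS:
                out.append(VIRAMA)  # no vowel follows: suppress inherent "a"
        elif tok in VOWELS:
            letter, sign = VOWELS[tok]
            if prev in CONSONANTS:
                if sign:
                    out.append(sign)  # vowel sign attaches to the consonant
            else:
                out.append(letter)  # word-initial or after a vowel: full letter
        else:
            out.append(tok)
    return "".join(out)

print(to_devanagari("kamala"))  # कमल
```

With this table, “kaalii” becomes काली and “gam” becomes गम्; whether a word-final consonant should keep its inherent vowel is exactly the kind of convention a real scheme has to standardize.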

Says Prakash Advani (prakash@netcore.co.in) of the FreeOS.com initiative: “There is definitely a market for Indian language computing that exists today, but there is a huge untapped market: 95% of the population doesn't read or write English. If we can provide a low-cost Indian language computer, it will be a killer.”

Advani also says, “The biggest challenge is not technical but a lack of standards. Till Unicode happened, there was a complete lack of standards. Everyone was following their own standards of input, storage and output of data.”

There is a lack of free Indian language fonts. “There are over 5,000 commercial Indian language fonts, but there are probably ten Free (GPL/royalty free) Indian language fonts. This is a serious issue, and more efforts should be made to release free fonts”, Advani adds.

Fonts, others point out, are another mess altogether. Most current implementations rely on glyph locations to display and store information. For instance, to represent the letter “a”, what is stored is the position of “a” in the particular font used by that package. This differs from English, where the ASCII standard specifies that “a” is always represented by the number 97 (and “A” by 65). No such standard exists for Indian languages, so a document written in one application cannot be opened in another. This is also why authors of Indian language web pages must specify particular fonts.
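The difference between storing glyph positions and storing characters fits in a few lines of Python. The two font tables and their slot numbers below are made up for illustration; real legacy Indian fonts each had their own layouts. The same stored bytes produce different text depending on which font reads them, whereas a character code such as an ASCII value or a Unicode code point identifies the letter itself.

```python
# Two HYPOTHETICAL legacy fonts that draw different letters at the same slot.
FONT_X = {0x6B: "\u0915", 0x67: "\u0917"}  # slot 0x6B draws KA, 0x67 draws GA
FONT_Y = {0x6B: "\u0917", 0x67: "\u0915"}  # the same slots, swapped

def read_with_font(data, font):
    """Interpret stored bytes as glyph slots of one particular font."""
    return "".join(font.get(b, "?") for b in data)

stored = bytes([0x6B, 0x67])
print(read_with_font(stored, FONT_X))  # क then ग
print(read_with_font(stored, FONT_Y))  # ग then क: same bytes, different text

# With a character encoding, the number identifies the letter itself.
print(ord("a"), ord("A"))  # ASCII: 97 and 65
print(hex(ord("\u0915")))  # Unicode: DEVANAGARI LETTER KA is U+0915
```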

Vendors use this situation to lock customers in to a particular product. It also restricts Indian language e-mail to situations where both parties use the same web interface or program.

TUGIndia, which Raj Mathur represents, has procured a Malayalam font (Keli) from font designer Hashim. The font is currently available at www.linuxense.com/oss/keli.volt.zip. This is the OpenType source for the Malayalam font. You can edit and improve the font tables with Microsoft VOLT. The font tables are released under the GNU GPL, and the glyph shapes can be changed provided that the fonts are redistributed under a different name. Mathur works as an engineer at Linuxense Information Systems and leads the Indian TeX Users Group's localization project.

G Karunakar, another young developer with a keen interest in this field, says, “There are very few people in India who understand font technology completely, so most available fonts are buggy. Due to a lack of font standard, our fonts are not tagged as Indian language fonts.”

Right now, a general consensus seems to be building around OpenType as the appropriate technology for Indian language fonts. There is already a free Devanagari font (“Raghu”, by Dr RK Joshi of NCST, used in Indix), a Kannada OpenType font from KGP, and others for Malayalam, Telugu and Bengali.

The Indian TeX Users Group currently has a project to fund font designers in all the Indian languages who are willing to create fonts and release them under the GPL. Through it, the group has secured “Keli”, a Malayalam font family in various weights and shapes, created by Hashim and released under the GPL. “We do hope to get more fonts in other languages to fill in the gaps. We hope to use the savings generated with TUG2002 (which was held in India in September 2002) exclusively for this purpose”, says Radhakrishnan in Thiruvananthapuram.

“A lot of the know-how exists in rare books, which are difficult to get. A lot of research work done by scholars, linguists, typographers, etc., is going untapped, because we don't know of it, or the people who know it,” adds Karunakar.

Shrinath (shrinath@konark.ncst.ernet.in), a senior staff scientist at Mumbai's NCST, which has done some interesting work on this subject, says:

We want Indian language programming to be as simple as programming in English is today. Almost every company has to re-invent the wheel or buy costly solutions from others. In English, the OS supports it. It's a chicken-and-egg problem. If there are applications in Indic, OS vendors will build the fundamental capabilities into the OS, and if the capabilities are built in, there will be more applications.

“English has been the de facto language for software development as well as usage. So there is a long way to go. China is working quickly on that end, and so can we”, argues Girish S, an electronics engineer from the central Indian region of Madhya Pradesh, who set up ApnaJabalpur.com.

There are other needs too, such as dictionaries and spell checkers. Word breaking doesn't work the same way in Indic scripts as in the Latin alphabet, and neither does fine typography, which you don't find in consumer or office applications in any language. Finally, there is the challenge of sheer numbers: India is believed to have 1,652 mother tongues, of which 33 are spoken by more than 100,000 people each.

At the time of this writing, a number of key proponents of Indianization are planning to meet in the southern city of Bangalore at the end of September to discuss local language development tools, applications and content. The meeting is planned for 20-25 core participants.

“We also hope that this broad coalition would play a facilitatory role in helping local language groups interact more effectively with international standards processes and forums, such as the Unicode Consortium and W3C”, says Tapan Parikh, one of the organizers.