How tools like SuSE's YaST2, Red Hat's up2date and Debian's apt-get can help you maintain some measure of patch sanity.

Security is a moving target and chasing it can be positively dizzying. One of the most unrelenting and tedious security tasks on powerful systems like Linux is keeping its myriad applications, commands and libraries current. As anyone who subscribes to the BugTraq mailing list can attest, new software vulnerabilities are constantly being discovered, exploited and patched against. But given the hundreds of software packages installed by the typical Linux distribution, how can you ever hope to keep up?

The bad news is, you can't. Even if you had a way of instantly patching against every new vulnerability published on every vulnerability and incident report mailing list, sooner or later you would become the subject of an incident report yourself; some vulnerabilities don't become common knowledge until they've been exploited.

The good news is that since you can't possibly keep up, any progress you do make is a win. If you get cracked via a three-week-old software vulnerability, at least you're more elite than someone who gets cracked via a three-year-old 'sploit. (On the other hand, either way you're cracked.)

In all seriousness, though, from a purely statistical standpoint, fewer unpatched bugs means fewer exploitable vulnerabilities. Despite how thankless and endless the task seems, it is worth trying to keep your Linux software current. And luckily, recent versions of popular Linux distributions include new tools for automating much of this task, including SuSE's YaST2, Red Hat's up2date and Debian's apt-get. (Some of these tools are even secure!)

I'll start with a key piece of advice that I've arrived at after years of skepticism: wherever possible, stick to your distribution's supported packages. To many people, this probably sounds obvious; why build from source if you don't have to? But to some of us old-school types, building from source is as much a habit as a skill. Maybe this is because way back in the early 1990s (you know, back before we had Slashdot or powered flight), there was a lot less software for Linux than there is now, which meant that many of the things we ran we had to build from sources developed on other platforms. Additionally, the whole concept of packaging Linux into distributions was much less mature: there were fewer distributions, and they didn't change nearly as frequently.

(Have you ever had to compile ps from source because your brand-new kernel wasn't backward-compatible with the older version of ps from your distribution? Some of us have. We also had to walk ten miles to school each day through snake-filled swamps, etc.)

But things are different now. Don't get me wrong: I don't mean that we should all be slaves to binary packages. Sometimes you need features that are available in the very latest version of an application but not in your distribution's version of it; sometimes you want a leaner-and-meaner build than the one-size-fits-all juggernaut that your distribution provides. However, there are some important advantages to sticking with binary packages most of the time.

The first is convenience: downloading a large group of applications from a single site is faster and easier than downloading each from its developer's site (which is why we have distributions in the first place), and installing a binary package is much faster and less prone to mishaps than compiling from scratch. Convenience is not something we *nix bigots admit to valuing, but there it is.

The second advantage of packages is stability: the major distribution packagers put a good deal of testing and research into deciding whether to include a given application in their distribution, and if so, which version is the most stable and which compile-time options best suit their distribution. There have been notable exceptions to this, but most distributions nowadays do quite well with quality assurance.

Stability is unquestionably a big factor in security: where there are bugs there are vulnerabilities. Even bugs that don't have obvious security ramifications often can be exploited in, for example, denial-of-service attacks (the object of which is to crash a system, which some bugs do effectively).

This leads us to one of the more frustrating paradoxes in application security: although some of the most widespread vulnerabilities on the Internet stem from poorly maintained applications (i.e., obsolete and/or known-vulnerable versions), newer is not always better. The security community has rightfully lambasted Microsoft over the years for being slow to acknowledge and provide patches against security vulnerabilities in their products. But we complain equally bitterly when Microsoft does provide a speedy patch that affects stability, because the instability potentially negates any benefit derived from fixing the original bug.

Ignoring for a moment stability's desirability in its own right, ask yourself this: supposing an application has an obscure buffer-overflow condition that is theoretically exploitable for root privileges (but only by a skilled assembly programmer with a working knowledge of the RC4 stream cipher), and you patch it with code that introduces a denial-of-service opportunity that can be exploited even by attention-span-deficit script kiddies—how much security have you really gained? The answer to this will vary depending on the precise circumstances and on who you ask, but the important point is that software upgrades often have ramifications of their own.

I'm beating this point to death, but it's an important point because it debunks the notion of instant software updates being some sort of panacea. (Plus it's been on my mind for a long time; it's taken me years to fully understand why, for example, the very latest version of OpenBSD still ships with a hack of BIND v.4.9.8, which in computer/dog years is really ancient.)

It's also important because, getting back to my original topic, there's something to be said for letting your Linux distributor make the difficult patching decisions, and thus for waiting to patch a vulnerable application until your distribution releases an official (and hopefully tested) patch, which of course is part of the deal when you rely on binary packages rather than cold hard source code. It's difficult enough to keep up with Linux distributors' application updates; I can only imagine how futile it would be to patch and recompile all my critical applications myself and then wait anxiously to see whether the patching broke anything else.

So to summarize these pearls of wisdom (at least I hope they're pearls, instead of some other small spherical secretion): keeping current is a virtue, using binary packages is a virtuous form of laziness (props to Larry Wall) and relying on secondhand security updates from your Linux distributor rather than “going cowboy” isn't laziness at all, it's prudence.

Before I talk about automatic update tools, let's talk about different kinds of systems for a moment. A bastion host connected to the Internet has a much more compelling need for the immediate application of security updates than a desktop system behind a firewall. Use common sense in deciding on which systems you're going to expend the necessary time and effort to keep strictly current, and for which systems you're willing to sustain a little risk by not being as painstaking.

Another thing to consider with regard to systems' roles is on which hosts you can comfortably run an X-based update tool such as YaST2. Bastion hosts (publicly accessible ones) shouldn't run X, generally speaking. If you really like YaST2's ease of use and overall convenience, run it on an internal system, configure it to save updates and push those updates out to your bastion hosts via scp.
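As a rough sketch of that push step, assuming the internal system has saved its update packages as RPM files in one directory and that the bastion hosts accept SSH connections, a small shell function could copy each package out with scp. The function name, the DRY_RUN variable and the host names and paths in the example are all hypothetical, chosen only for illustration.

```shell
#!/bin/sh
# Sketch: copy saved update packages to each bastion host with scp.
# The function name, DRY_RUN and all hosts/paths are made-up examples.

push_updates() {
    # $1 = local directory holding the saved packages
    # $2 = remote directory to copy them into
    # remaining arguments = hosts to push to
    src_dir=$1
    dest_dir=$2
    shift 2
    for host in "$@"; do
        for pkg in "$src_dir"/*.rpm; do
            # Skip the unexpanded pattern when the directory has no packages.
            [ -e "$pkg" ] || continue
            if [ "${DRY_RUN:-0}" = 1 ]; then
                # Only show what would be copied.
                echo "scp $pkg $host:$dest_dir/"
            else
                scp "$pkg" "$host:$dest_dir/" || return 1
            fi
        done
    done
}

# Example (hypothetical hosts and paths):
#   DRY_RUN=1 push_updates /usr/pkg/updates /tmp/updates bastion1 bastion2
```

Running it first with DRY_RUN=1 prints the scp commands without copying anything, which is a cheap way to check the host list before touching a production machine.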

Starting with Red Hat 6.2, you can use up2date to identify, download and install updated packages automatically. up2date runs in either text or X mode, so the “where may I run it?” conundrum described above doesn't really apply.

Before you can run up2date, you need to configure it. Luckily, there are two convenient and simple tools to do this. First, you need to use rhn_register to create an account on the Red Hat Network (RHN). If invoked without arguments, rhn_register runs in X mode, but you can run it in text mode by passing it the --nox flag:

bash-# rhn_register --nox

You can register one system with RHN for free, but subsequent systems registered to the same account require a fee. RHN registration is necessary for every host on which you wish to run up2date. There's nothing to stop you, however, from running up2date on one machine, saving the updated packages and pushing them to your other Red Hat systems, via scp or some other secure means, and installing them on those systems manually.

Another thing you need to know about RHN is that by default, a system registered with RHN will send RHN information about its network configuration and hardware, plus a complete list of all installed packages and their versions. This allows you to schedule automated updates from RHN and/or to have customized e-mails sent to you whenever an update is available for packages installed on your system.

Those two RHN features may be very appealing to you, and if either makes the difference between your performing regular security updates and not performing them, I for one won't criticize you. However, I personally am uncomfortable providing detailed lists of my system's setup to strangers without needing to. It isn't that I have any specific reason not to trust the fine professionals at Red Hat Network; it's just that I'd rather not have to trust them.

After all, it's really no big deal to me to subscribe to the Redhat-Watch-list, which Red Hat uses to announce new updates (listman.redhat.com), and to decide for myself whether it's necessary to run up2date. If it is necessary, up2date will automatically determine which updates are applicable to my system even if I haven't stored any system information at Red Hat. Therefore, my own practice (and recommendation) is to deselect the rhn_register options “Include information about hardware and network” and “Include RPM packages installed on this system in my System Profile”.

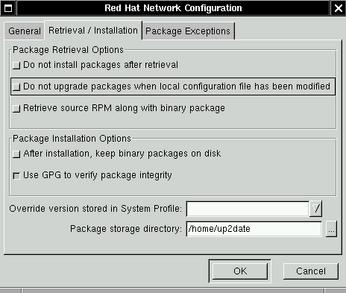

After you've registered your system with the Red Hat Network, you need to run up2date-config (Figure 1). Like rhn_register, this command supports the --nox flag if you wish to run it in text mode. up2date-config is for the most part self-explanatory, but several settings are worth mentioning.

Figure 1. Red Hat's up2date-config Tool

First, if you're going to download, store and distribute updates from a central system as described above, you'll want to select the option “After installation, keep binary packages on disk”, and specify (or at least note) the default value of “Package storage directory:”. If you run up2date-config with the --nox flag, these options are instead labeled keepAfterInstall and storageDir, respectively.

Second, be sure not to deselect the option “Use GPG to verify package integrity” (it's selected by default) unless your system is very slow. One of the niftier features of the RPM package format is its support of internal GPG signatures that can be used to verify the integrity of the RPM. up2date can do this automatically for you, provided GnuPG is installed on your system. If it is, you can also verify an RPM package's GPG signature manually like this:

rpm --checksig /path/packagefilename.rpm

where, naturally, you should replace /path/packagefilename.rpm with the full path of the RPM file you wish to check.

Sooner or later somebody will crack a Red Hat mirror site and replace some essential package with a trojaned version; when that happens, those of us who routinely check for valid RPM signatures will be far less likely to be stung.
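If you keep a directory full of downloaded packages, checking them one at a time gets tedious. The following is a minimal sketch, not a definitive tool, that runs rpm --checksig over every RPM file in a directory and reports which ones fail. The function name and the RPM_CMD override variable are hypothetical; the directory in the example is only an illustration.

```shell
#!/bin/sh
# Sketch: run "rpm --checksig" over every RPM in a directory and
# report the ones that fail. check_rpm_dir and RPM_CMD are made-up names.

check_rpm_dir() {
    # $1 = directory of downloaded packages
    failed=0
    for pkg in "$1"/*.rpm; do
        # Skip the unexpanded pattern when the directory has no packages.
        [ -e "$pkg" ] || continue
        if "${RPM_CMD:-rpm}" --checksig "$pkg" >/dev/null 2>&1; then
            echo "OK   $pkg"
        else
            echo "FAIL $pkg"
            failed=1
        fi
    done
    # Non-zero return status if any package failed verification.
    return "$failed"
}

# Example (illustrative path):
#   check_rpm_dir /usr/pkg/updates || echo "some packages failed; do not install them"
```

Because the function returns non-zero when anything fails, it can guard a later install step in a script.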

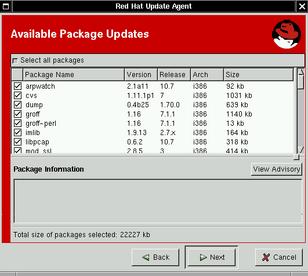

Once you've registered with RHN and run up2date-config, you can run up2date itself (Figure 2). There's not a lot to say about this; the whole point of up2date is simplicity and convenience, so you don't need me to tell you how to click its clearly labeled buttons.

Figure 2. Part of an up2date Session

I will repeat, however, my earlier advice: sign up for the Redhat-Watch-list and run up2date whenever an update is announced that is applicable to your system. By using such a slick and user-friendly tool, you have little excuse not to.

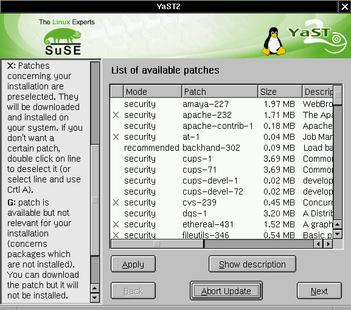

If you use SuSE Linux, you've got an even easier-to-use tool at your disposal for automated updates: YaST2, with its on-line update module (Figure 3).

Figure 3. Running On-Line Update from YaST2

Unlike Red Hat's up2date, you don't need to register with SuSE to run this, nor do you need to use or edit a separate file to configure it. The on-line update is self-contained, and in the first couple of screens of an on-line update session you can change its configuration if needed (e.g., you can select a download site that's geographically closer to you than SuSE's US-based ftp.suse.com server).

In order to know when to run the on-line update, you should subscribe to SuSE's suse-security-announce mailing list. Like the Redhat-Watch-list, this is a low-volume e-mail list, so don't worry about SuSE spamming you with frivolous notes. To subscribe see www.suse.com/en/support/mailinglists/index.html.

Honestly, there isn't much else I need to tell you about YaST2/on-line update, except for one minor problem I've had with it (an end-user error, actually, but an easy one to make). If you invoke the command yast2 manually from an xterm or a “Run command” dialog and your X session isn't being run as root, the on-line update will fail, returning a misleading error about being unable to locate the update list on the specified FTP server.

This isn't the case; actually, YaST2 needs to run as root in order to write this file after obtaining it from the FTP site. That doesn't mean you must run YaST2 only from a root X session; it means that if you don't, you should use the menu item automatically created by SuSE for YaST2, in which case you'll be prompted for the root password and the on-line update will work properly.
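If you do prefer to start YaST2 by hand, one way to avoid the misleading error is a tiny wrapper that checks for root first. This is only a sketch; the function name require_root is hypothetical, and the check relies on nothing more than the standard id -u command.

```shell
#!/bin/sh
# Sketch: refuse to start the on-line update unless running as root.
# require_root is a made-up helper name.

require_root() {
    # $1 = numeric user ID to test; defaults to the current user's
    uid=${1:-$(id -u)}
    if [ "$uid" -ne 0 ]; then
        echo "must be run as root (current uid: $uid)" >&2
        return 1
    fi
    return 0
}

# Example: only launch yast2 when the check passes.
#   require_root && yast2
```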

In my enthusiasm for up2date and YaST2, I haven't yet mentioned one other simple method for updating RPM files: the rpm command itself, which works equally well on Red Hat (and its derivatives) and SuSE. The easiest way to illustrate this method is with an example.

Suppose you receive notification of a vulnerability and available update for the fictitious SuSE or Red Hat package blorpflap, and you use the URL provided in the notice to download the updated RPM to the local path /usr/pkg/updates/blorpflap-3.2-3.rpm. First, you should verify its validity:

rpm --checksig /usr/pkg/updates/blorpflap-3.2-3.rpm

Naturally, for this to work, your distribution's signing key will need to be on your GnuPG public keyring. See my two-part series on GnuPG in the September and October 2001 issues of Linux Journal for a tutorial on using GnuPG.

If the GPG signature checks out okay (or if you assume that it does, which you're free to do at your own risk—the above step is optional), install the update:

rpm -Uvh /usr/pkg/updates/blorpflap-3.2-3.rpm

-U is short, of course, for upgrade (actually upgrade or install), and it works both for updates to previously installed packages and for new packages; -v makes the action verbose, which I like; and -h tells rpm to print out a little progress bar.
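The two steps can be chained so that the install never happens when the signature check fails. Here is a minimal sketch of that idea; the function name verified_install and the RPM_CMD override variable are hypothetical, while the rpm options are the same ones used above.

```shell
#!/bin/sh
# Sketch: install an RPM only if its signature check passes.
# verified_install and RPM_CMD are made-up names for illustration.

verified_install() {
    # $1 = path to the downloaded .rpm file
    pkg=$1
    if [ ! -f "$pkg" ]; then
        echo "no such package file: $pkg" >&2
        return 2
    fi
    if ! "${RPM_CMD:-rpm}" --checksig "$pkg"; then
        echo "signature check failed, not installing: $pkg" >&2
        return 1
    fi
    # -U upgrades or installs, -v is verbose, -h prints a progress bar.
    "${RPM_CMD:-rpm}" -Uvh "$pkg"
}

# Example, using the fictitious package from the text:
#   verified_install /usr/pkg/updates/blorpflap-3.2-3.rpm
```

Making the check mandatory in a wrapper like this removes the temptation to skip it when you are in a hurry.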

The last tool we cover this month is Debian's apt-get. As seems to be characteristic of Debian, apt-get is less flashy but in some ways even easier to use than other distributions' fancy GUI-driven equivalents. In a nutshell, there are only two steps to updating all the deb packages on your Debian system: 1) update your package list and 2) download and install the new packages. Both steps are performed by apt-get, invoked twice:

bash-# apt-get update

bash-# apt-get -u upgrade

The second command tells apt-get to download updated packages and then to install them; the -u flag makes it show the list of packages being upgraded.
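If you'd like to see what an upgrade would do before committing to it, apt-get's -s option simulates the operation without changing anything. The sketch below wraps the two steps with a preview and a confirmation prompt in between; the function name preview_and_upgrade and the APT_CMD override variable are hypothetical.

```shell
#!/bin/sh
# Sketch: refresh the package list, preview the upgrade, then apply it.
# preview_and_upgrade and APT_CMD are made-up names for illustration.

preview_and_upgrade() {
    apt=${APT_CMD:-apt-get}
    # Step 1: update the list of available packages.
    "$apt" update || return 1
    # Preview: -s simulates the upgrade without changing anything.
    "$apt" -s upgrade || return 1
    printf 'Apply these upgrades? [y/N] '
    read -r answer
    case "$answer" in
        y|Y) "$apt" -u upgrade ;;   # Step 2: download and install.
        *)   echo "Upgrade skipped." ;;
    esac
}

# Example (run as root):
#   preview_and_upgrade
```

Anything other than y or Y at the prompt leaves the system untouched, so the default is the cautious choice.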

To receive e-mail notification of Debian security vulnerabilities and updates, subscribe to debian-security-announce by filling out the on-line form at www.debian.org/MailingLists/subscribe. Run apt-get whenever you receive notice of a vulnerability applicable to your system.

By the way, as much as I love apt-get, there is one important feature missing: GPG signature validation. Unfortunately, the deb package format doesn't support signatures, and Debian packages aren't presently distributed with external signatures. Reportedly, a future version of the deb format will support GPG signatures.

That's it for this month. Good luck keeping current and staying sane!