In outer space, on the ground, and in the classroom: some exciting real-world applications developed with Linux by students and researchers at the University of Colorado in Boulder.

The Linux operating system (OS), coupled with powerful and efficient software development tools, has been the platform of choice for several research and commercial projects at the University of Colorado in Boulder. The projects include several payloads that have flown seventeen sortie missions on the NASA Space Shuttle. The payloads were built by BioServe Space Technologies, which is a NASA Center for the Commercialization of Space (CSC) and is associated with the Aerospace Department within the College of Engineering. BioServe's payloads have logged over a full year of combined operation in micro-gravity, including two extended stays on the Russian Space Station Mir. Student projects include an unmanned ground vehicle (UGV), which navigates autonomously based upon its assessment of dynamic environmental conditions.

Linux has also proven to be an invaluable teaching instrument at our university. Several classes taught in the Aerospace Engineering Sciences and Computer Science departments take advantage of the sophisticated and robust features of the Linux OS, not only as a development platform but also as a means to teach and implement advanced topics in hardware/software integration, control theory, operating systems research and systems administration. This article details several of the projects from a scientific and engineering perspective and provides an overview of common software methodologies used in the development of the complex control schemes necessary for reliable and robust operation.

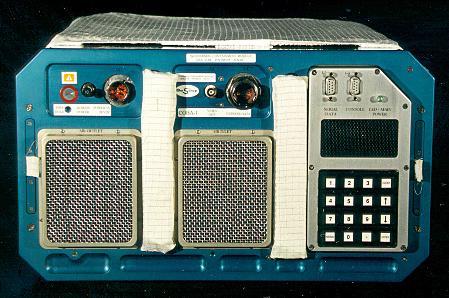

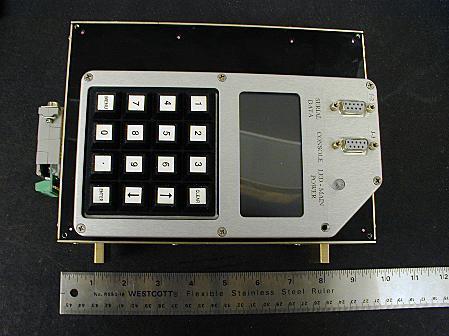

BioServe Space Technologies has built and flown several payloads that use the Linux operating system to interface with the control hardware, provide support for operator input and output devices, and provide the software development environment. The workhorse of the BioServe payload fleet is the Commercial Generic Bioprocessing Apparatus, CGBA, shown in its version 1 configuration in Figure 1. The operator data entry and visual feedback devices are located on the front panel. The keypad and mini-VGA screen are attached to an embedded control computer running Linux. The CGBA payload operates as an Isothermal Containment Module (ICM) with the capability to precisely control chamber temperature from 4 to 40 degrees C. Inside are the biological and crystal growth experiments loaded before launch. CGBA is a “single locker” payload (approximately 21"x17"x10") for insertion into either the Shuttle mid-deck or into large payload modules that fly in the Shuttle cargo bay.

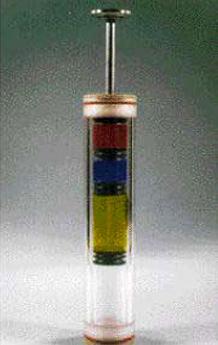

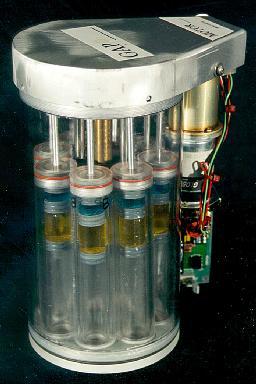

The Fluid Processing Apparatus (FPA) shown in Figure 2 is the fundamental experimental unit of the CGBA payload. It is essentially a “micro-gravity test tube” which allows controlled, sequential on-orbit mixing of two or three fluids. The fluids are contained inside a glass barrel with an internal diameter of 13.5mm. Up to eight FPAs are loaded into a motorized Group Activation Pack (GAP), shown in Figure 3. Control software initiates the mixing of liquids containing different cell culture media at specified times once the payload has attained micro-gravity. A second motor initiation, which depresses a plunger in a manner similar to a syringe, combines the cell culture mixture with a fixative reagent, which squelches further biological reaction. The flight samples are then compared with identical samples from ground-based experiments to determine the effects of micro-gravity upon fundamental biological processes. Pharmaceutical and crystallization investigations are common candidates for these types of experiments; a small increase in the efficiency of a reaction can lead to a significant increase in the desired products, and thus in a company's profit margin.

Figure 1. Commercial Generic Bioprocessing Apparatus

Figure 2. Fluid Processing Apparatus

Figure 3. Group Activation Pack

A second version of the CGBA payload features a configurable internal pallet, upon which several different habitat chambers can be built. This payload contains multiple video cameras to provide time-sequenced image recording of the habitat contents. BioServe has used this payload configuration, in conjunction with SpaceHab, Inc., to provide exciting space-based educational outreach to primary and secondary school students across the world. SpaceHab's Space Technology and Research for Students (S*T*A*R*S) program supports a wide array of potential experiments for investigation.

In July of 1999, two experiments flown in the payload onboard Shuttle mission STS-93 involved students from high schools in the United States and students from a secondary school in Chile. As shown in Figure 4, this payload had three center habitats, which contained ladybugs and aphids, while the larger chamber in the front left housed butterflies. The first experiment, of interest to students in the U.S., involved the incubation and development of butterfly pupae from the chrysalis stage to the mature butterfly. The second experiment, designed entirely by a group of teenage girls in Chile, investigated the effects of micro-gravity upon the predatory relationship of ladybugs and their favorite prey, the aphid, which is notorious for its devastation of food crops. Figure 5 shows aphids, the small black objects on immature wheat plants, before the introduction of predatory ladybugs into the habitat chamber. The same habitat after the release of the ladybugs is pictured in Figure 6, showing an (expected) decrease in aphid population. Note the ladybug in the middle right of the image as it attempts to catch an aphid in microgravity. The experiment is intended to gauge the potential for using natural predator/prey relationships as a means to minimize insect destruction of both terrestrial and space-based food crops.

Figure 4. The CGBA-02 Payload for S*T*A*R*S

Figure 5. Habitat with Aphids on Wheat

Figure 6. Same Habitat after Introduction of Ladybugs

The second S*T*A*R*S flight, to be flown aboard Shuttle flight STS-107 in 2001, will support an international array of educational science experiments. Participation in this program is literally worldwide. The educational departments of numerous countries submitted proposals for selection, from which six experiments have been chosen. Australia will fly an AstroSpider experiment, which is a collaboration between Glen Waverley Secondary College and researchers from the Royal Melbourne Institute of Technology. They will investigate how the mechanical properties of the spider's silk, which can be 100 times stronger than steel, differ when it is produced in microgravity. Jingshan High School in China has proposed an experiment which will investigate one of their country's icons, the silkworm. Their experiment will attempt to reveal the effects of microgravity on silk production and the metamorphosis process as the silkworms transform from worm-like larvae, spin cocoons and then emerge as adults. Two of the new S*T*A*R*S experiments will be a joint effort involving investigators in Japan and the United States. Each group will fly an enclosed aquatic ecosystem that contains plants and tiny aquatic animals. They hope to investigate the navigation and locomotive processes of these animals as they swim in microgravity. Israeli science students from Motzkin Ort Junior High School are designing their experiment to investigate the formation of crystals in microgravity. They hope to see differences in the shape, structure, strength and size of space-grown crystals compared with similarly grown, earth-based crystals. The final participant in this flight is Fowler High School from Syracuse, New York. In conjunction with Syracuse University, they are designing a zero-gravity ant farm. They hope to chart differences in the species' well-characterized social activities brought on by the disorientation of space flight.

Recently, a third S*T*A*R*S mission has been scheduled for the year 2002 onboard the International Space Station, which will allow longer-term scientific investigations to be conducted.

The CGBA payload, in its version 3 configuration (see Figure 7), allows for individual temperature control of each GAP container by coupling it to a heat sink (water loop) with thermoelectric modules and insulating the GAPs from one another. The water loop is distributed on the top and bottom of this locker to virtually eliminate thermal gradients and to provide efficient temperature control of the individual sample containers. These individually temperature-controlled containers allow for time-sequenced experiment initiation and termination. The National Institutes of Health will fly an experiment investigating fruit fly maturation, from larvae to adults, using this configuration. The maturation process can be effectively controlled by warming Petri dishes contained in the individually temperature-controlled GAPs at different times using pre-programmed temperature profiles. The fruit fly has a scientifically well-documented development process. Scientists have been able to genetically splice fruit fly neurons with fluorescent markers and are interested in the development of neural-based muscular activity. The research is aimed at investigating the difference in neural pathway development between space-based samples and similar ground-based control samples. The scientific goal is an increased understanding of how gravity affects neural development.
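One way to picture a pre-programmed temperature profile is as a table of time and setpoint pairs for each container. The following is only a minimal sketch under that assumption; the names `PROFILE_GAP_1` and `setpoint_at`, the step-wise hold between entries and the numbers themselves are all hypothetical and are not taken from the flight software.

```python
from bisect import bisect_right

# Hypothetical profile for one GAP: (mission elapsed hours, setpoint in deg C).
# The values are illustrative only, chosen inside the 4 to 40 deg C range.
PROFILE_GAP_1 = [(0.0, 4.0), (24.0, 25.0), (96.0, 4.0)]


def setpoint_at(profile, elapsed_hours):
    """Return the setpoint in effect at elapsed_hours (step-wise hold)."""
    times = [t for t, _ in profile]
    index = bisect_right(times, elapsed_hours) - 1
    if index < 0:
        raise ValueError("elapsed time precedes the first profile entry")
    return profile[index][1]
```

Giving each GAP its own table of this kind would let samples be warmed (started) and chilled (stopped) at different times without crew involvement.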

Figure 7. CGBA-03 Payload with Temperature Controlled Containers

The Plant Generic BioProcessing Apparatus (PGBA) (see Figures 8 and 9) is twice the volume of the “single locker” CGBA payloads described previously. It is designed to precisely control the environmental conditions of temperature, humidity and oxygen/carbon dioxide levels within the plant growth chamber for fundamental space-based research in the plant sciences arena. Plant science is fundamental for many large commercial entities, including the pharmaceutical and timber industries. Both of these commercially important industries are, in part, based upon plant-produced compounds. For example, the timber industry funds significant research programs investigating the production of lignin, which is responsible for providing structural strength for both plants and trees. From a commercial perspective, lignin is undesirable for the formation of paper products but a desirable constituent for strong timber products. In micro-gravity, a plant's need for structural strength is greatly diminished. Current research focuses upon the change in lignin production in plants and trees grown in micro-gravity to determine potential methods for regulating the compound's terrestrial in situ production.

Figure 8. Front Panel (PGBA) with Touchscreen in Lower Left

Figure 9. Wheat Plants in Environmentally Controlled PGBA

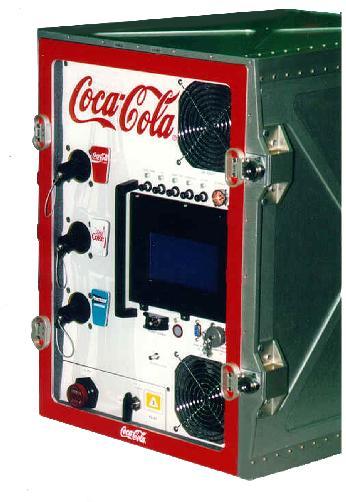

The Fluid Generic Bio-Processing Apparatus (FGBA) (see Figure 10) is interesting from both the research and commercial perspectives. FGBA was developed in conjunction with the Coca-Cola Company and was flown onboard STS-77 in 1996. FGBA was used to provide basic research about human taste perception, which changes in micro-gravity. The payload also investigated fundamental relationships pertaining to two-phase fluid flow and dispensing in microgravity, as the beverages were mixed and dispensed using pressurized carbon dioxide in a manner similar to earth-based soda fountains. From the commercial perspective, this payload demonstrated the potential for the funding of space-borne projects based not only upon their fundamental research potential, but also on their capability to bring commercial advertising dollars to the cash-strapped International Space Station.

Figure 10. (FGBA) Developed with the Coca-Cola Company

In all of the payloads, an accelerometer-based system is used to detect launch, thus allowing experiment initiation (motor-activation, temperature change, lighting conditions) immediately upon entering orbit. Additionally, automatic experiment termination can be programmed to occur at any time during the mission, including just prior to reentry, based on pre-planned (or updated) Shuttle end-of-mission time. These two capabilities combined allow an early-as-possible experiment initiation and a late-as-possible termination, since those are periods when astronaut crew availability for payload tasks is typically at a minimum.
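The article does not describe how the accelerometer data are interpreted, so the following is only an illustrative sketch of one simple approach: declare launch once the measured acceleration stays above a threshold for several consecutive samples. The function name, the 2 g threshold and the sample count are hypothetical.

```python
def detect_launch(samples_g, threshold_g=2.0, required_consecutive=5):
    """Return the index of the sample at which launch is declared, or None.

    Launch is declared once `required_consecutive` successive samples
    exceed `threshold_g`; this debouncing rejects brief handling bumps.
    """
    run = 0
    for index, magnitude in enumerate(samples_g):
        run = run + 1 if magnitude > threshold_g else 0
        if run >= required_consecutive:
            return index
    return None
```

Requiring a sustained reading rather than a single spike is a common way to avoid triggering on ground handling; a real system would also need a way to confirm arrival on orbit before starting experiments.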

Linux was chosen as the operating system for the payloads onboard the Shuttle (and soon to be International Space Station) missions for several reasons. First and foremost is its high reliability. In several years of developing different payloads, we have never encountered a problem that was operating system related. Other reasons for use of the Linux OS include:

Ease of custom kernel configuration, which enables a small kernel footprint optimized for the task at hand;

The ability to write kernel-space custom device drivers for interfacing to required peripheral hardware such as analog and digital I/O boards or framegrabbers;

The ability to optimize the required application packages resident on payload non-volatile storage media;

The availability of a powerful software development environment;

Availability of the standard UNIX-like conventions of interprocess communication (IPC) methodologies, shell scripting and multi-threaded control processes.

With the recent advances and capabilities of real-time variants of the Linux OS, critical real-time process scheduling is available (if needed) for incorporation into the software/hardware control architecture.

Because of the limited volume of these complex payloads, it is necessary to minimize the volume of every hardware item, including the communications and process control computer. In our payloads, we use a combination of small single board computers (SBC) and PC-104 form factor boards to supplement the capability of the SBC. In particular, we have had great success and outstanding reliability from Ampro, Diamond Systems, SanDisk and Ajeco products.

The computer hardware architecture we have designed and developed is generic in that the same architecture is used for all of the different payloads. The single board computer provides the CPU, FPU, video interfaces, IDE and SCSI device interfaces, along with the serial and Ethernet communications interfaces (see Figures 11 and 12).

The inside of the control computer assembly, with a single board computer and stack-on PC-104 module, is pictured in Figure 11. The unit is 6 inches wide and 2.5 inches deep. Figure 12 shows the outside front of the control computer assembly with the keypad and mini-VGA attached. Analog and digital I/O capabilities, necessary for interfacing to control hardware and sensors, are implemented via an additional PC-104 module. Imaging of the experiments within the payloads is accomplished via multiple cameras interfaced to a PC-104 frame-grabber. This generic hardware architecture allows for the integration of a complex array of sensors and control actuators. Additionally, distributed processing is implemented by attaching micro-controllers over a serial RS485 multi-drop communications bus, which facilitates the addition of new control hardware in a modular manner.

Figure 12. Keypad and Mini-VGA Screen

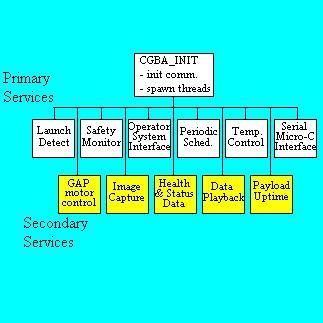

One tremendous advantage provided by the true multi-processing Linux environment is the relative ease of software development as compared to monolithic DOS systems. A similar generic, multi-threaded software control architecture is used for process control and communications for all of the projects described in this article and shown in Figure 13.

The software architecture follows the multi-threaded peer model. At start-up, the thread responsible for initialization of the entire system first spawns the processes required for payload-to-groundside communications. Next, shared resources are requested and initialized. Communication and synchronization control variables are initialized, and then a separate thread is created corresponding to the different services required from the payload. For our payloads, these services are categorized as either primary or secondary services. Primary services include those tasks which are required for the fundamental control of the payloads. Primary services for the different payloads tend to be identical in functionality, but the methods of hardware implementation can differ greatly. Secondary services are those specific to a particular payload.

From the development standpoint, the use of threads in a proven multi-process architecture facilitates multi-programmer development, module reuse and a much faster test-and-debug cycle, since processing of individual threads is easily enabled or disabled. This allows for complete testing of a single subsystem, say the chamber temperature control, in a stand-alone manner. Once completely tested, the subsystem is incorporated into the overall payload control architecture, thus minimizing adverse effects of integration.
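The start-up sequence and the ability to enable or disable individual service threads can be sketched roughly as follows. This is a simplified illustration in Python rather than the payload code; the service names, the `enabled` filter and the helper functions are hypothetical.

```python
import queue
import threading


def run_service(name, stop_event, status_queue):
    """Body of one service thread: report start-up, then idle until stopped."""
    status_queue.put(name)
    stop_event.wait()


def start_payload(primary, secondary, enabled=None):
    """Spawn one thread per enabled service and return (threads, stop, status)."""
    stop_event = threading.Event()   # shared synchronization variable
    status_queue = queue.Queue()     # shared resource, created before spawning
    threads = {}
    for name in list(primary) + list(secondary):
        if enabled is not None and name not in enabled:
            continue                 # service disabled for stand-alone testing
        thread = threading.Thread(
            target=run_service, args=(name, stop_event, status_queue), name=name
        )
        thread.start()
        threads[name] = thread
    return threads, stop_event, status_queue


def stop_payload(threads, stop_event):
    """Signal every service thread to exit and wait for all of them."""
    stop_event.set()
    for thread in threads.values():
        thread.join()
```

Calling `start_payload(["temperature", "telemetry"], ["imaging"], enabled={"temperature"})` would bring up only the temperature service, which mirrors testing a single subsystem in isolation before integrating it.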

Figure 13. Simple Chart of Software Architecture.

The communications system is implemented in a modular manner as well. Data transmission is abstracted at the programming interface level as different messages (e.g., analog data, health and status messages, images, etc.). These different-length messages are termed “channels”. A thread that wishes to transmit a message to ground stations queues (writes) a dynamic message of pre-defined length to a specified channel (see Figure 14). The channeling system prepends channel routing information and a packet checksum. Multiple channel messages are combined into a secondary packet to maximize usage of available bandwidth. This secondary packet is termed a “RIC” packet; it serves as an interface abstraction to the Rack Interface Computer, which is used onboard the International Space Station to communicate with the different payloads. RIC packet contents are also sanity checked via an additional checksum. Finally, the RIC packet is encapsulated into a TCP/IP packet for delivery to the RIC.
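The two-level framing described above can be illustrated with a short sketch. The actual header layout and checksum algorithm are not given in this article, so the three-byte header (channel id and length), the use of CRC-32 and the placement of the checksum after the data are all assumptions made for illustration.

```python
import struct
import zlib

CHANNEL_HEADER = struct.Struct("!BH")   # hypothetical: channel id, payload length
CHECKSUM = struct.Struct("!I")          # CRC-32 stands in for the real checksum


def frame_channel_message(channel_id, payload):
    """Add routing information and a checksum to one channel message."""
    body = CHANNEL_HEADER.pack(channel_id, len(payload)) + payload
    return body + CHECKSUM.pack(zlib.crc32(body))


def build_ric_packet(framed_messages):
    """Combine several framed channel messages and add an outer checksum."""
    body = b"".join(framed_messages)
    return body + CHECKSUM.pack(zlib.crc32(body))


def parse_ric_packet(packet):
    """Verify both checksum layers and return a list of (channel_id, payload)."""
    body = packet[:-CHECKSUM.size]
    (outer,) = CHECKSUM.unpack(packet[-CHECKSUM.size:])
    if zlib.crc32(body) != outer:
        raise ValueError("RIC packet checksum mismatch")
    messages, offset = [], 0
    while offset < len(body):
        channel_id, length = CHANNEL_HEADER.unpack_from(body, offset)
        end = offset + CHANNEL_HEADER.size + length
        (inner,) = CHECKSUM.unpack_from(body, end)
        if zlib.crc32(body[offset:end]) != inner:
            raise ValueError("channel message checksum mismatch")
        messages.append((channel_id, body[offset + CHANNEL_HEADER.size:end]))
        offset = end + CHECKSUM.size
    return messages
```

Packing several short channel messages into one outer packet amortizes the per-packet overhead, which is the bandwidth argument made in the text; the bytes returned by `build_ric_packet` would then be written to an ordinary TCP socket.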

Communication between the RIC and the payloads is TCP/IP based for reliable communications. Unfortunately, telemetry data transmitted from the payload to the RIC ends up being distributed on the ground from the Marshall Space Flight Center to the remote users via UDP, which is unreliable. In addition, the physical communications link in the form of the TDRSS satellite network is subject to outages as well. To minimize the potential impact of dropping packets and loss of signal caused by holes in the available TDRSS satellite network coverage, a “playback” system has been implemented that retransmits critical information to improve the probability that a complete set of telemetry data files is received properly by the ground stations.
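The benefit of a playback pass can be shown with a small simulation. How the real playback system selects and schedules data is not described here; this sketch simply assumes every message carries a sequence number (a hypothetical detail) and is sent a second time, so the ground side can merge both passes and see what is still missing.

```python
def transmit(messages, link_up):
    """Send (sequence, data) pairs; keep only those sent while the link is up."""
    return {seq: data for (seq, data), up in zip(messages, link_up) if up}


def receive_with_playback(messages, first_pass_link, playback_link):
    """Merge a live pass and a playback pass, then report what is still missing."""
    received = transmit(messages, first_pass_link)
    received.update(transmit(messages, playback_link))  # duplicates overwrite harmlessly
    missing = [seq for seq, _ in messages if seq not in received]
    return received, missing
```

A message is lost only if it falls into an outage on both passes, which is why retransmission improves the probability of a complete data set without guaranteeing it.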

Finally, one of the channels has been configured to serve as a remote shell on the payload. Input to and output from the bash shell is first redirected to a channel and then packetized as described above. In this manner a true remote shell operates on the actual payload in a manner that is functionally equivalent to establishing a remote connection via TELNET or ssh. The bash shell input and output is “tunneled” through the NASA communications scheme and allows the possibility of remote system administration activities from the ground. Just try and implement that capability under Windows! For further information concerning the design of the communications subsystem, please see the excellent article “Linux Out of the Real World”, by Sebastian Kuzminsky, which appeared in Linux Journal in July of 1997.
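The idea of tunneling shell traffic through a channel can be sketched as follows. This simplified version runs a single command line and splits the output into channel-sized pieces; it is not a persistent interactive shell, it uses `sh` rather than bash for portability, and the function name and chunk size are hypothetical.

```python
import subprocess


def run_shell_command(command, max_chunk=64):
    """Run one command line in a shell and split its output into channel-sized chunks."""
    result = subprocess.run(
        ["sh", "-c", command],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,   # interleave errors with normal output
        timeout=10,
    )
    output = result.stdout
    return [output[i:i + max_chunk] for i in range(0, len(output), max_chunk)]
```

Each chunk would then be queued to the shell channel and packetized like any other message, while commands arriving from the ground would travel the same path in the opposite direction.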

As the economy of the United States transitions from the Industrial Age to the Information Age, students from all engineering disciplines are required to enable the automation and control of large and small industrial production processes. The per dollar cost of storage media, CPU cycles and interface hardware continues to decline, allowing research agencies and for-profit companies to implement automation of their production processes in an effort to decrease costs and increase profit margin. At the College of Engineering and Sciences, located on the main campus of the University of Colorado, a new teaching paradigm has been realized with the completion of the Integrated Teaching and Learning Laboratory (ITLL). The ITLL was built as a resource for students of all engineering disciplines to facilitate the transfer of theory learned in the classroom to actual practice in the laboratory, in an effort to teach real world engineering and scientific skills.

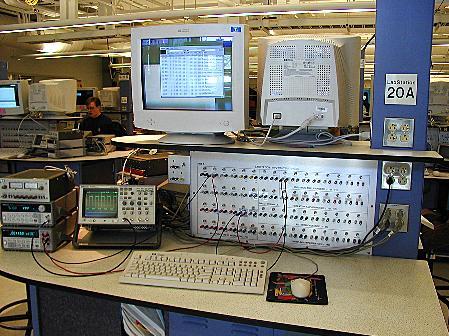

The ITLL provides the resources to allow students to design and build complex engineering projects. A typical lab station is shown in Figure 15. Each of the lab stations is equipped with a Hewlett-Packard Vectra PC with a 500MHz Pentium-III processor, 384MB of RAM, an 8GB hard drive, the usual NIC and a National Instruments analog and digital data acquisition card. Several of the 80 available systems have been configured to boot Linux and RTLinux, in addition to Windows NT. Standard test equipment at each lab station includes a waveform generator, digital multi-meter, variable-voltage power supply and oscilloscope. The breakout panel provides easy access to analog and digital IO signals and to signal-conditioning hardware. The availability of sophisticated test equipment, coupled with the capabilities of the Linux and RTLinux operating systems, has enabled students to design and construct complex, real world applications integrating both hardware and software to accomplish a specified engineering or scientific task.

Figure 15. An ITLL Workstation Running Linux/RTLinux.

One such project is the University of Colorado's Robotic Autonomous Transport, a.k.a. the RATmobile, shown in Figure 16. The RATmobile is an unmanned ground vehicle (UGV) that navigates autonomously by sensing static and dynamic features within its environment. This vehicle was designed entirely from scratch by a group of undergraduate and graduate students from several engineering disciplines with supervision and guidance from members of the Aerospace department. Design and construction of the vehicle is truly multi-disciplinary, calling upon the theory and expertise of mechanical, electrical, aerospace and computer sciences.

The vehicle uses miniature video cameras mounted on the front to supply visual information concerning the surrounding environment. Static and dynamic obstacle detection is supplemented by an array of ultrasonic sensors. The sensed environmental conditions are combined into a local coordinate frame and then translated into a world representation map that contains the totality of the robot's knowledge concerning its environment. Depending upon the task at hand, the robot is able to make use of different navigational algorithms to traverse to a desired destination.
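Translating a sensor reading from the robot's local frame into the world map is a standard planar rotation and translation. The sketch below assumes a two-dimensional world and a range/bearing style reading such as an ultrasonic sensor might give; the function and parameter names are illustrative and not taken from the RATmobile software.

```python
import math


def sensor_to_world(robot_x, robot_y, robot_heading, sensor_range, sensor_bearing):
    """Convert a range/bearing reading in the robot frame to world coordinates.

    Angles are in radians; bearing is measured counter-clockwise from the
    robot's forward axis, heading counter-clockwise from the world x axis.
    """
    local_x = sensor_range * math.cos(sensor_bearing)
    local_y = sensor_range * math.sin(sensor_bearing)
    world_x = robot_x + local_x * math.cos(robot_heading) - local_y * math.sin(robot_heading)
    world_y = robot_y + local_x * math.sin(robot_heading) + local_y * math.cos(robot_heading)
    return world_x, world_y
```

For example, a robot at (1, 2) facing along the world y axis that sees an obstacle 3 units straight ahead would place it at approximately (1, 5) in the world map.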

One such navigational mode allows for the vehicle to navigate an obstacle course that is delimited by painted white lines on a large field. The lines are approximately ten feet apart with straightaways, sharp curves and bends and even some occurrences of missing lines, which require that the navigation algorithm predict the desired vehicle direction based upon historical information. A second navigational mode makes use of differential GPS information for precise assessment of the robot's position in a world reference frame. In this mode, the vehicle has a priori knowledge of its static environment and can navigate to a predetermined location while avoiding unmapped or dynamic obstacles. Different navigational strategies, such as the optimization of path length or required path energy, are easily implemented.
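The text says only that missing lines are handled by predicting from historical information. One simple way to do that, shown here purely as an assumption, is to extrapolate the last two known line offsets linearly whenever the camera reports no line; the function name and the notion of a scalar lateral offset are hypothetical.

```python
def next_line_offset(history, observation):
    """Return the line offset to steer by, predicting it when no line is seen.

    `history` holds past offsets (most recent last) and is updated in place;
    `observation` is the measured offset, or None when the line is missing.
    """
    if observation is None:
        if len(history) >= 2:
            observation = 2 * history[-1] - history[-2]   # continue the recent trend
        elif history:
            observation = history[-1]                     # hold the last value
        else:
            observation = 0.0                             # nothing known: go straight
    history.append(observation)
    return observation
```

A practical controller would likely limit how long it trusts such predictions, since extrapolation errors grow with every missing frame.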

Linux was chosen as the operating system for this project because it met all design requirements, allowed for a multi-user development environment and was free, which is of concern to students with a limited project budget. The multi-threaded software control architecture provided by Linux allows for easy implementation of differing vehicle capabilities. The primary functions of the vehicle (visual image acquisition, obstacle detection information acquisition, combination of input sensory data into a world representation, and determination of the required navigational control outputs of velocity and steer angle) are all abstracted to different threads. If necessary, the CPU scheduling policy associated with different processes can be altered from the default scheduler, which optimizes for the average case, to allow for improved real-time performance under the standard Linux OS. In process control systems, where guaranteed scheduling at the millisecond level and below is required, a real-time Linux variant, such as RTLinux, can be used.
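Changing the scheduling policy under standard Linux is done with the POSIX `sched_setscheduler` call, which Python exposes in its `os` module on Linux. The sketch below asks for the fixed-priority `SCHED_FIFO` policy and reports whether the request succeeded; the wrapper function name is hypothetical, and the call normally needs root privileges (or the `CAP_SYS_NICE` capability).

```python
import os


def request_fifo_scheduling(priority=None):
    """Try to move the calling process to SCHED_FIFO; return True on success.

    Requires Linux and usually elevated privileges; otherwise returns False.
    """
    if not hasattr(os, "sched_setscheduler"):
        return False                     # platform without the POSIX scheduling API
    if priority is None:
        priority = os.sched_get_priority_min(os.SCHED_FIFO)
    try:
        os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(priority))
    except OSError:                      # includes PermissionError
        return False
    return True
```

A control program written in C would make the equivalent `sched_setscheduler()` call directly. A process running under `SCHED_FIFO` is not preempted by ordinary time-shared processes, so a runaway loop at this policy can starve the rest of the system; this improves latency on a standard kernel but does not provide the hard guarantees of a real-time variant.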

Figure 16. The Robotic Autonomous Transport (RATmobile)

The ITLL stations, running either Linux or RTLinux, are also used in a class emphasizing hardware/software integration skills to undergraduate and graduate students. Advanced programming constructs, in the C and C++ languages, are required by laboratory exercises that emphasize the integration of real hardware, such as motors and micro-controllers. This allows students to develop confidence and expertise not just in proper software programming practices, but in combining control hardware with the standard PC. Examples of final student projects (all using Linux or RTLinux) in this class include:

A working mockup of a spin stabilized satellite that uses image processing to determine orientation within a star field.

A directionally controlled scanning laser used to determine mechanical vibrational modes within a structure.

Transmission of real-time accelerometer data from a radio controlled airplane with subsequent real-time plotting capability.

The construction of a fluid chamber to model fluid flow through different chambers of the heart with associated real-time data gathering for analysis.

Using the Linux OS and user-configurable development environments as a basis, we are able to impart a greater breadth of knowledge to our students, which is applicable to real world engineering problems. That is a true accomplishment whose accolades belong to the numerous kernel and development programmers who have contributed to the power and success of Linux.

Linux also serves as a powerful teaching tool within our Computer Science department. The free availability of the Linux OS and associated development packages has opened the doors to a greater variety of course content in classes pertaining to Systems Administration. With a free operating system and the availability of cheap PC hardware, students have the ability to configure and administer all aspects of their very own system within a university computer lab. If something goes dramatically wrong, it is a simple matter to restore the entire disk across a network from a master system. This encourages students to experiment with no disastrous repercussions. Topics such as disk-level file recovery, device installation and management, user and file administration, kernel configuration and networking are all candidates for easily constructed exercises using the free Linux OS as the enabling technology.

Open source distribution of the Linux kernel source tree has allowed courses in Operating Systems Theory to not only teach the fundamentals but, even more importantly, allow the students to experiment with changes to the kernel code. As a result, students are offered a richer set of exercises that can be applied directly to the kernel source and not just on paper. Most assuredly, students will break their systems while experimenting, but system recovery strategies have definite educational value—better now on a cheap PC running Linux than on some multi-million dollar platform in the future. Having the kernel source allows for in-depth exercises in far-ranging topics including management of processes, resources, devices, files and memory, as well as implementation of kernel-space processes as Linux modules, scheduling policies and priorities, and fundamental synchronization methodologies. Linux enables a richer and more realistic educational experience for our students.

When designing and building complex systems that require low-level integration of software and hardware, there are numerous design trade-offs and decisions to be made. Two primary criteria are always evaluated: can the system meet the specified performance requirements, and can the system meet imposed budgetary constraints? Based upon past experience, both Linux and its real-time variants meet or exceed the typical performance metrics. The development environment is powerful and easily configurable. Operating system services are ample, and resource abstraction follows the standard, simple, UNIX-like methodology. One design issue that is of fundamental concern to companies within the commercial sector is that of technical support. With the advent of Linux-only technical support companies, along with an increased base of competent Linux programmers, this issue is dwindling in comparison to the numerous advantages of a free, open-source operating system. Because Linux is free, budgetary constraints are a non-issue. A world-class group of kernel hackers continues to provide support for cutting-edge technologies and hardware. Students of today, who are the programmers and systems managers of tomorrow, are being taught to harness the enabling power of Linux.

Linux is used for a myriad of research, commercial and educational purposes at the University of Colorado. The systems designed and built are complex and must be reliable. The payloads BioServe builds are currently scheduled to spend over three years in combined time onboard the International Space Station, and computer control, implemented under the Linux OS, is a mission-critical design specification. From the performance of past payloads, in addition to the complex projects previously described, the author is confident of continued success. Linux is the enabling resource that allows the integration of these projects. Linux has provided meaningful content for several courses taught within our Aerospace and Computer Science departments and is responsible, in part, for the success of our real-world teaching paradigm, which takes theory out of the classroom and allows for practical implementations of advanced projects and research.