A free programming system for high-end interactive computer graphics.

GNU Maverik is a system to help programmers create virtual environments (VEs). It was built by an academic research group to tackle some of the problems found when using existing approaches to VE construction. Maverik's main contribution is its framework for managing graphics and interaction, but it comes with extensive built-in functionality to make getting started straightforward. However, Maverik is a tool for programmers—it is not an end-user application.

Maverik is part of the GNU project and is distributed freely with full source under the GNU GPL. The distribution includes documentation, a tutorial and examples. Released in February 1999, Maverik has been downloaded by thousands of sites worldwide, and received positive feedback from both academic and commercial organizations.

In this article, I give some background to the challenges facing the designers of Virtual Environments and then describe how Maverik addresses some of these.

It's easy to get enthusiastic about interactive 3-D graphics, and to imagine great things—walking around inside your dream house; conversing face to face with a distant friend in a shared paradise; or rehearsing complex engineering procedures with professional colleagues around the world in a shared virtual environment.

These things are easy to imagine, but surprisingly hard to do: witness the lack of really compelling examples of this kind of technology in use. So what's the problem? Until quite recently, computer hardware and VR peripherals were seen as the limiting factor. In the last year or so, PC graphics accelerators have made dramatic strides forward as 3-D becomes a more mainstream facility. Today, inexpensive 3-D PC accelerators rival the performance of the most expensive 3-D workstations, and are beginning to include options such as economical stereo shutterglass support.

The limiting factor—the gap between what we can so easily imagine and what we can readily achieve—is now more clearly exposed as being software. Writing 3-D applications is hard work. It takes hundreds of person-hours (programmers and artists) to create the impressive games and animated film sequences we have come to expect of 3-D computer graphics. Yet film animations such as Toy Story use techniques that are way beyond what can be achieved at interactive framerates. The best of today's PC graphics cards is something like 10,000 times too slow to do Toy Story in real time. That is largely due to the complexity of the modeling and sophisticated lighting calculations used. It is possible to do impressive things in real time, as computer games demonstrate. But games rely upon methods such as texture mapping to give the illusion of complexity within a VE far beyond what is actually present. In a game, the complex metalwork and scenery that you see are mostly stage scenery: you cannot take the girder away from the wall, it's only a picture of a girder.

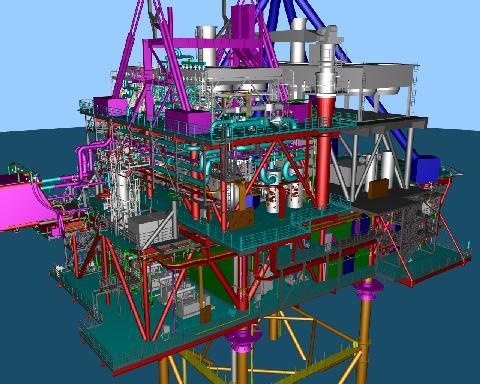

In contrast, for real engineering tasks, the complexity of the CAD models can be staggering—a model of a real offshore platform can, for example, equate to half a gigabyte of polygonal data which must somehow be processed 10-30 times per second if it is to be any use for interactive work in a VE (see Figure 1).

Figure 1. Offshore Platform

Let's think about the kind of things that need to be done to work with a model of this complexity; this will help understand the reasoning behind Maverik. To cope with the offshore platform, we need to find ways of rapidly focusing in on only those parts that need drawing at any one instant. For example, in a building with many rooms, you can quickly discard large parts of the model that you know cannot be seen from the current position. In a large “cityscape” at ground level, we could work out which buildings you can see that are not occluded by others, and rapidly discard everything else. So some application-specific tests can quickly discard a lot of irrelevant data. In some cases, those do not work; for the offshore platform, we can see between the pipes all the way across the rig, so we need a different strategy. In the limit, though, we will run out of time to render the current frame, so some bail-out option is necessary. One example would be to fall back to a wire-frame representation for more distant parts of the view (see Figure 2). That does not make such a nice still picture, but if it lets you work interactively within the VE, then it might be tremendously effective compared to a “jerky” presentation.
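The strategy described above (discard what cannot be seen, then degrade distant parts when time runs short) can be sketched as a simple frame planner. This is a minimal illustration under stated assumptions, not code from Maverik: the `Part` type, the `plan_frame` function and the cost estimates are all hypothetical, and the parts are assumed to be already sorted nearest first.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical types for illustration only; none of this is Maverik API. */
typedef enum { STYLE_SKIPPED, STYLE_SOLID, STYLE_WIREFRAME } DrawStyle;

typedef struct {
    int       visible;     /* result of an application-specific visibility test */
    double    solid_cost;  /* estimated time to draw filled polygons */
    double    wire_cost;   /* estimated time to draw as wire-frame */
    DrawStyle style;       /* filled in by plan_frame() */
} Part;

/*
 * Choose a style for each part, assuming parts[] is sorted nearest first.
 * Returns the estimated time the planned frame will take.
 */
double plan_frame(Part *parts, size_t n, double budget)
{
    double spent = 0.0;
    int degraded = 0;  /* set once solid rendering no longer fits */

    assert(parts != NULL || n == 0);

    for (size_t i = 0; i < n; i++) {
        Part *p = &parts[i];

        if (!p->visible) {               /* cheap test: discard early */
            p->style = STYLE_SKIPPED;
            continue;
        }
        if (!degraded && spent + p->solid_cost <= budget) {
            p->style = STYLE_SOLID;
            spent += p->solid_cost;
            continue;
        }
        degraded = 1;                    /* everything farther away degrades too */
        if (spent + p->wire_cost <= budget) {
            p->style = STYLE_WIREFRAME;
            spent += p->wire_cost;
        } else {
            p->style = STYLE_SKIPPED;    /* out of time: drop the part */
        }
    }
    return spent;
}
```

Once the planner has fallen back to wire-frame for one part, it keeps every more distant part at wire-frame or below, so a far object is never drawn in a richer style than a nearer one. A real system would also need to smooth the transition between styles from frame to frame.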

A promising approach is to replace the wire-frame portion with image-based rendering techniques, filling in the background with appropriate still pictures of the rig. These stills are then dynamically distorted so you do not notice that they are stills. That is a subject of current research. For a VE, it is important to make any such transitions between representations smoothly, and avoid objects “popping” between different rendering styles, which is quite disturbing.

Most of these techniques are well understood individually, but the particular combination of tricks employed to get enough speed is often highly application-specific. Putting together each frame, whilst managing other issues such as user navigation and application behaviour, is quite a challenge. At present, if you want to attempt this, you have two basic strategies available.

First, you could program the whole thing from the ground up in your favourite language and a 3-D graphics library such as Mesa or OpenGL. This gives you a lot of flexibility—you can do anything that's possible, and at the best performance attainable. The drawback is that for a sophisticated task, this can be like programming an operating system in assembler. It's too low a level, and hence hard to re-use what you write for different kinds of applications.

Your second choice is to use a proprietary package for building Virtual Worlds. Such a package will get you going quickly, and hopefully has built-in high-level support for your desires—for example, making your VE sharable between several users. To support these high-level features, the VE package has its own internal complexity and representations of the VE, which is, after all, what you are buying. The drawback here is that the complexity within the VE system may not be appropriate for what you want to do (i.e., a “one size fits all” approach may not work). If you have a complex application, it will have its own data structures and algorithms tuned to that application. In the case of the offshore platform, the CAD system that built it talks in terms of ladders, pipes, valves and so on—quite high-level descriptions. To use this data with the VE building package, it will have to be exported from the CAD system, and imported in whatever format the VE system understands internally. The common denominator is, more often than not, a “sea of polygons”. So in exporting the data into the VE system's general-purpose format, much of the interesting information on what those polygons mean is lost, and with it the potential for exploiting that information to win speed.

A second problem with this approach is that if someone within the VE manipulates the objects (disconnecting a pipe or valve for example), then somehow that has to be communicated back to your CAD application which must then update its own data structures. So you get two representations of the world, one within the application, and one within the VE system, and these have to be kept synchronized.

So an “off the shelf” VE building package does what it does, well. But as the desire for complex VEs increases, we encounter problems of programming complexity, and the inherent performance limitations of maintaining two different models of the data—one of which is probably an unwieldy sea of polygons.

Is there any middle way possible? Can we construct some framework that supports the creation and management of VEs, without limiting what can be built to some lowest common denominator?

We believe there is, and the result of our efforts is GNU Maverik, a framework for interactive VEs that has now been used successfully to implement a range of VE applications with markedly different demands and optimization strategies.

Maverik is a C toolkit that lives on top of a 3-D rendering library such as Mesa or OpenGL, providing facilities for managing a VE. It is best viewed as the next layer of functionality you would want above the raw 3-D graphics library. The novel aspect of Maverik is that it does not have its own internal data structures for the VE. Instead, it makes direct use of an application's own data structures, via a callback mechanism (rather like the way callbacks are used in window systems such as X11).

An application tells Maverik about the classes of objects it wishes to render, and supplies functions needed to do the rendering. Maverik supplies facilities for building flexible spatial management structures (SMSes) with which the registered objects are managed. As the user navigates the environment, Maverik tracks the SMSes and makes appropriate calls on the application to render its objects.
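To make the callback idea concrete, here is a minimal sketch of the pattern in plain C. It deliberately does not use the real Maverik API: `Class`, `Object`, `ObjectList`, `list_add`, `list_display` and the `Pipe` example are hypothetical names invented for illustration, and the flat array stands in for a far more capable SMS. The point is only that the framework holds a pointer to the application's data and a function that knows how to draw it.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical framework side; these names are NOT the Maverik API. */
typedef struct Object Object;
typedef int (*DrawFn)(const Object *obj);  /* returns primitives drawn */

typedef struct {
    const char *name;  /* class name registered by the application */
    DrawFn      draw;  /* application-supplied rendering callback */
} Class;

struct Object {
    const Class *cls;       /* which class this object belongs to */
    void        *app_data;  /* the application's own data, never copied */
};

/* A deliberately trivial stand-in for an SMS: a flat list of objects. */
#define MAX_OBJECTS 64
typedef struct {
    const Object *items[MAX_OBJECTS];
    size_t        count;
} ObjectList;

/* Add an object to the list; returns 0 on success, -1 if the list is full. */
int list_add(ObjectList *list, const Object *obj)
{
    assert(list != NULL && obj != NULL && obj->cls != NULL);
    if (list->count >= MAX_OBJECTS)
        return -1;
    list->items[list->count++] = obj;
    return 0;
}

/* Walk the list and call back into the application to draw each object. */
int list_display(const ObjectList *list)
{
    int total = 0;
    assert(list != NULL);
    for (size_t i = 0; i < list->count; i++) {
        const Object *obj = list->items[i];
        total += obj->cls->draw(obj);
    }
    return total;
}

/* Hypothetical application side: a CAD-style pipe in its native form. */
typedef struct {
    double length_m;
    int    segments;  /* how many cylinder segments the pipe is drawn as */
} Pipe;

int draw_pipe(const Object *obj)
{
    const Pipe *pipe = obj->app_data;
    /* A real callback would issue graphics calls here. */
    return pipe->segments;
}
```

In use, the application would build a `Class` such as `{"pipe", draw_pipe}`, wrap each of its `Pipe` records in an `Object`, and add those to the list; `list_display` then draws them without the framework ever knowing what a pipe is.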

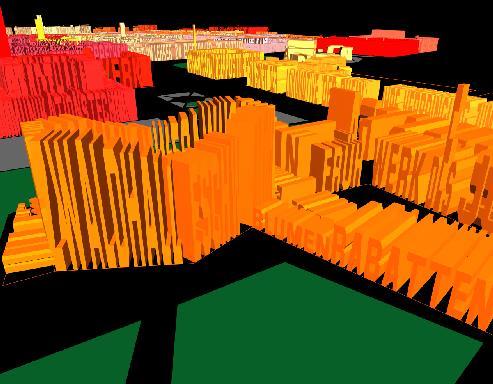

The application keeps control of its data, so there is only one copy to manage. More crucially, the application can bring all its knowledge of what the data means to bear when deciding how to render the objects; we find this is where the most telling optimizations come from. For example, if 3-D text is important to the application, then a good data structure would probably involve text strings and position information. However, exporting the 3-D text to a self-contained VE package will probably mean exporting the data in a common graphics format—usually polygons. The VE system will have a lot more data to process than we would like, which limits the size of environment we can construct.

With Maverik, the application might register the class “3-Dstring”. Later, when the user navigates around the environment, Maverik determines from the SMSes that certain objects are in view. For 3-Dstring objects, it calls the application-supplied functions to render them, and the application then generates the polygons on the fly. The application can use whatever optimizations are appropriate when generating the polygons, which makes techniques such as dynamic level of detail, and coping with objects that change their shape, straightforward. In this way, Maverik can be a general-purpose framework, and yet benefit from optimal data representations and highly application-specific optimizations.
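As a rough sketch of what generating polygons on the fly with dynamic level of detail might look like, the function below picks a representation for a text object from its distance to the viewer. Everything here is an assumption made for illustration: the `Text3D` type, the `text3d_polygons` function, the distance thresholds and the per-glyph polygon counts are hypothetical, and the function only returns how many polygons it would emit rather than drawing them.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical application data: just a string and where it stands. */
typedef struct {
    const char *text;
    double      pos[3];
} Text3D;

/* Illustrative thresholds and costs; real values would be tuned. */
#define NEAR_DIST          20.0
#define FAR_DIST           200.0
#define POLYS_PER_GLYPH_3D 12   /* extruded letter */
#define POLYS_PER_GLYPH_2D 2    /* flat quad as two triangles */
#define POLYS_WHOLE_STRING 2    /* one quad for the entire string */

/* Squared distance avoids a square root in the per-frame path. */
static double dist_sq(const double a[3], const double b[3])
{
    double sum = 0.0;
    for (int i = 0; i < 3; i++) {
        double d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

/*
 * Decide how much geometry to generate for this frame and return the
 * number of polygons that would be emitted.
 */
size_t text3d_polygons(const Text3D *t, const double eye[3])
{
    size_t glyphs;
    double d2;

    assert(t != NULL && t->text != NULL && eye != NULL);

    glyphs = strlen(t->text);
    if (glyphs == 0)
        return 0;

    d2 = dist_sq(t->pos, eye);
    if (d2 > FAR_DIST * FAR_DIST)
        return POLYS_WHOLE_STRING;           /* far: a single slab */
    if (d2 > NEAR_DIST * NEAR_DIST)
        return glyphs * POLYS_PER_GLYPH_2D;  /* middle: flat letters */
    return glyphs * POLYS_PER_GLYPH_3D;      /* near: full 3-D letters */
}
```

Because the stored data is only the string and its position, changing the text or the detail policy costs nothing in stored geometry; the polygons exist only for the frame in which they are drawn.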

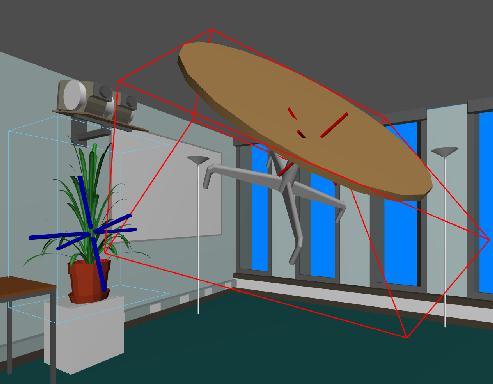

The 3-D text example is part of a real application—the “Legible City II”, a multimedia artwork by Jeffrey Shaw (ZKM Germany). It uses the above technique to render three large cities (Amsterdam, Manhattan and Karlsruhe) of words (see Figure 3). To render a more game-like environment, the application callbacks will use the more traditional games techniques to render their objects (see Figure 4).

Figure 3. Legible City II Multimedia Artwork Implemented in Maverik

Figure 4. Special-Effects Techniques Common in Games

For the oil rig, the CAD application's native data structures (pipes, valves, walkways and so forth) are used in a similar way. When the application is called to render a tower, it can decide what would be an appropriate rendering for this frame; for example, it may decide to always render the tower, because it is an important landmark for navigation. Thus, the application can change representations dynamically to suit conditions. Similarly, applications with special lighting algorithms (see Figure 5), or atypical behaviours such as abstract information visualization (see Figure 6), use their own bespoke structures and algorithms to maintain real-time performance.

Figure 5. Radiosity Lighting Model

Examples of work done with Maverik are given in the Maverik gallery on the web page. The important feature is that the video sequences (which are quite large files) are all captured directly from a 450MHz PIII Linux PC using a Voodoo2 3-D accelerator, and give a good impression of what performance can be attained with a cost-effective hardware setup.

What we have gained is the flexibility to plug together quite different applications within a single VE framework, without compromising performance. That is a gain, but seems to be at the expense of having the application (and application programmer) do all the hard work. However, because Maverik is a framework into which things can be plugged with little performance loss, it is easy to provide commonly useful facilities and objects to get many applications off the ground. For example, Maverik is distributed with libraries of common geometric primitives (cones, cylinders, teapots), an animated avatar, sample graphics file parsers, navigation facilities, 3-D math functions, quaternion code, stereo support, 3-D peripheral drivers for head-mounted displays and 3-D mice (see Figure 7), and so forth. If these are useful, then you can use them as a good starting point. If they are not, then you provide your own objects and algorithms, perhaps refining the samples provided.

Figure 7. Immersive Editing

This makes it fairly straightforward to get started using the system. In practice, this gives three levels of increasingly sophisticated use.

Using the default objects and algorithms provided. Used this way, Maverik looks like a VR building package for programmers. The tutorial documentation for the system leads one through the building of an environment with behaviour, collision detection and customized navigation.

Defining your own classes of objects. This is where you benefit from application-specific optimizations that you could supply by providing your own rendering and associated callbacks. The tutorial gives an example of this, and the supplied demonstration applications make extensive use of the techniques. Unfortunately, the offshore platform example uses commercially sensitive data, so cannot be supplied with the distribution.

Extension and modification of the Maverik kernel. Rendering and navigation callbacks are one set of facilities that can be customized. For the adventurous, more of the Maverik core functionality is also up for grabs: for example, alternate culling strategies, spatial management structures and input device support. But this still takes place within the context of a consistent framework that seems to make it easy to plug different parts together.

The real test for Maverik as a research vehicle is how well it allows such pieces to fit into the overall puzzle. The hope is that it will make the task of building VEs “as hard as it should be”, avoiding the feeling that one's efforts are mostly spent “fighting the system”. So far, given a little familiarity with the approach, this looks very promising.

Recent Maverik developments include a novel force-field navigation algorithm to guide participants around obstacles, and an algorithm for probing geometry ahead of a user to test whether it can be climbed—for example, ladders and steps (see Figure 8). These algorithms integrate into Maverik, but at the time of this writing are released as separate beta sources for testing.

Maverik has no support for audio or video within it. You will need to add your favourite mechanism for those yourself.

Maverik is a single-user VE micro-kernel. It does not include any assistance for running multiple VEs on different machines to share a world between people. To do that, you would need to synchronize the VEs yourself across the network. For just navigating around a shared VE, that's not so hard. Running a system with many users across wide area networks with multiple VEs, applications, interaction and behaviour is a more complex challenge. It's a challenge we are working on now with a complementary system that uses Maverik. We aim to release what we have on that under the GNU GPL later this year.

We are very keen to know how well these ideas work, or don't work, for other people—particularly those with experience of graphics programming. That way, we get to understand more about this whole area. Feedback should be addressed to maverik@aig.cs.man.ac.uk.